Extending the Machine Learning Abstraction Boundary: A Complex Systems Approach to Incorporate Societal Context

Jun 17, 2020

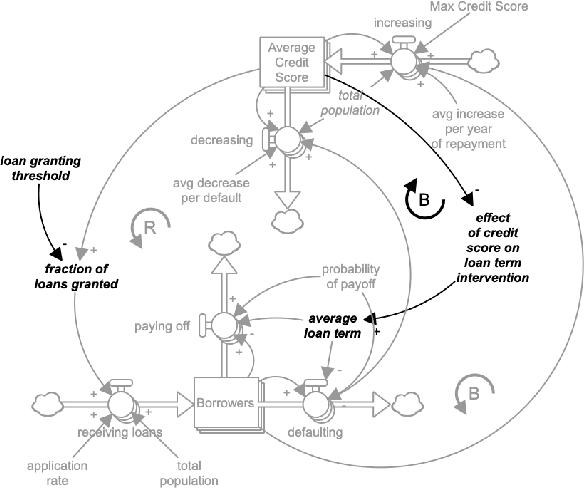

Machine learning (ML) fairness research tends to focus on mathematically based interventions on often opaque algorithms or models and/or their immediate inputs and outputs. Such oversimplified mathematical models abstract away the underlying societal context in which ML models are conceived, developed, and ultimately deployed. Because fairness is itself a socially constructed concept that originates from that societal context, as do the model inputs and the models themselves, a lack of in-depth understanding of societal context can easily undermine the pursuit of ML fairness. In this paper, we outline three new tools to improve the comprehension, identification, and representation of societal context. First, we propose a complex adaptive systems (CAS) based model and definition of societal context that will help researchers and product developers expand the abstraction boundary of ML fairness work to include societal context. Second, we introduce collaborative causal theory formation (CCTF) as a key capability for establishing a sociotechnical frame that incorporates diverse mental models and associated causal theories in modeling the problem and solution space for ML-based products. Finally, we identify community-based system dynamics (CBSD) as a powerful, transparent, and rigorous approach for practicing CCTF during all phases of the ML product development process. We conclude with a discussion of how these systems-theoretic approaches to understanding the societal context within which sociotechnical systems are embedded can improve the development of fair and inclusive ML-based products.
