ExamGAN and Twin-ExamGAN for Exam Script Generation

Aug 22, 2021

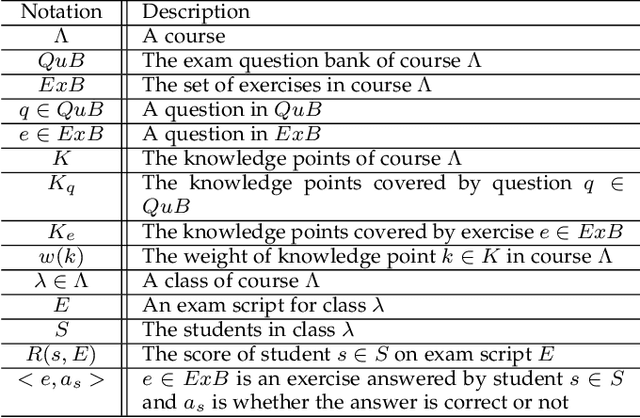

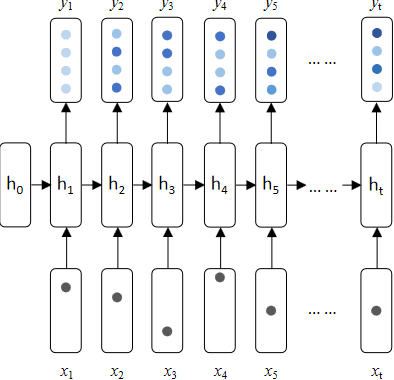

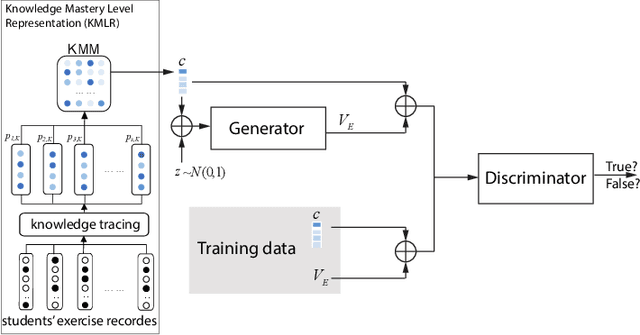

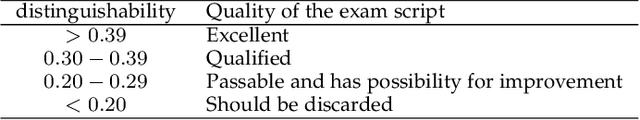

Learning management systems (LMSs) are now widely used at every educational stage, from primary to tertiary education, for student administration, documentation, tracking, reporting, and the delivery of educational courses, training programs, and learning and development programs. To support effective assessment of learning outcomes, the exam script generation problem has attracted considerable attention and has been investigated recently. However, research in this field is still at an early stage, and there are opportunities to improve the quality of generated exam scripts in various respects. In particular, two essential issues have been largely ignored by existing solutions. First, given a course, it is not yet known how to generate an exam script that results in a desirable distribution of student scores in a class (or across different classes). Second, although the need arises frequently in practice, it is not yet known how to generate a pair of high-quality exam scripts that are equivalent in assessment (i.e., student scores are comparable whichever script is taken) but have significantly different sets of questions. To fill this gap, this paper proposes ExamGAN (Exam Script Generative Adversarial Network) to generate high-quality exam scripts, and then extends ExamGAN to T-ExamGAN (Twin-ExamGAN) to generate a pair of high-quality exam scripts. Extensive experiments on three benchmark datasets verify the superiority of the proposed solutions over the state of the art in various respects. Moreover, a case study demonstrates the effectiveness of the proposed solution in a real teaching scenario.
