Exact Feature Collisions in Neural Networks

Paper and Code

May 31, 2022

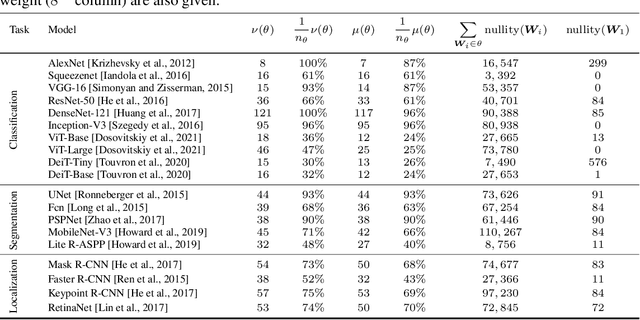

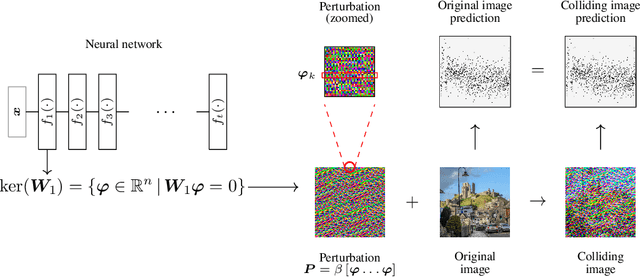

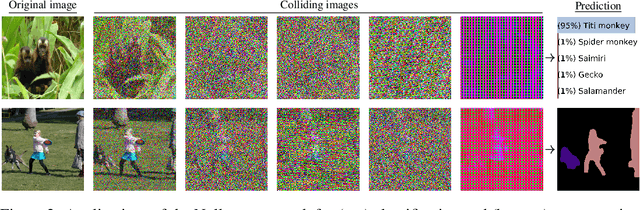

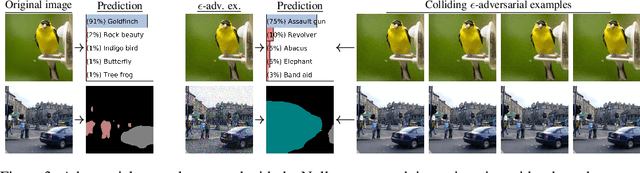

Predictions made by deep neural networks were shown to be highly sensitive to small changes made in the input space where such maliciously crafted data points containing small perturbations are being referred to as adversarial examples. On the other hand, recent research suggests that the same networks can also be extremely insensitive to changes of large magnitude, where predictions of two largely different data points can be mapped to approximately the same output. In such cases, features of two data points are said to approximately collide, thus leading to the largely similar predictions. Our results improve and extend the work of Li et al.(2019), laying out theoretical grounds for the data points that have colluding features from the perspective of weights of neural networks, revealing that neural networks not only suffer from features that approximately collide but also suffer from features that exactly collide. We identify the necessary conditions for the existence of such scenarios, hereby investigating a large number of DNNs that have been used to solve various computer vision problems. Furthermore, we propose the Null-space search, a numerical approach that does not rely on heuristics, to create data points with colliding features for any input and for any task, including, but not limited to, classification, localization, and segmentation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge