Estimating Risk and Uncertainty in Deep Reinforcement Learning


Jun 07, 2019

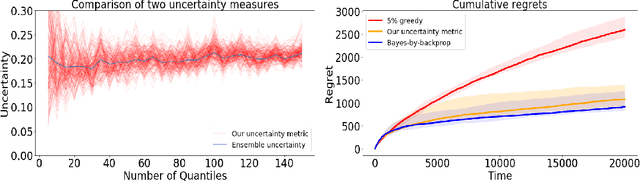

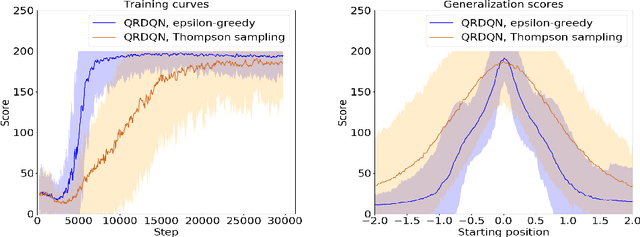

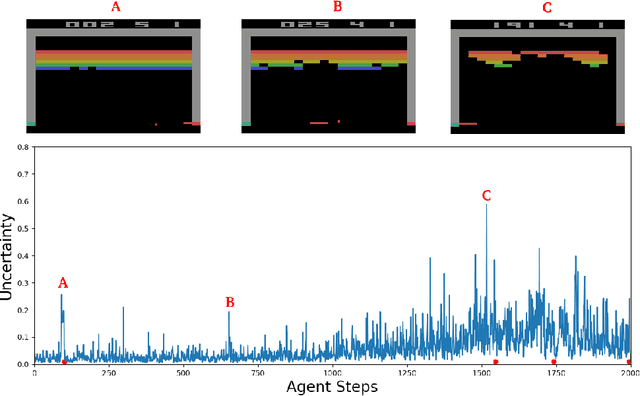

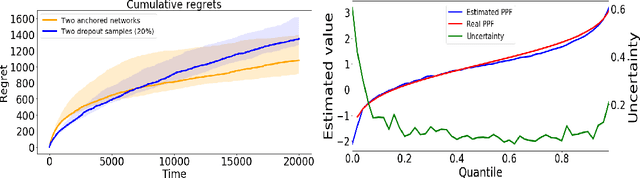

This paper presents a novel method for separately estimating aleatoric risk and epistemic uncertainty in deep reinforcement learning. Aleatoric risk, which arises from inherently stochastic environments or agents, must be accounted for in the design of risk-sensitive algorithms. Epistemic uncertainty, which stems from limited data, is important both for risk sensitivity and for efficient exploration of an environment. We first present a Bayesian framework for learning the return distribution in reinforcement learning, which provides theoretical foundations for quantifying both types of uncertainty. Based on this framework, we show that the disagreement between only two neural networks is sufficient to produce a low-variance estimate of the epistemic uncertainty on the return distribution, thus providing a simple and computationally cheap uncertainty metric. We present experiments that illustrate our method and some of its applications.
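To make the two-network idea concrete, the following is a minimal sketch, not the paper's implementation. It assumes each network outputs a set of quantile estimates of the return for every action, which is one common way to represent a return distribution. The function name `uncertainty_from_two_estimates`, the array shapes, and the specific formulas (spread across quantiles for aleatoric risk, mean squared disagreement between the two estimates for epistemic uncertainty) are illustrative assumptions.

```python
import numpy as np


def uncertainty_from_two_estimates(quantiles_a, quantiles_b):
    """Split uncertainty into aleatoric and epistemic parts (illustrative only).

    quantiles_a, quantiles_b: arrays of shape (n_actions, n_quantiles) holding
    the return quantiles predicted by two independently trained estimators.
    These inputs and the formulas below are assumptions made for this sketch.
    """
    qa = np.asarray(quantiles_a, dtype=float)
    qb = np.asarray(quantiles_b, dtype=float)
    if qa.shape != qb.shape:
        raise ValueError("both estimates must have the same shape")

    # Average the two estimates to get a single return distribution per action.
    mean_quantiles = 0.5 * (qa + qb)

    # Expected return per action: mean over the quantile estimates.
    expected_return = mean_quantiles.mean(axis=-1)

    # Aleatoric proxy: spread of the averaged return distribution itself.
    aleatoric = mean_quantiles.var(axis=-1)

    # Epistemic proxy: disagreement between the two estimators,
    # averaged over quantiles.
    epistemic = 0.5 * np.mean((qa - qb) ** 2, axis=-1)

    return expected_return, aleatoric, epistemic


# Hypothetical predictions for two actions and three quantiles.
# Action 0: the estimators agree, but the return is spread out.
# Action 1: each estimator predicts a fixed return, but they disagree.
net_a = np.array([[0.0, 1.0, 2.0], [5.0, 5.0, 5.0]])
net_b = np.array([[0.0, 1.0, 2.0], [7.0, 7.0, 7.0]])
expected_return, aleatoric, epistemic = uncertainty_from_two_estimates(net_a, net_b)
```

In this toy input, action 0 has only aleatoric spread and action 1 has only disagreement between the estimators, so the two quantities separate cleanly. A risk-sensitive agent could penalize the first, while an exploring agent could seek out the second.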
