Enhancing Local Feature Learning for 3D Point Cloud Processing using Unary-Pairwise Attention

Mar 17, 2022

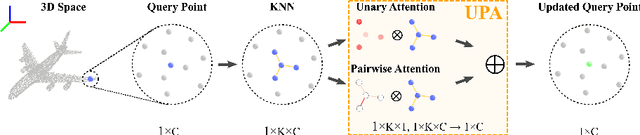

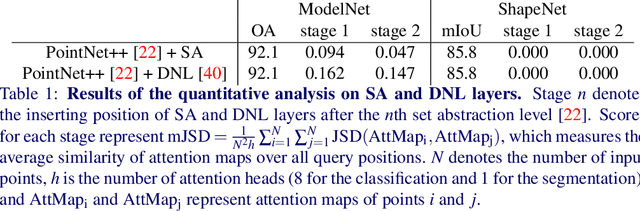

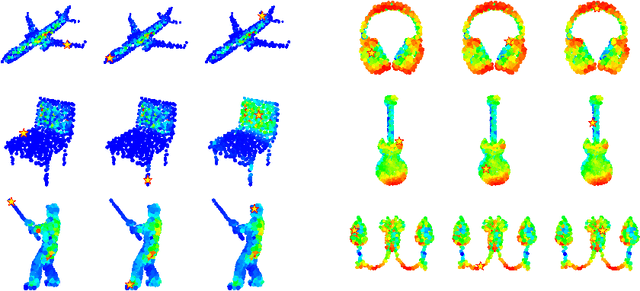

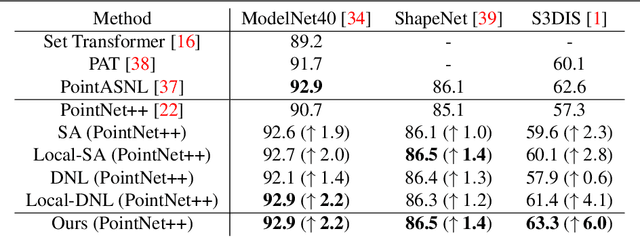

We present a simple but effective attention mechanism, unary-pairwise attention (UPA), for modeling relationships within 3D point clouds. It is motivated by the observation that standard self-attention (SA), which operates globally, tends to produce nearly identical attention maps for different query positions, which reveals the difficulty of learning query-independent and query-dependent information jointly. We therefore reformulate SA into separate query-independent (unary) and query-dependent (pairwise) components to facilitate the learning of both terms. In contrast to SA, UPA ensures query dependence by operating locally. Extensive experiments show that UPA consistently outperforms SA on various point cloud understanding tasks, including shape classification, part segmentation, and scene segmentation. Moreover, simply equipping the popular PointNet++ with UPA yields results that outperform or are on par with state-of-the-art attention-based approaches. In addition, UPA systematically boosts the performance of both standard and modern networks when integrated into them as a compositional module.
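The abstract does not spell out the exact formulation, so the following is only a rough sketch of what a unary branch plus a locally restricted pairwise branch could look like. Everything here is an assumption made for illustration: the function names (`upa_sketch`, `knn`), the linear unary score `w_unary`, the k-nearest-neighbor definition of "local", and the choice to sum the two branches are hypothetical and are not taken from the paper or its released code.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    es = [math.exp(x - m) for x in xs]
    s = sum(es)
    return [e / s for e in es]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [dot(row, x) for row in W]


def knn(points, i, k):
    """Indices of the k points closest to point i (point i included)."""
    dists = [(sum((a - b) ** 2 for a, b in zip(points[i], p)), j)
             for j, p in enumerate(points)]
    return [j for _, j in sorted(dists)[:k]]


def upa_sketch(points, feats, Wq, Wk, Wv, w_unary, k):
    """Hypothetical unary + pairwise attention over local neighborhoods.

    Unary branch: each point gets a score that ignores the query.
    Pairwise branch: scaled query-key dot products, restricted to
    the query's k nearest neighbors (assumed meaning of "local").
    """
    keys = [matvec(Wk, f) for f in feats]
    values = [matvec(Wv, f) for f in feats]
    unary_logit = [dot(w_unary, f) for f in feats]  # no query involved

    out = []
    for i in range(len(points)):
        nbrs = knn(points, i, k)
        q = matvec(Wq, feats[i])
        a_unary = softmax([unary_logit[j] for j in nbrs])
        a_pair = softmax([dot(q, keys[j]) / math.sqrt(len(q)) for j in nbrs])
        agg = [0.0] * len(values[0])
        for au, ap, j in zip(a_unary, a_pair, nbrs):
            for c in range(len(agg)):
                # Assumption: the two branches are combined by addition.
                agg[c] += (au + ap) * values[j][c]
        out.append(agg)
    return out


if __name__ == "__main__":
    random.seed(0)
    n, d = 6, 4
    pts = [[random.random() for _ in range(3)] for _ in range(n)]
    x = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
    W = lambda: [[random.gauss(0, 0.5) for _ in range(d)] for _ in range(d)]
    y = upa_sketch(pts, x, W(), W(), W(), [0.1] * d, k=3)
    print(len(y), len(y[0]))  # one d-dimensional output per point
```

In this sketch the unary logits are computed once per point and reused for every query, while the pairwise weights are recomputed for each query over its own neighborhood, which is one simple way to keep the two kinds of information in separate terms.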
