End-to-end multi-modal product matching in fashion e-commerce

Paper and Code

Mar 18, 2024

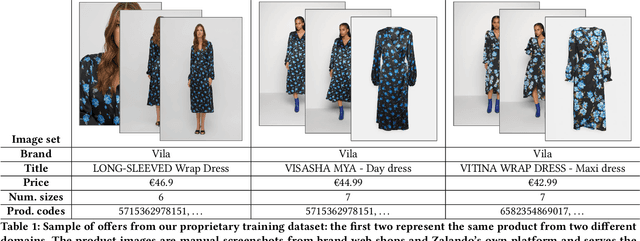

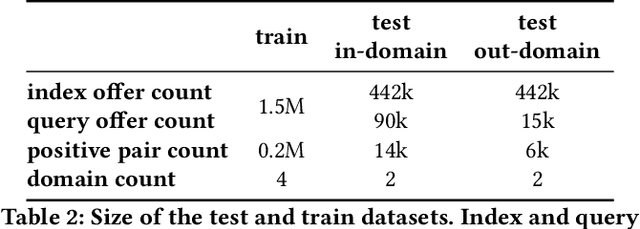

Product matching, the task of identifying different representations of the same product for better discoverability, curation, and pricing, is a key capability for online marketplace and e-commerce companies. We present a robust multi-modal product matching system in an industry setting, where large datasets, data distribution shifts and unseen domains pose challenges. We compare different approaches and conclude that a relatively straightforward projection of pretrained image and text encoders, trained through contrastive learning, yields state-of-the-art results, while balancing cost and performance. Our solution outperforms single modality matching systems and large pretrained models, such as CLIP. Furthermore we show how a human-in-the-loop process can be combined with model-based predictions to achieve near perfect precision in a production system.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge