Enabling Capsule Networks at the Edge through Approximate Softmax and Squash Operations

Paper and Code

Jun 21, 2022

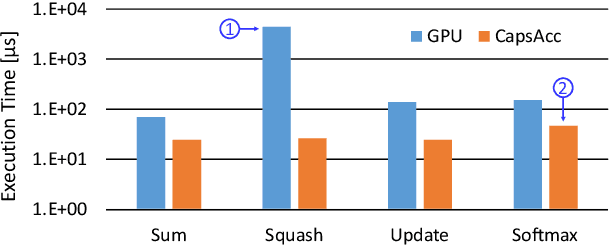

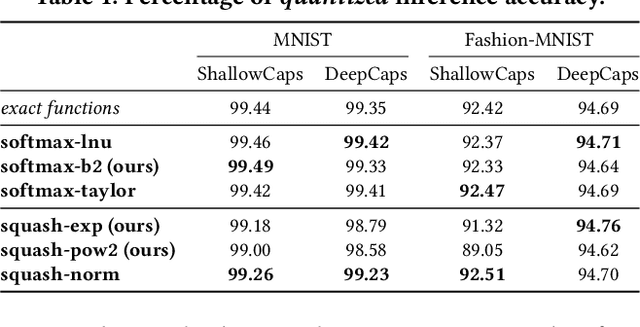

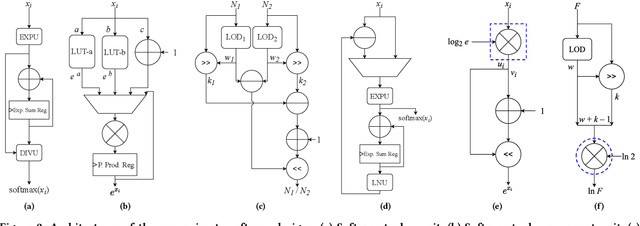

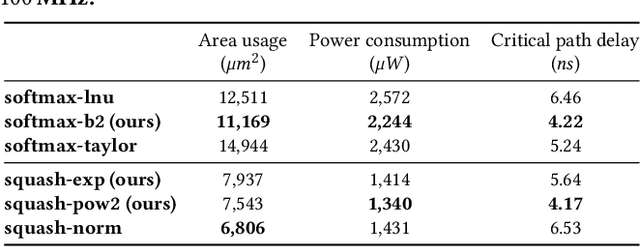

Complex deep neural networks such as Capsule Networks (CapsNets) offer high learning capability at the cost of compute-intensive operations. To enable their deployment on edge devices, we propose leveraging approximate computing to design approximate variants of complex operations such as softmax and squash. In our experiments, we evaluate the tradeoffs between the area, power consumption, and critical path delay of the designs, implemented with the ASIC design flow, and the accuracy of the quantized CapsNets, compared to the exact functions.
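For readers unfamiliar with the two operations named in the abstract, the following is a minimal Python sketch of the exact softmax and squash functions as they are commonly defined for CapsNets. The function `softmax_base2` is purely illustrative: replacing e^x with 2^x is one well-known hardware-friendly simplification, and it is an assumption here, not necessarily one of the designs evaluated in the paper. All function names are hypothetical.

```python
import math

def softmax(xs):
    """Exact softmax, shifted by the maximum for numerical stability."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_base2(xs):
    """Illustrative approximation (an assumption, not the paper's design):
    use 2**x instead of e**x, which maps to shifts in fixed-point hardware."""
    m = max(xs)
    pows = [2.0 ** (x - m) for x in xs]
    total = sum(pows)
    return [p / total for p in pows]

def squash(s, eps=1e-9):
    """Exact squash: keep the direction of s and rescale its length
    to ||s||^2 / (1 + ||s||^2), which always lies in [0, 1)."""
    sq_norm = sum(c * c for c in s)
    norm = math.sqrt(sq_norm)
    scale = sq_norm / (1.0 + sq_norm) / (norm + eps)  # eps avoids division by zero
    return [scale * c for c in s]

logits = [2.0, 1.0, 0.1]
exact = softmax(logits)
approx = softmax_base2(logits)
# Largest per-element deviation introduced by the base-2 simplification.
max_err = max(abs(a - b) for a, b in zip(exact, approx))
v = squash([3.0, 4.0])
```

Both softmax variants return values that sum to 1 and preserve the ordering of the inputs; the base-2 version yields a flatter distribution, which is the kind of accuracy cost that must be weighed against hardware savings.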

* To appear at the ACM/IEEE International Symposium on Low Power Electronics and Design (ISLPED), August 2022, Boston, MA, USA
