EMVLight: A Decentralized Reinforcement Learning Framework for Efficient Passage of Emergency Vehicles

Paper and Code

Sep 19, 2021

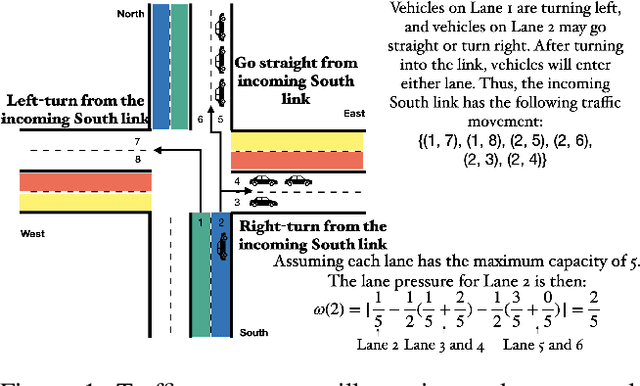

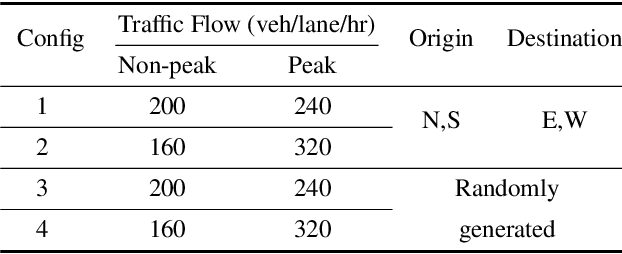

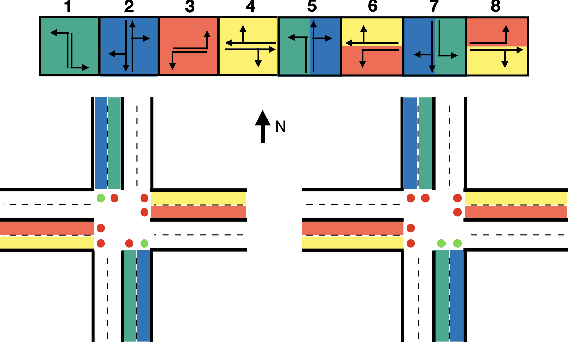

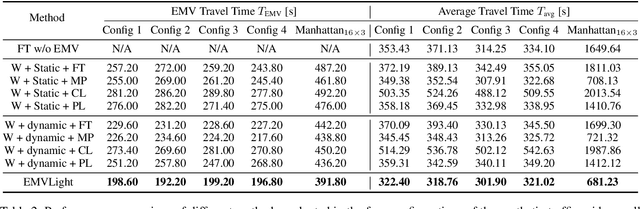

Emergency vehicles (EMVs) play a crucial role in responding to time-critical events in urban areas, such as medical emergencies and fire outbreaks. The less time EMVs spend traveling through traffic, the more likely they are to help save lives and reduce property loss. To reduce EMV travel time, prior work has used route optimization based on historical traffic-flow data and traffic signal pre-emption based on the optimal route. However, traffic signal pre-emption dynamically changes the traffic flow, which in turn modifies the optimal route of an EMV. In addition, traffic signal pre-emption usually causes significant disturbances in traffic flow and subsequently increases the travel time of non-EMVs. In this paper, we propose EMVLight, a decentralized reinforcement learning (RL) framework for simultaneous dynamic routing and traffic signal control. EMVLight extends Dijkstra's algorithm to efficiently update the optimal route for an EMV in real time as it travels through the traffic network. The decentralized RL agents learn network-level cooperative traffic signal phase strategies that reduce not only EMV travel time but also the average travel time of non-EMVs in the network. We demonstrate this benefit through comprehensive experiments on synthetic and real-world maps, which show that EMVLight outperforms benchmark transportation engineering techniques and existing RL-based signal control methods.
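To make the routing feedback loop concrete, the following is a minimal sketch of re-running plain Dijkstra's algorithm when link travel times change. It is not the extension described in the paper, whose details are not given here. The toy network, the link travel times, and the function names `shortest_times_to` and `route` are all hypothetical and chosen only for illustration.

```python
import heapq


def shortest_times_to(dest, travel_time):
    """Run Dijkstra backwards from dest.

    travel_time maps a directed link (u, v) to its current travel time in seconds.
    Returns (eta, next_hop): the estimated time from each node to dest, and the
    next intersection to head for on a fastest route.
    """
    # Reverse adjacency: for each node v, the links that lead into it.
    incoming = {}
    for (u, v), t in travel_time.items():
        incoming.setdefault(v, []).append((u, t))

    eta = {dest: 0.0}
    next_hop = {}
    heap = [(0.0, dest)]
    while heap:
        d, v = heapq.heappop(heap)
        if d > eta.get(v, float("inf")):
            continue  # stale heap entry
        for u, t in incoming.get(v, []):
            nd = d + t
            if nd < eta.get(u, float("inf")):
                eta[u] = nd
                next_hop[u] = v
                heapq.heappush(heap, (nd, u))
    return eta, next_hop


def route(src, dest, next_hop):
    """Follow next_hop pointers from src until dest is reached."""
    path = [src]
    while path[-1] != dest:
        path.append(next_hop[path[-1]])
    return path


# Hypothetical four-intersection network (times in seconds).
travel_time = {("A", "B"): 10, ("B", "D"): 10, ("A", "C"): 15, ("C", "D"): 15}
eta_before, hops_before = shortest_times_to("D", travel_time)
route_before = route("A", "D", hops_before)

# Assume signal changes congest link B -> D; recompute with the updated time.
travel_time[("B", "D")] = 40
eta_after, hops_after = shortest_times_to("D", travel_time)
route_after = route("A", "D", hops_after)

print(route_before, eta_before["A"])
print(route_after, eta_after["A"])
```

In this toy case the fastest route switches from A, B, D to A, C, D once the B to D link slows down, which illustrates why a route fixed in advance can stop being optimal after signals are changed. Running the search backwards from the destination gives every intersection its own time-to-destination estimate in a single pass, a design choice made here for convenience rather than one taken from the paper.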
