Emotion and Theme Recognition in Music with Frequency-Aware RF-Regularized CNNs


Oct 28, 2019

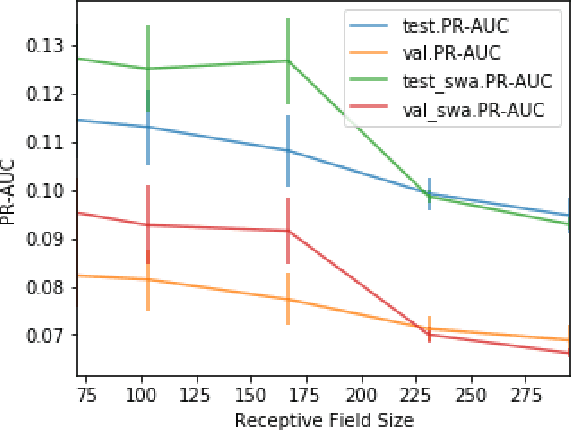

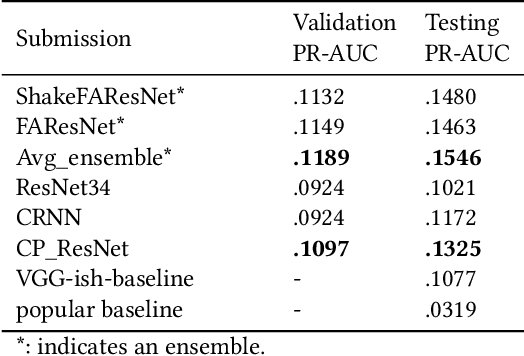

We present the CP-JKU submission to MediaEval 2019: a Receptive Field (RF)-regularized and Frequency-Aware CNN approach for tagging music with emotion/mood labels. We investigate how the RF of the CNNs affects their performance on this dataset. We observe that ResNets with smaller receptive fields, originally adapted for acoustic scene classification, also perform well in the emotion tagging task. We improve the performance of such architectures using Frequency Awareness and Shake-Shake regularization, both of which were used in previous work on general acoustic recognition tasks.
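To make the notion of receptive field concrete, the following is a minimal sketch of the standard recurrence for the RF of a stack of convolution and pooling layers along one axis. The layer lists `wide` and `narrow` are hypothetical illustrations, not the architectures used in the submission. Swapping some 3x3 kernels for 1x1 kernels is assumed here as one possible way to shrink the RF.

```python
def receptive_field(layers):
    """Return the receptive field (in input bins) along one axis.

    `layers` is a list of (kernel_size, stride) pairs, ordered from the
    input to the output of the network.
    """
    rf, jump = 1, 1  # jump = distance in input bins between adjacent outputs
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # each layer widens the RF by (k - 1) * jump
        jump *= stride             # strides compound the spacing of later layers
    return rf


# Hypothetical layer stacks (not taken from the paper): conv/pool pairs.
wide = [(3, 1), (3, 1), (2, 2), (3, 1), (3, 1), (2, 2), (3, 1), (3, 1)]
# Same depth, but several 3x3 convolutions replaced by 1x1 convolutions.
narrow = [(3, 1), (1, 1), (2, 2), (3, 1), (1, 1), (2, 2), (1, 1), (1, 1)]

print(receptive_field(wide))    # 32
print(receptive_field(narrow))  # 10
```

Both stacks have the same depth, yet the second covers a much smaller span of the input spectrogram, which is the kind of quantity an RF investigation varies.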

* MediaEval'19, 27-29 October 2019, Sophia Antipolis, France
