Eluder Dimension and Generalized Rank

Paper and Code

Apr 14, 2021

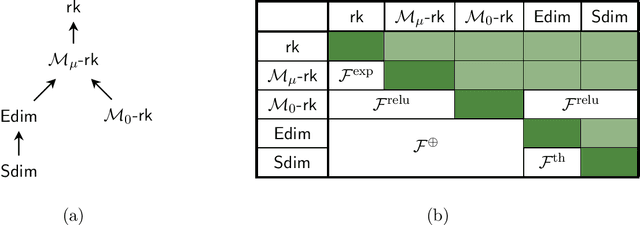

We study the relationship between the eluder dimension for a function class and a generalized notion of rank, defined for any monotone "activation" $\sigma : \mathbb{R} \to \mathbb{R}$, which corresponds to the minimal dimension required to represent the class as a generalized linear model. When $\sigma$ has derivatives bounded away from $0$, it is known that $\sigma$-rank gives rise to an upper bound on eluder dimension for any function class; we show however that eluder dimension can be exponentially smaller than $\sigma$-rank. We also show that the condition on the derivative is necessary; namely, when $\sigma$ is the $\mathrm{relu}$ activation, we show that eluder dimension can be exponentially larger than $\sigma$-rank.

* Technical Note

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge