EGO-CH: Dataset and Fundamental Tasks for Visitors Behavioral Understanding using Egocentric Vision

Paper and Code

Feb 03, 2020

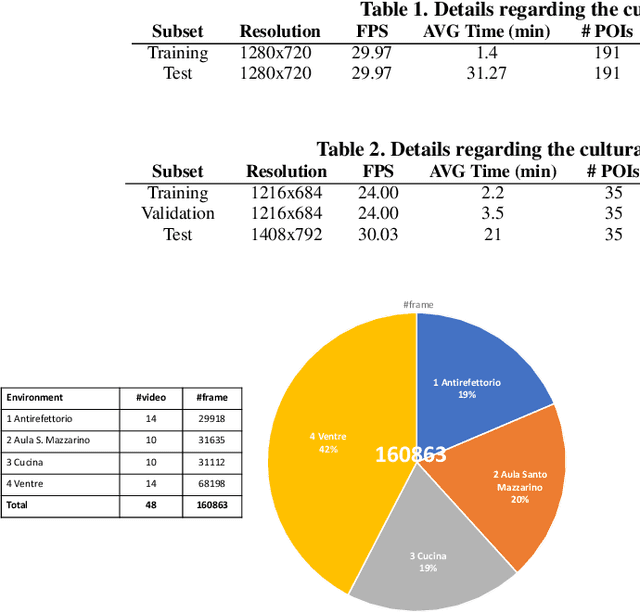

Equipping visitors of a cultural site with a wearable device makes it easy to collect information about their preferences, which can be exploited to improve the enjoyment of cultural goods through augmented reality. Moreover, egocentric video can be processed using computer vision and machine learning to enable automated analysis of visitors' behavior. The inferred information can be used both online, to assist the visitor, and offline, to support the manager of the site. Despite the positive impact such technologies can have on cultural heritage, the topic is currently understudied because few public datasets are suitable for studying these problems. To address this issue, we propose EGOcentric-Cultural Heritage (EGO-CH), the first dataset of egocentric videos for understanding visitors' behavior in cultural sites. The dataset was collected in two cultural sites and includes more than $27$ hours of video acquired by $70$ subjects, with labels for $26$ environments and over $200$ different Points of Interest. A large subset of the dataset, consisting of $60$ videos, is associated with surveys filled out by real visitors. To encourage research on the topic, we propose $4$ challenging tasks (room-based localization, point of interest/object recognition, object retrieval, and survey prediction) that are useful for understanding visitors' behavior, and we report baseline results on the dataset.
