Efficient Structure-preserving Support Tensor Train Machine

Feb 12, 2020

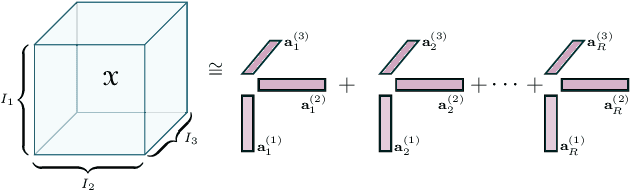

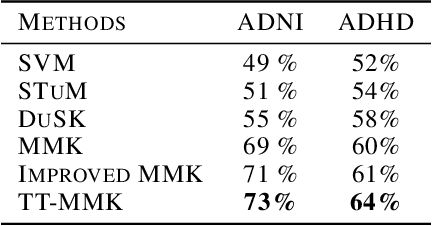

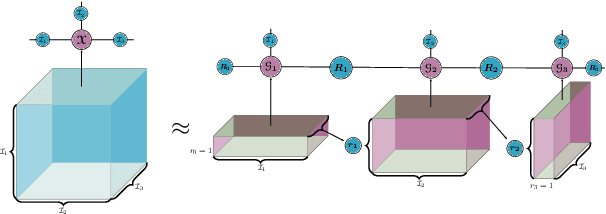

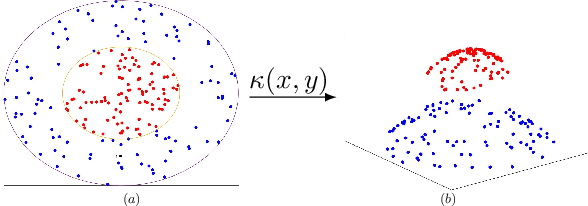

Deploying the multi-relational tensor structure of a high-dimensional feature space more efficiently improves the performance of machine learning algorithms. However, one encounters the curse of dimensionality, and working with vectorized data fails to preserve the data structure. To handle the nonlinear relationships in tensor data more economically, we propose the Tensor Train Multi-way Multi-level Kernel (TT-MMK). This technique combines kernel filtering of the initial input data (Kernelized Tensor Train, KTT), stable reparametrization of the KTT in the Canonical Polyadic (CP) format, and the Dual Structure-preserving Support Vector Machine (SVM) Kernel for revealing nonlinear relationships. We demonstrate numerically that the TT-MMK method is more reliable computationally, is less sensitive to tuning parameters, and gives higher prediction accuracy in SVM classification than similar tensorised SVM methods.
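
To make the pipeline named in the abstract more concrete, here is a minimal NumPy sketch of two of its ingredients for 3-way data: a truncated TT-SVD whose cores are rewritten exactly as CP factors, and a dual structure-preserving kernel evaluated on those factors. It is an illustration under assumptions, not the authors' implementation. The function names (`tt_svd_3way`, `tt_to_cp`, `dusk_kernel`), the Gaussian base kernel, and the width `sigma` are all hypothetical choices, and the sketch omits both the kernel filtering step and whatever stabilization the paper applies to the factors.

```python
import numpy as np

def tt_svd_3way(X, r1, r2):
    """Truncated TT-SVD of a 3-way array.

    Returns cores G1 (n1, r1), G2 (r1, n2, r2), G3 (r2, n3).
    """
    n1, n2, n3 = X.shape
    U, s, Vt = np.linalg.svd(X.reshape(n1, n2 * n3), full_matrices=False)
    r1 = min(r1, len(s))
    G1 = U[:, :r1]
    # Carry the singular values into the remainder and split off mode 2.
    rest = (s[:r1, None] * Vt[:r1]).reshape(r1 * n2, n3)
    U, s, Vt = np.linalg.svd(rest, full_matrices=False)
    r2 = min(r2, len(s))
    G2 = U[:, :r2].reshape(r1, n2, r2)
    G3 = s[:r2, None] * Vt[:r2]
    return G1, G2, G3

def tt_to_cp(G1, G2, G3):
    """Rewrite TT cores as CP factors of rank r1*r2.

    CP term number a1*r2 + a2 is G1[:, a1] x G2[a1, :, a2] x G3[a2, :].
    """
    r1, n2, r2 = G2.shape
    A = np.repeat(G1, r2, axis=1)                    # (n1, r1*r2)
    B = G2.transpose(1, 0, 2).reshape(n2, r1 * r2)   # (n2, r1*r2)
    C = np.tile(G3.T, (1, r1))                       # (n3, r1*r2)
    return A, B, C

def dusk_kernel(fx, fy, sigma=1.0):
    """Sum over all pairs of CP terms of the product of per-mode Gaussian kernels."""
    K = 1.0
    for Fx, Fy in zip(fx, fy):
        # Pairwise squared distances between columns of Fx and Fy: shape (Rx, Ry).
        sq = ((Fx[:, :, None] - Fy[:, None, :]) ** 2).sum(axis=0)
        K = K * np.exp(-sq / (2.0 * sigma ** 2))
    return float(K.sum())

# Usage on random data (assumed shapes and ranks, for illustration only).
rng = np.random.default_rng(0)
X = rng.standard_normal((4, 5, 6))
Y = rng.standard_normal((4, 5, 6))
fx = tt_to_cp(*tt_svd_3way(X, r1=2, r2=2))
fy = tt_to_cp(*tt_svd_3way(Y, r1=2, r2=2))
k_xy = dusk_kernel(fx, fy, sigma=1.0)
```

A Gram matrix built from `dusk_kernel` over all training pairs could then be passed to any SVM solver that accepts a precomputed kernel. Note that factors obtained from a plain SVD are not unique (for example, in sign), which is one reason a stable reparametrization matters before comparing factors across samples.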
