ECLM: Efficient Edge-Cloud Collaborative Learning with Continuous Environment Adaptation


Nov 18, 2023

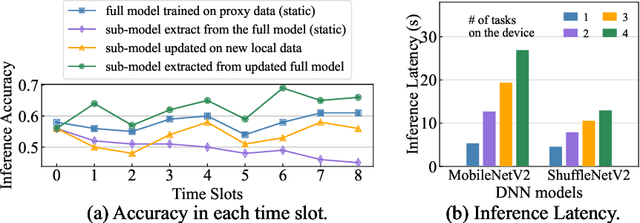

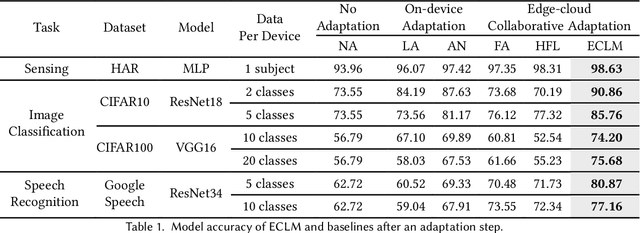

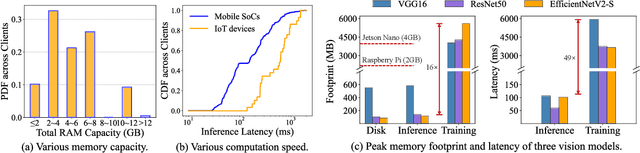

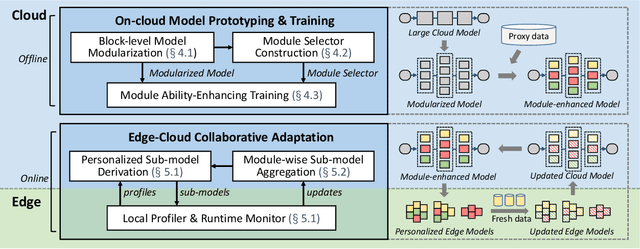

Pervasive mobile AI applications primarily employ one of two learning paradigms: cloud-based learning (with powerful large models) or on-device learning (with lightweight small models). Each has its advantages, but neither can effectively handle dynamic edge environments with frequent data distribution shifts and on-device resource fluctuations, so both inevitably suffer performance degradation. In this paper, we propose ECLM, an edge-cloud collaborative learning framework for rapid model adaptation to dynamic edge environments. We first propose a novel block-level model decomposition design that decomposes the original large cloud model into multiple combinable modules. By flexibly combining a subset of the modules, this design enables both the derivation of compact, task-specific sub-models for heterogeneous edge devices from the large cloud model and the periodic, seamless integration of new knowledge learned on these devices back into the cloud model. As such, ECLM ensures that the cloud model can always provide up-to-date sub-models for edge devices. We further propose an end-to-end learning framework that incorporates the modular model design into an efficient model adaptation pipeline comprising an offline on-cloud model prototyping and training stage and an online edge-cloud collaborative adaptation stage. Extensive experiments over various datasets demonstrate that ECLM significantly improves model performance (e.g., an 18.89% accuracy increase) and resource efficiency (e.g., a 7.12x reduction in communication cost) when adapting models to dynamic edge environments, through efficient collaboration between the edge and cloud models.
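The modular idea in the abstract can be illustrated with a toy sketch. This is not the paper's implementation: the dictionary layout, the names `derive_sub_model` and `integrate`, the hand-picked module selection, and the plain averaging used to merge device updates are all assumptions made for illustration. It only shows how a cloud model split into per-block modules could hand out compact sub-models and later fold device-side changes back in.

```python
from collections import defaultdict

# Hypothetical cloud model: each block holds several combinable modules,
# and each module is represented by a flat list of weights.
cloud_model = {
    "block0": {"m0": [0.0, 0.0], "m1": [1.0, 1.0], "m2": [2.0, 2.0]},
    "block1": {"m0": [0.5, 0.5], "m1": [1.5, 1.5]},
}

def derive_sub_model(cloud, selection):
    """Copy the selected modules of each block into a compact sub-model."""
    return {
        block: {mid: list(cloud[block][mid]) for mid in module_ids}
        for block, module_ids in selection.items()
    }

def integrate(cloud, sub_models):
    """Merge device updates into the cloud model in place.

    Assumption: each module is replaced by the element-wise average of the
    versions returned by the devices that hold it. Modules that no device
    holds are left untouched.
    """
    collected = defaultdict(list)
    for sub in sub_models:
        for block, modules in sub.items():
            for mid, weights in modules.items():
                collected[(block, mid)].append(weights)
    for (block, mid), versions in collected.items():
        cloud[block][mid] = [sum(ws) / len(ws) for ws in zip(*versions)]

# Two heterogeneous devices receive different module subsets.
device_a = derive_sub_model(cloud_model, {"block0": ["m0", "m1"], "block1": ["m0"]})
device_b = derive_sub_model(cloud_model, {"block0": ["m1"], "block1": ["m1"]})

# Stand-in for on-device training: both devices change the shared module m1.
device_a["block0"]["m1"] = [2.0, 2.0]
device_b["block0"]["m1"] = [4.0, 4.0]

integrate(cloud_model, [device_a, device_b])
print(cloud_model["block0"]["m1"])
```

In this sketch each device only ever transfers the modules it was assigned, which is the intuition behind the communication savings reported above. How ECLM actually selects modules and merges updates is not specified in this abstract.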
