EARN Fairness: Explaining, Asking, Reviewing and Negotiating Artificial Intelligence Fairness Metrics Among Stakeholders

Jul 16, 2024

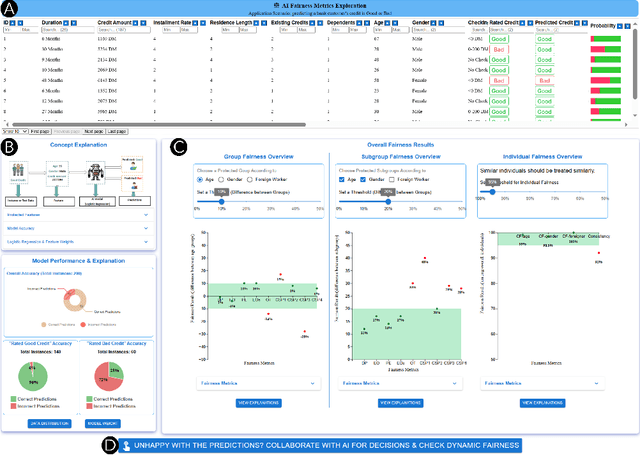

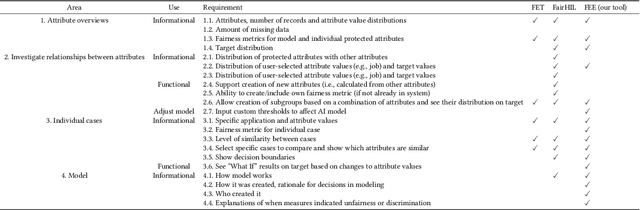

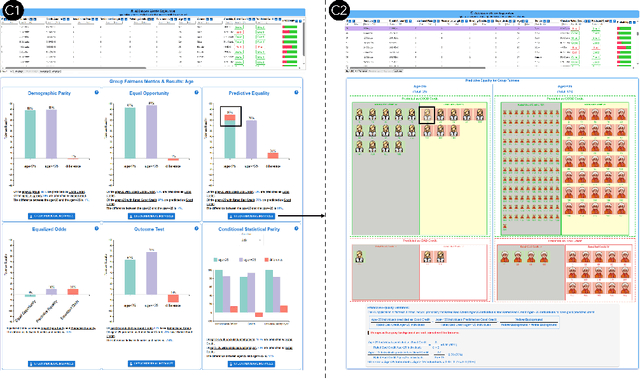

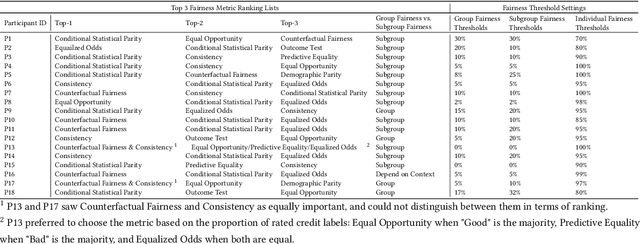

Numerous fairness metrics have been proposed and employed by artificial intelligence (AI) experts to quantitatively measure bias and define fairness in AI models. Recognizing the need to accommodate stakeholders' diverse understandings of fairness, researchers have begun to solicit stakeholder input. However, conveying AI fairness metrics to stakeholders without AI expertise, capturing their personal preferences, and seeking a collective consensus remain challenging and underexplored. To bridge this gap, we propose a new framework, EARN Fairness, which facilitates collective metric decisions among stakeholders without requiring AI expertise. The framework features an adaptable interactive system and a stakeholder-centered EARN Fairness process to Explain fairness metrics, Ask stakeholders for their personal metric preferences, Review metrics collectively, and Negotiate a consensus on metric selection. To gather empirical results, we applied the framework to a credit rating scenario and conducted a user study involving 18 decision subjects without AI knowledge. In individual sessions, we identified their personal metric preferences and their acceptable level of unfairness. In subsequent team sessions, we uncovered how they reached consensus on metrics. Our work shows that the EARN Fairness framework enables stakeholders to express personal preferences and reach consensus, providing practical guidance for implementing human-centered AI fairness in high-risk contexts. Through this approach, we aim to harmonize the fairness expectations of diverse stakeholders, fostering a more equitable and inclusive approach to AI fairness.
