Dynamic Relay Selection and Power Allocation for Minimizing Outage Probability: A Hierarchical Reinforcement Learning Approach


Nov 10, 2020

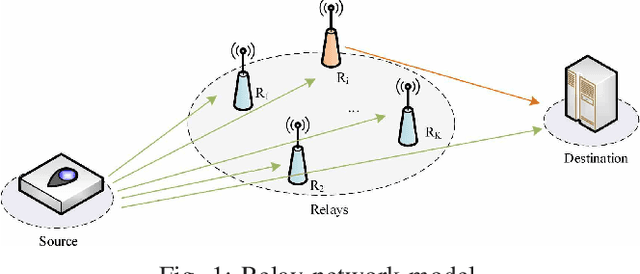

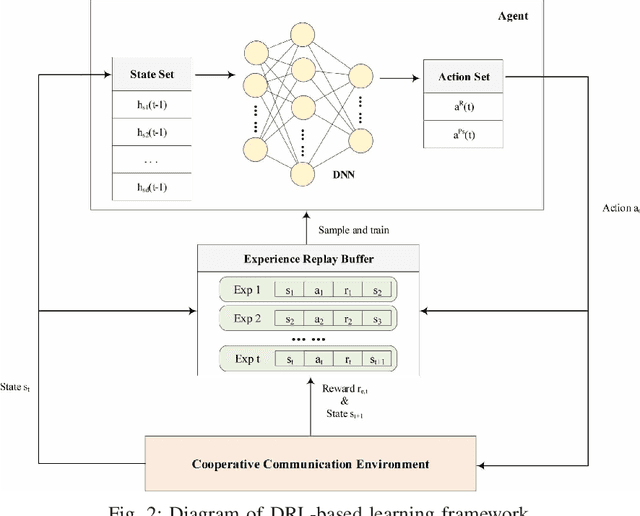

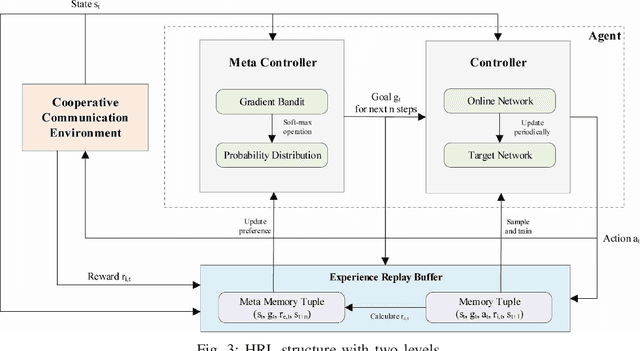

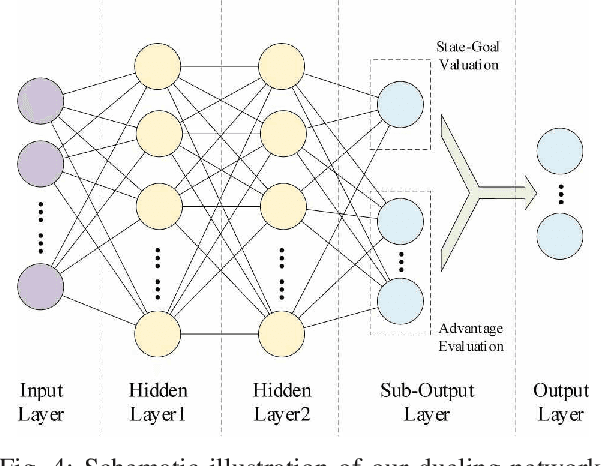

Cooperative communication is an effective approach to improving spectrum utilization. Most existing studies of relay selection and power allocation in cooperative communication assume that channel state information (CSI) is available. In practice, however, accurate CSI is difficult to obtain. In this paper, we consider an outage-based method subject to a total transmission power constraint in a two-hop cooperative communication scenario. We use reinforcement learning (RL) methods to learn relay selection and power allocation strategies that need no prior knowledge of CSI and rely only on interaction with the communication environment. Conventional RL methods, including common deep reinforcement learning (DRL) methods, perform poorly when the search space is large. We therefore first propose a practical DRL framework with an outage-based reward function, which serves as a baseline. We then propose a novel hierarchical reinforcement learning (HRL) algorithm for dynamic relay selection and power allocation. A key difference from other RL-based methods in the existing literature is that our HRL approach decomposes relay selection and power allocation into two hierarchical optimization objectives, which are trained at different levels. Simulation results show that our HRL algorithm trains faster and achieves a lower outage probability than traditional DRL methods, especially in a sparse-reward environment.
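To make the two-level decomposition concrete, here is a minimal sketch. It is not the paper's algorithm: it replaces the deep networks with stateless tabular value estimates, and it assumes a decode-and-forward link with Rayleigh fading and a binary reward (1 for success, 0 for outage). The function names, the power-split parameter `alpha`, and all numeric defaults are hypothetical choices made for illustration.

```python
import random


def outage_reward(g_sr, g_rd, alpha, p_total=10.0, noise=1.0, snr_min=1.0):
    """Assumed binary reward: 1.0 if both hops meet snr_min, else 0.0 (outage).

    alpha is the fraction of the total power budget given to the source;
    the selected relay gets the remaining (1 - alpha) share.
    """
    snr_first_hop = alpha * p_total * g_sr / noise
    snr_second_hop = (1.0 - alpha) * p_total * g_rd / noise
    return 1.0 if min(snr_first_hop, snr_second_hop) >= snr_min else 0.0


def train(mean_gains, alphas, episodes=5000, eps=0.1, lr=0.05, seed=0):
    """Two-level epsilon-greedy learner (illustrative simplification).

    mean_gains[k] = (mean source-relay gain, mean relay-destination gain).
    The high level keeps one value per relay; the low level keeps one
    value per (relay, power split) pair.
    """
    rng = random.Random(seed)
    n_relays, n_splits = len(mean_gains), len(alphas)
    q_relay = [0.0] * n_relays
    q_power = [[0.0] * n_splits for _ in range(n_relays)]

    for _ in range(episodes):
        # High level: choose a relay.
        if rng.random() < eps:
            k = rng.randrange(n_relays)
        else:
            k = max(range(n_relays), key=lambda i: q_relay[i])
        # Low level: choose a power split for the chosen relay.
        if rng.random() < eps:
            a = rng.randrange(n_splits)
        else:
            a = max(range(n_splits), key=lambda j: q_power[k][j])

        # The environment draws exponential channel power gains
        # (Rayleigh fading). The learner never observes them; it only
        # sees whether the transmission succeeded.
        g_sr = rng.expovariate(1.0 / mean_gains[k][0])
        g_rd = rng.expovariate(1.0 / mean_gains[k][1])
        reward = outage_reward(g_sr, g_rd, alphas[a])

        # Both levels move their estimates toward the shared reward.
        q_power[k][a] += lr * (reward - q_power[k][a])
        q_relay[k] += lr * (reward - q_relay[k])

    return q_relay, q_power


if __name__ == "__main__":
    gains = [(0.05, 0.05), (2.0, 2.0)]  # relay 1 has much stronger links
    q_relay, q_power = train(gains, alphas=[0.25, 0.5, 0.75])
    print("relay values:", q_relay)
    print("power-split values for relay 1:", q_power[1])
```

Each level searches only its own small action set rather than the joint relay-and-power space, which is the intuition behind the decomposition; the paper's HRL method trains the two levels with its own objectives and reward design, which this sketch does not reproduce.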
