Dynamic Occupancy Grid Mapping with Recurrent Neural Networks

Nov 17, 2020

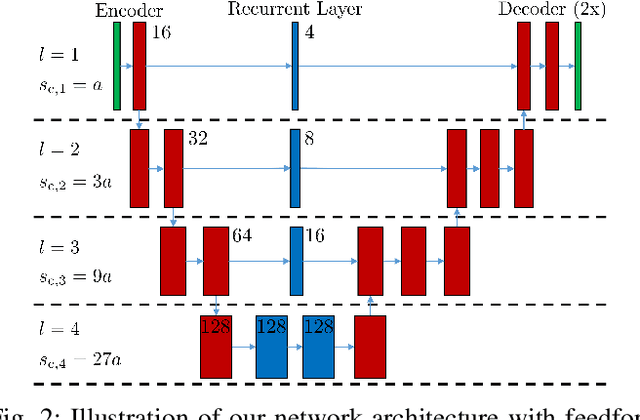

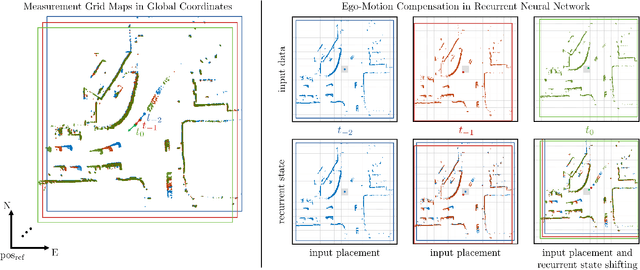

Modeling and understanding the environment is an essential task for autonomous driving. Beyond object detection, the motion of other road participants is of special interest in complex traffic scenarios. We therefore propose to use a recurrent neural network to predict a dynamic occupancy grid map, which divides the vehicle's surroundings into cells, each containing an occupancy probability and a velocity estimate. During training, our network is fed with sequences of measurement grid maps, each of which encodes the lidar measurements of a single time step. By combining convolutional and recurrent layers, our approach is capable of using both spatial and temporal information for the robust detection of the static and dynamic parts of the environment. To apply our approach to measurements from a moving ego-vehicle, we propose a method for ego-motion compensation that is applicable in neural network architectures with recurrent layers working on different resolutions. In our evaluations, we compare our approach with a state-of-the-art particle-based algorithm on a large publicly available dataset to demonstrate the improved accuracy of the velocity estimates and the more robust separation of the environment into static and dynamic areas. Additionally, we show that our proposed method for ego-motion compensation leads to comparable results in scenarios with a stationary and with a moving ego-vehicle.
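The abstract does not spell out how the ego-motion compensation works, so the following is only an illustrative sketch of the general idea and not the authors' method. It assumes a pure translation of the ego-vehicle, whole-cell shifts, and zero-filling of cells that enter the grid; the function names `shift_grid` and `compensate_hidden_state`, the `downsample` parameter, and the axis convention are all hypothetical. The point it tries to show is that a recurrent state kept at a coarser resolution has larger cells, so the same metric displacement corresponds to fewer cells.

```python
import numpy as np


def shift_grid(state, shift_rows, shift_cols, fill=0.0):
    """Shift a (C, H, W) array by whole cells; vacated cells are set to `fill`."""
    out = np.full_like(state, fill)
    _, h, w = state.shape
    if abs(shift_rows) >= h or abs(shift_cols) >= w:
        return out  # everything moved out of the grid
    src_r = slice(max(0, -shift_rows), min(h, h - shift_rows))
    dst_r = slice(max(0, shift_rows), min(h, h + shift_rows))
    src_c = slice(max(0, -shift_cols), min(w, w - shift_cols))
    dst_c = slice(max(0, shift_cols), min(w, w + shift_cols))
    out[:, dst_r, dst_c] = state[:, src_r, src_c]
    return out


def compensate_hidden_state(state, ego_dxy_m, base_cell_size_m, downsample):
    """Move a recurrent state so static content stays aligned with the ego frame.

    Assumptions (not taken from the paper): translation only, the first grid
    axis is aligned with the first displacement component, and shifts are
    rounded to whole cells of the layer's own resolution.
    """
    cell = base_cell_size_m * downsample  # cell size at this layer's resolution
    # In the ego frame, the static world moves opposite to the ego displacement.
    d_rows = int(round(-ego_dxy_m[0] / cell))
    d_cols = int(round(-ego_dxy_m[1] / cell))
    return shift_grid(state, d_rows, d_cols)


# A single occupied cell, with an ego displacement of 0.4 m along the first axis.
state = np.zeros((1, 4, 4))
state[0, 2, 2] = 1.0
fine = compensate_hidden_state(state, (0.4, 0.0), base_cell_size_m=0.2, downsample=1)
coarse = compensate_hidden_state(state, (0.4, 0.0), base_cell_size_m=0.2, downsample=2)
```

In this sketch the same 0.4 m displacement moves the content by two cells in the full-resolution state and by one cell in the state downsampled by a factor of two. Rounding to whole cells leaves a sub-cell residual, and rotation is ignored entirely; a practical implementation would have to handle both.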
