DropSample: A New Training Method to Enhance Deep Convolutional Neural Networks for Large-Scale Unconstrained Handwritten Chinese Character Recognition

Paper and Code

May 20, 2015

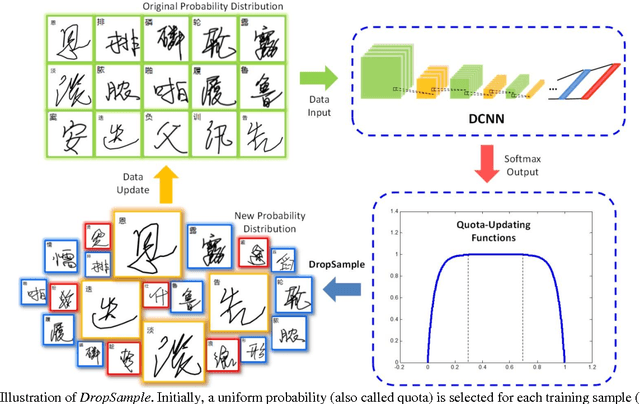

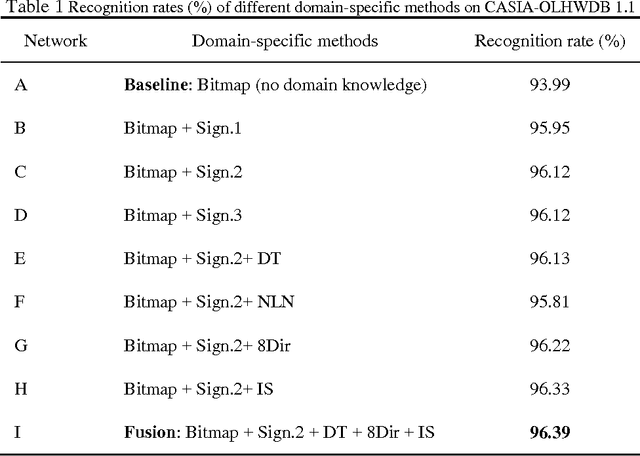

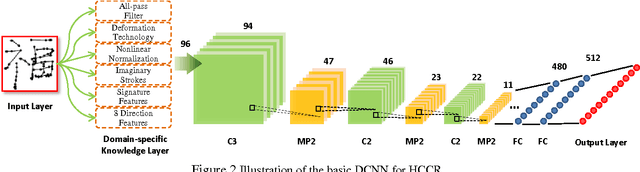

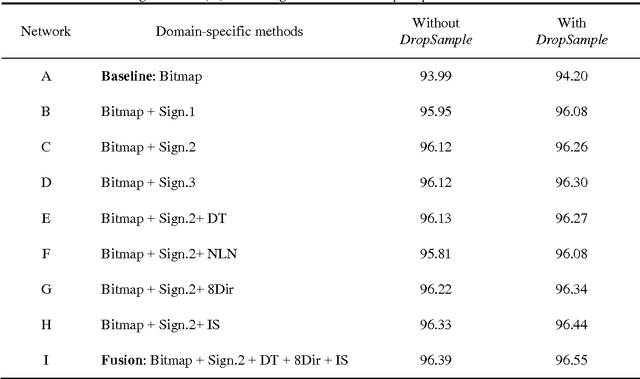

Inspired by the theory of Leitner's learning box from the field of psychology, we propose DropSample, a new method for training deep convolutional neural networks (DCNNs), and apply it to large-scale online handwritten Chinese character recognition (HCCR). According to the principle of DropSample, each training sample is associated with a quota function that is dynamically adjusted on the basis of the classification confidence given by the DCNN softmax output. After a learning iteration, samples with low confidence have a higher probability of being selected as training data in the next iteration; in contrast, well-trained and well-recognized samples with very high confidence have a lower probability of being involved in the next training iteration and can be gradually eliminated. As a result, the learning process becomes more efficient as it progresses. Furthermore, we investigate the use of domain-specific knowledge to enhance the performance of the DCNN by adding a domain knowledge layer before the traditional CNN. By adopting DropSample together with different types of domain-specific knowledge, the accuracy of HCCR can be improved efficiently. Experiments on the CASIA-OLHWDB 1.0, CASIA-OLHWDB 1.1, and ICDAR 2013 online HCCR competition datasets yield outstanding recognition rates of 97.33%, 97.06%, and 97.51%, respectively, all of which are significantly better than the previous best results reported in the literature.
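The quota mechanism described above can be illustrated with a minimal sketch. The abstract does not give the actual quota function, so everything specific here is an assumption made for illustration: the names `update_quotas` and `sample_batch`, the confidence threshold `high`, the multiplicative `decay`, and the elimination `floor` are all hypothetical and are not taken from the paper. The sketch only shows the general idea: confidently recognized samples lose quota and eventually drop out, while low-confidence samples keep a high selection probability.

```python
import numpy as np


def update_quotas(quotas, confidence, high=0.99, decay=0.5, floor=1e-3):
    """Adjust per-sample quotas from softmax confidence (illustrative rule only).

    quotas:     current quota of each training sample, values in [0, 1]
    confidence: softmax probability the network assigns to the true class
    high, decay, floor: hypothetical hyperparameters, not from the paper
    """
    quotas = np.asarray(quotas, dtype=float).copy()
    confidence = np.asarray(confidence, dtype=float)

    confident = confidence >= high
    # Well-recognized samples: shrink their quota each time they are seen.
    quotas[confident] *= decay
    # Other samples: raise the quota in proportion to the remaining error.
    quotas[~confident] = np.minimum(
        1.0, quotas[~confident] + (1.0 - confidence[~confident])
    )
    # Samples whose quota falls below the floor are eliminated.
    quotas[quotas < floor] = 0.0
    return quotas


def sample_batch(quotas, batch_size, rng):
    """Draw sample indices with probability proportional to their quota."""
    quotas = np.asarray(quotas, dtype=float)
    total = quotas.sum()
    if total <= 0.0:
        raise ValueError("all samples have been eliminated")
    return rng.choice(len(quotas), size=batch_size, replace=True, p=quotas / total)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    quotas = np.ones(4)
    # Hypothetical confidences: samples 0 and 2 are already well recognized.
    confidence = np.array([0.999, 0.20, 0.995, 0.60])
    for _ in range(3):
        quotas = update_quotas(quotas, confidence)
    print(quotas)
    print(sample_batch(quotas, batch_size=8, rng=rng))
```

In a real training loop the confidences would be recomputed from the network's softmax output after each iteration, so a sample that becomes hard again would regain quota under this rule.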
