Does Thermal Really Always Matter for RGB-T Salient Object Detection?


Oct 09, 2022

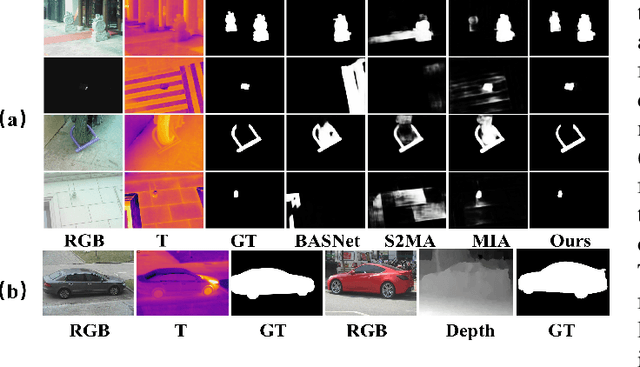

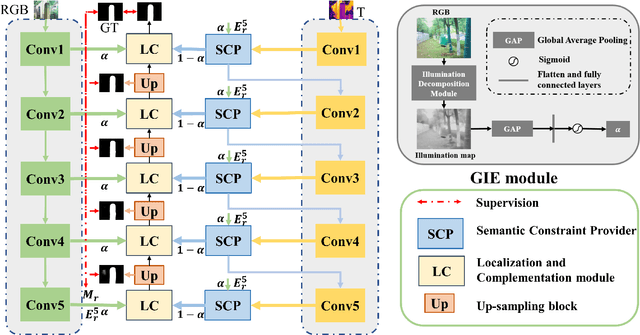

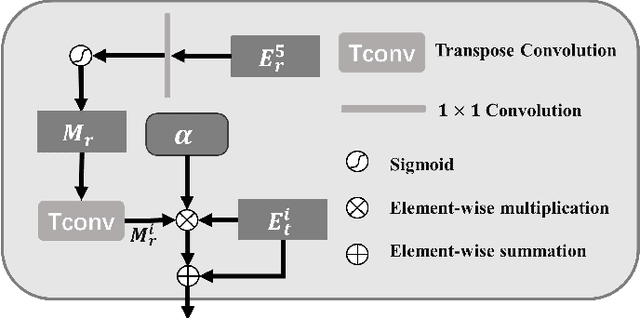

In recent years, RGB-T salient object detection (SOD) has attracted sustained attention, because introducing thermal images makes it possible to identify salient objects in challenging environments such as low light. However, most existing RGB-T SOD models focus on how to perform cross-modality feature fusion, while ignoring whether the thermal image really always matters in the SOD task. Starting from the definition and nature of this task, this paper reconsiders the role of the thermal modality and proposes a network named TNet to solve the RGB-T SOD task. We introduce a global illumination estimation module that predicts a global illuminance score for the image, which is used to regulate the roles played by the two modalities. In addition, considering the role of the thermal modality, we set up different cross-modality interaction mechanisms in the encoding phase and the decoding phase. On the one hand, we introduce a semantic constraint provider in the encoding phase to enrich the semantics of thermal images, which makes the thermal modality more suitable for the SOD task. On the other hand, we introduce a two-stage localization and complementation module in the decoding phase to transfer object localization cues and internal integrity cues from the thermal features to the RGB modality. Extensive experiments on three datasets show that the proposed TNet achieves competitive performance compared with 20 state-of-the-art methods.
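The idea of using a global illuminance score to regulate the two modalities can be illustrated with a small sketch. This is not TNet's module: the abstract does not say how the score is computed or applied, and the real module is presumably learned. Here the score is assumed to be the mean luma of the RGB image (using the standard BT.601 weights), and the regulation is assumed to be a simple convex combination of per-channel features. The names `illumination_score` and `gate_features` are hypothetical.

```python
def illumination_score(rgb_pixels):
    """Hand-crafted stand-in for a learned illuminance score (assumption).

    rgb_pixels: iterable of (r, g, b) tuples with values in [0, 255].
    Returns the mean BT.601 luma, scaled to [0, 1].
    """
    pixels = list(rgb_pixels)
    if not pixels:
        raise ValueError("rgb_pixels must not be empty")
    total = 0.0
    for r, g, b in pixels:
        total += 0.299 * r + 0.587 * g + 0.114 * b
    return total / (255.0 * len(pixels))


def gate_features(rgb_feat, thermal_feat, score):
    """Blend two equally sized feature vectors with the illuminance score.

    Assumed rule: a bright scene (score near 1) relies on RGB features,
    a dark scene (score near 0) relies on thermal features.
    """
    if len(rgb_feat) != len(thermal_feat):
        raise ValueError("feature vectors must have the same length")
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    return [score * f_rgb + (1.0 - score) * f_t
            for f_rgb, f_t in zip(rgb_feat, thermal_feat)]


# Usage: a dark image pushes the blend toward the thermal features.
dark_image = [(10, 10, 10), (20, 15, 5)]
score = illumination_score(dark_image)
fused = gate_features([0.8, 0.1], [0.2, 0.9], score)
```

A scalar gate is the simplest possible choice; a learned module could instead produce spatially varying or per-channel weights.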
