Diverse Teacher-Students for Deep Safe Semi-Supervised Learning under Class Mismatch

Paper and Code

May 25, 2024

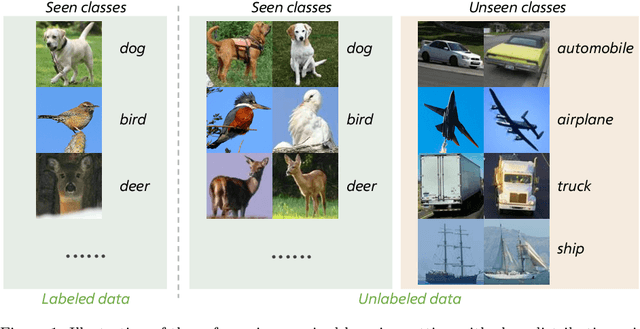

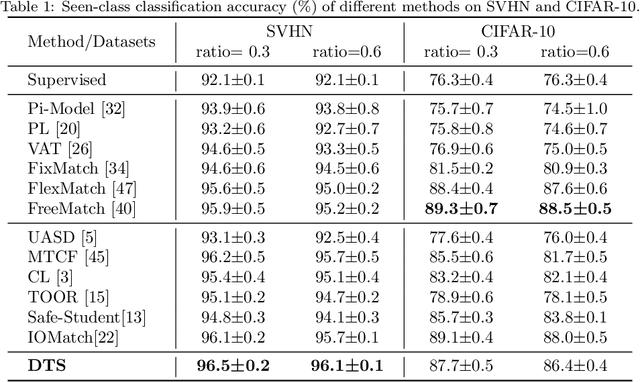

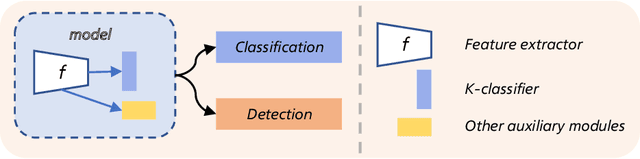

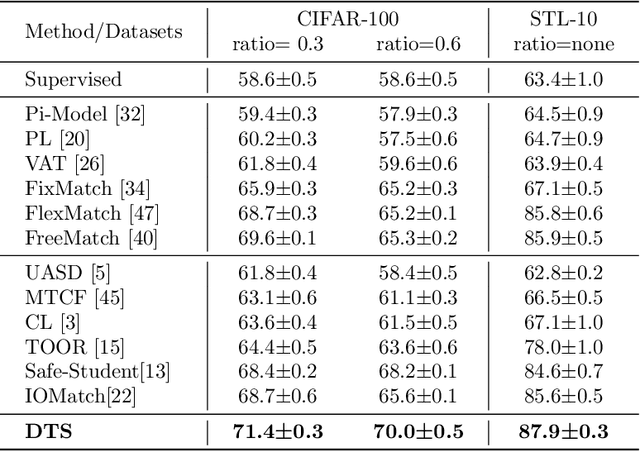

Semi-supervised learning can significantly boost model performance by leveraging unlabeled data, particularly when labeled data is scarce. However, real-world unlabeled data often contain unseen-class samples, which can hinder the classification of seen classes. To address this issue, mainstream safe SSL methods suggest detecting and discarding unseen-class samples from unlabeled data. Nevertheless, these methods typically employ a single-model strategy to simultaneously tackle both the classification of seen classes and the detection of unseen classes. Our research indicates that such an approach may lead to conflicts during training, resulting in suboptimal model optimization. Inspired by this, we introduce a novel framework named Diverse Teacher-Students (\textbf{DTS}), which uniquely utilizes dual teacher-student models to individually and effectively handle these two tasks. DTS employs a novel uncertainty score to softly separate unseen-class and seen-class data from the unlabeled set, and intelligently creates an additional ($K$+1)-th class supervisory signal for training. By training both teacher-student models with all unlabeled samples, DTS can enhance the classification of seen classes while simultaneously improving the detection of unseen classes. Comprehensive experiments demonstrate that DTS surpasses baseline methods across a variety of datasets and configurations. Our code and models can be publicly accessible on the link https://github.com/Zhanlo/DTS.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge