Distributed Reinforcement Learning for Robot Teams: A Review

Paper and Code

Apr 07, 2022

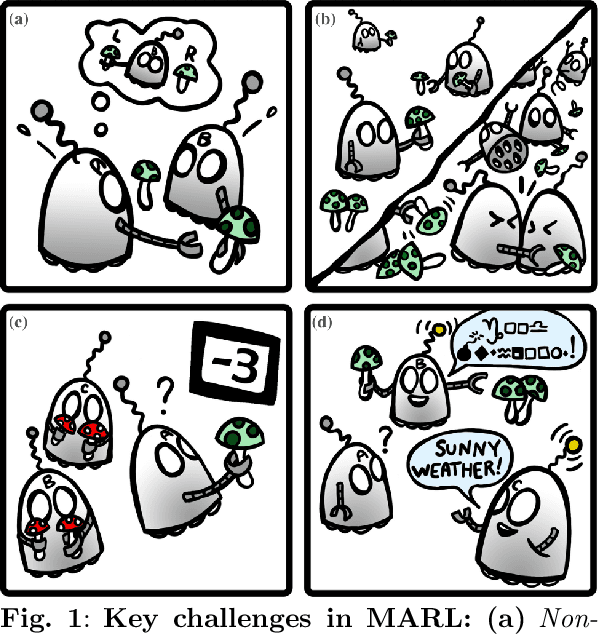

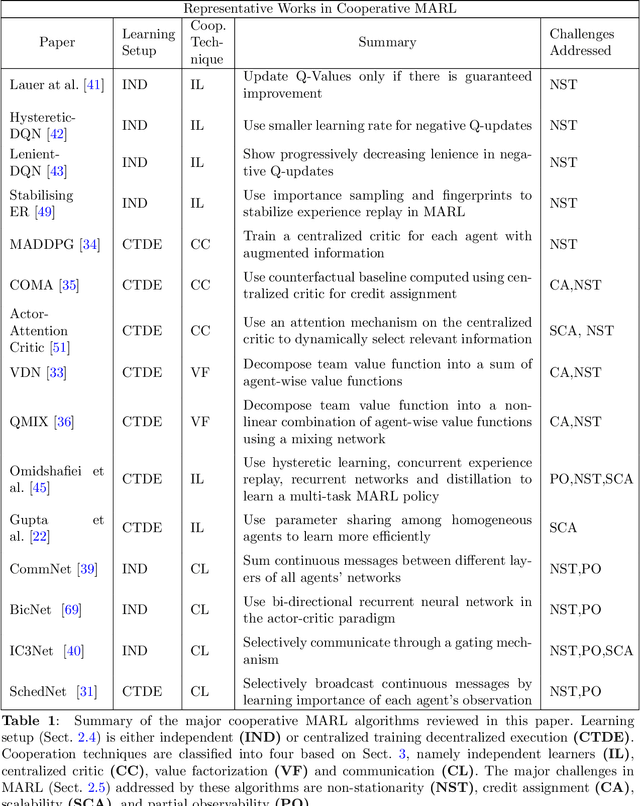

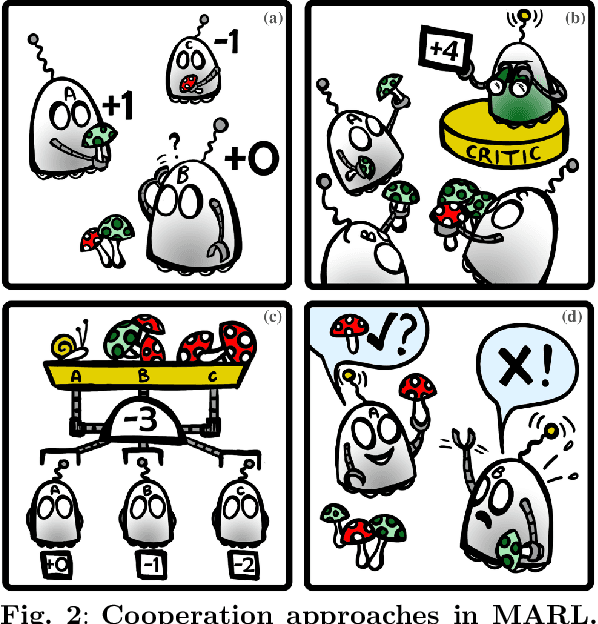

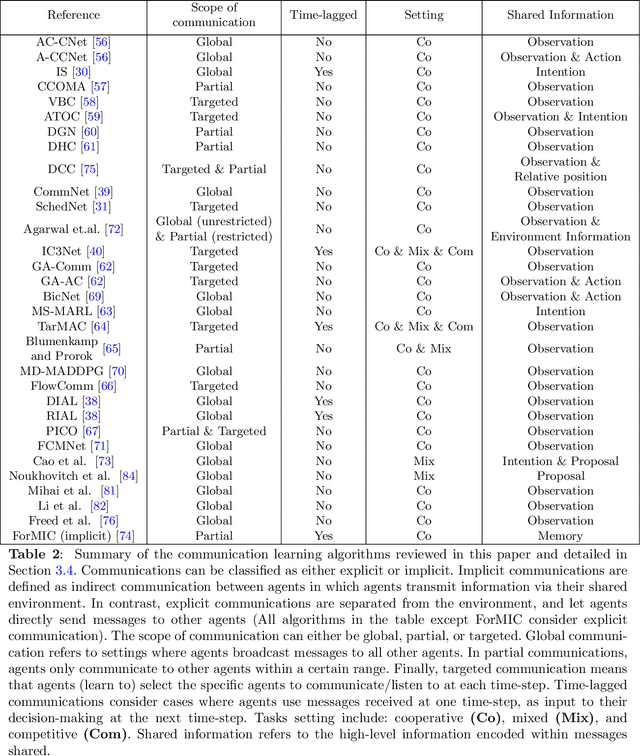

Purpose of review: Recent advances in sensing, actuation, and computation have opened the door to multi-robot systems consisting of hundreds/thousands of robots, with promising applications to automated manufacturing, disaster relief, harvesting, last-mile delivery, port/airport operations, or search and rescue. The community has leveraged model-free multi-agent reinforcement learning (MARL) to devise efficient, scalable controllers for multi-robot systems (MRS). This review aims to provide an analysis of the state-of-the-art in distributed MARL for multi-robot cooperation. Recent findings: Decentralized MRS face fundamental challenges, such as non-stationarity and partial observability. Building upon the "centralized training, decentralized execution" paradigm, recent MARL approaches include independent learning, centralized critic, value decomposition, and communication learning approaches. Cooperative behaviors are demonstrated through AI benchmarks and fundamental real-world robotic capabilities such as multi-robot motion/path planning. Summary: This survey reports the challenges surrounding decentralized model-free MARL for multi-robot cooperation and existing classes of approaches. We present benchmarks and robotic applications along with a discussion on current open avenues for research.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge