Distant Supervision for Relation Extraction with Linear Attenuation Simulation and Non-IID Relevance Embedding


Dec 22, 2018

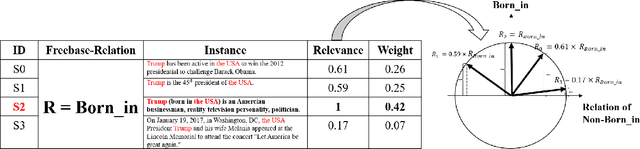

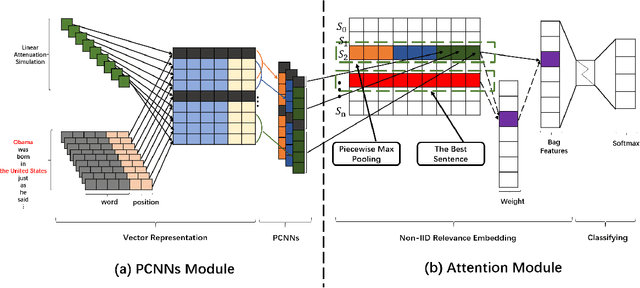

Distant supervision for relation extraction reduces labeling costs and is widely used to find novel relational facts in large corpora; the task can be framed as a multi-instance multi-label problem. However, existing distant supervision methods struggle to select the important words in a sentence and to extract the valid sentences in a bag. To address these problems, we propose a novel approach with two components. First, a linear attenuation simulation reflects the importance of each word in a sentence according to its distance from the entities. Second, a non-independent and identically distributed (non-IID) relevance embedding captures the relevance among sentences in a bag. The method not only captures the complex information that words carry about hidden relations, but also expresses the mutual information of instances in the bag. Extensive experiments on a benchmark dataset validate the effectiveness of the proposed method.
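
The abstract does not give the attenuation formula, so the following is only a minimal sketch of one way distance-based linear weighting could look. The function name `linear_attenuation_weights`, the `floor` parameter, and the choice to measure distance to the nearest entity are all assumptions made for illustration, not the paper's definition.

```python
def linear_attenuation_weights(num_tokens, head_idx, tail_idx, floor=0.1):
    """Hypothetical word weights that decay linearly with distance
    from the nearest entity mention (an assumption, not the paper's formula).

    Entity tokens get weight 1.0; a token at the maximum possible
    distance (num_tokens - 1) would get weight `floor`.
    """
    if num_tokens <= 0:
        raise ValueError("num_tokens must be positive")
    # Largest distance any token could have from an entity in this sentence.
    max_dist = max(num_tokens - 1, 1)
    weights = []
    for i in range(num_tokens):
        # Distance to whichever entity (head or tail) is closer.
        dist = min(abs(i - head_idx), abs(i - tail_idx))
        weights.append(1.0 - (1.0 - floor) * dist / max_dist)
    return weights


# Illustrative sentence with the head entity at index 0 and the tail at index 4.
tokens = ["Obama", "was", "born", "in", "Hawaii"]
weights = linear_attenuation_weights(len(tokens), head_idx=0, tail_idx=4)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.3f}")
```

In a model, such weights would typically scale the word embeddings before they enter the sentence encoder, so that words near the entity pair contribute more to the sentence representation.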
