Dissociable neural representations of adversarially perturbed images in deep neural networks and the human brain

Dec 22, 2018

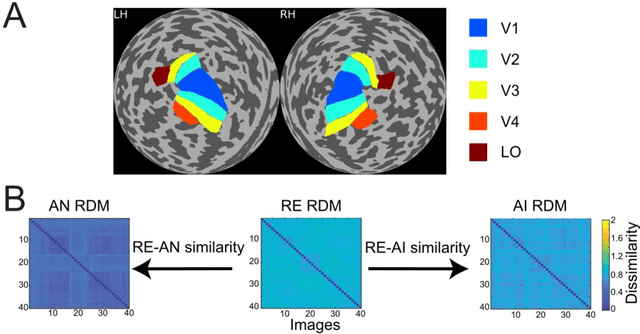

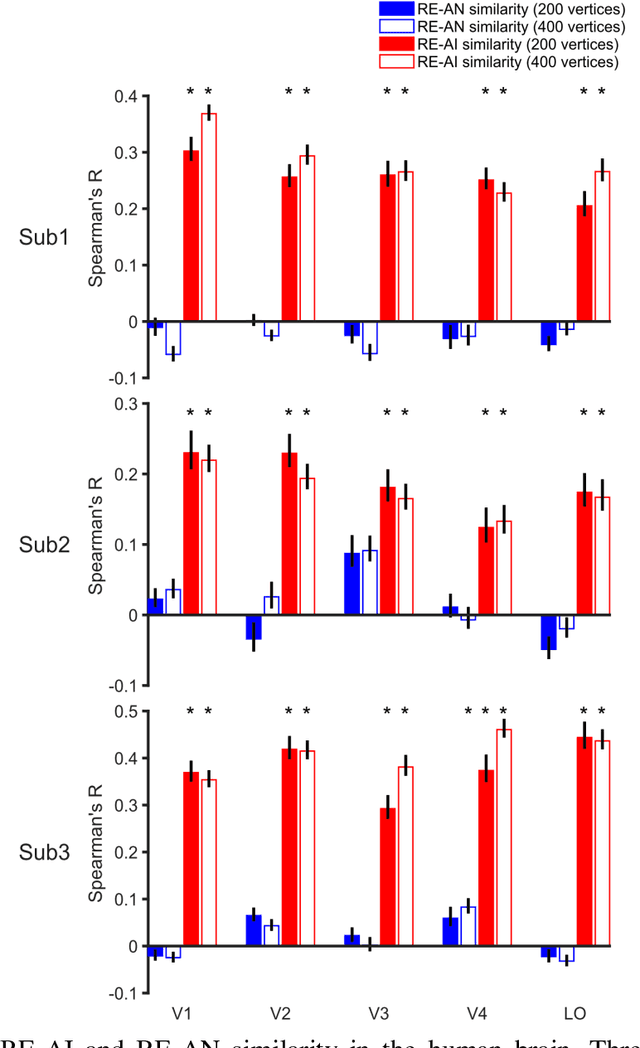

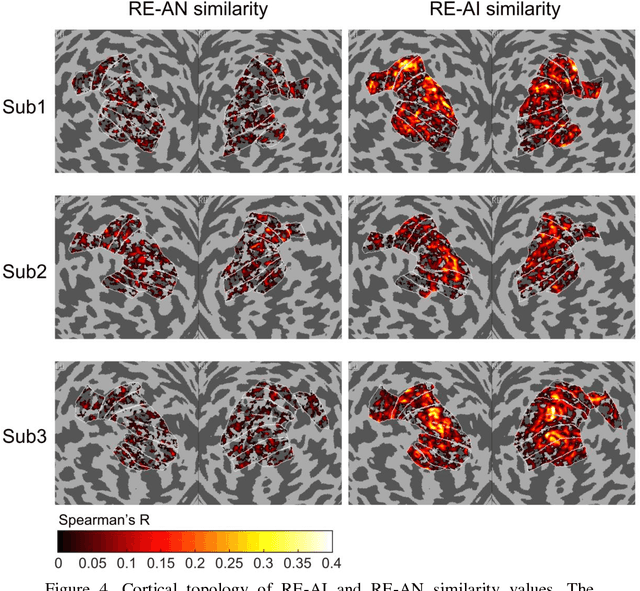

Despite the remarkable similarities between deep neural networks (DNNs) and the human brain shown in previous studies, DNNs still fall behind humans in many visual tasks, which suggests that considerable differences remain between the two systems. To probe these dissimilarities, we leverage adversarial noise (AN) and adversarial interference (AI) images, which yield distinct recognition performance in a prototypical DNN (AlexNet) and in human vision. We thoroughly compare the activity evoked by regular (RE) and adversarial images in both systems. We find that the representational similarity between RE and adversarial images in the human brain resembles their perceptual similarity. However, this representation-perception association is disrupted in the DNN. In particular, the representational similarity between RE and AN images idiosyncratically increases from low- to high-level layers. Furthermore, forward encoding modeling reveals that the DNN-brain hierarchical correspondence proposed in previous studies holds only when the two systems process RE and AI images, not AN images. These results might be due to the deterministic modeling approach of current DNNs. Taken together, our results provide a complementary perspective on the comparison between DNNs and the human brain, and highlight the need to characterize their differences to further bridge artificial and human intelligence research.
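To make "representational similarity between RE and adversarial images" concrete, here is a minimal sketch of one common way to measure it: the Pearson correlation between the response pattern to each regular image and the pattern to its adversarial counterpart, averaged over image pairs. This is an assumption for illustration, not necessarily the exact metric used in the paper. The function name `pattern_similarity` and the random data are hypothetical; "units" can stand for the channels of a DNN layer or for fMRI voxels in a brain region.

```python
import numpy as np


def pattern_similarity(resp_a: np.ndarray, resp_b: np.ndarray) -> float:
    """Mean Pearson correlation between matched response patterns (hypothetical helper).

    resp_a, resp_b: arrays of shape (n_images, n_units). Row i of resp_a is the
    response to the i-th regular (RE) image, and row i of resp_b is the response
    to its adversarial counterpart (e.g., AN or AI).
    """
    if resp_a.shape != resp_b.shape:
        raise ValueError("response arrays must have the same shape")
    # z-score each pattern across units (population std, ddof=0), so the mean of
    # the element-wise product of two z-scored rows equals their Pearson r.
    za = (resp_a - resp_a.mean(axis=1, keepdims=True)) / resp_a.std(axis=1, keepdims=True)
    zb = (resp_b - resp_b.mean(axis=1, keepdims=True)) / resp_b.std(axis=1, keepdims=True)
    r_per_image = (za * zb).mean(axis=1)
    # Average the per-image correlations into a single similarity score.
    return float(r_per_image.mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_images, n_units = 50, 200
    # Synthetic stand-ins for RE and adversarial responses in one layer or region.
    re_resp = rng.standard_normal((n_images, n_units))
    adv_resp = re_resp + rng.standard_normal((n_images, n_units))
    print("RE vs. adversarial similarity:", pattern_similarity(re_resp, adv_resp))
```

Repeating this computation layer by layer in the DNN, or region by region in the brain, gives a similarity profile along each processing hierarchy, which is the kind of profile the abstract compares across the two systems.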
