Discovering Intrinsic Reward with Contrastive Random Walk

Paper and Code

Apr 23, 2022

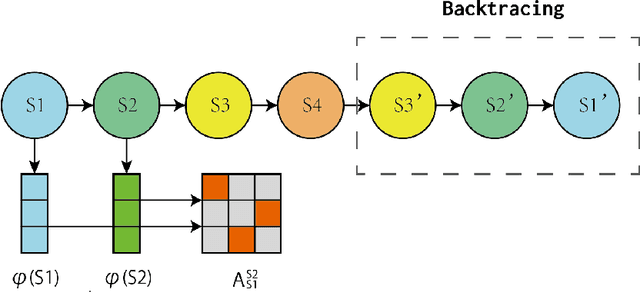

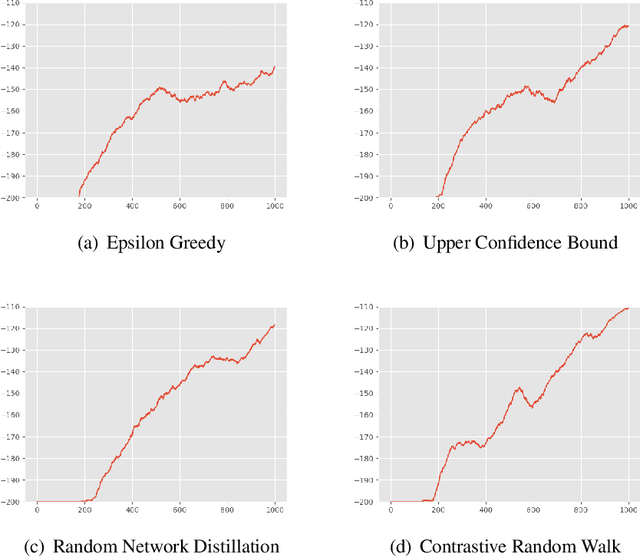

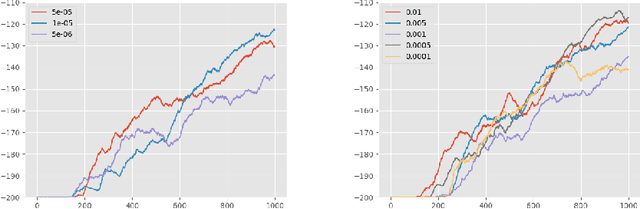

The aim of this paper is to demonstrate the efficacy of using Contrastive Random Walk as a curiosity method to achieve faster convergence to the optimal policy. Contrastive Random Walk defines the transition matrix of a random walk with the help of neural networks and learns a meaningful state representation by training the walk to form a closed loop. The loss of Contrastive Random Walk serves as an intrinsic reward and is added to the environment reward. Our method works well in non-tabular sparse-reward scenarios, in the sense that it achieves the highest reward among the compared methods within the same number of iterations. Contrastive Random Walk is also more robust: its performance changes little across different random initializations of the environment. We also find that adaptive restart and an appropriate temperature are crucial to the performance of Contrastive Random Walk.
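To make the mechanism concrete, the following is a minimal sketch of how a closed-loop random-walk loss could be turned into an intrinsic reward. It is not the paper's implementation. It assumes that each time step provides a small set of state embeddings (which a neural network encoder would produce in practice), that the transition matrix between consecutive steps is a row-wise softmax of dot-product similarities divided by a temperature, and that the walk runs forward and then back to its starting step. The names `crw_loss` and `shaped_reward` and the weighting coefficient `beta` are hypothetical.

```python
import math


def softmax_rows(matrix, temperature):
    """Row-wise softmax of matrix / temperature (numerically stabilized)."""
    out = []
    for row in matrix:
        scaled = [v / temperature for v in row]
        peak = max(scaled)
        exps = [math.exp(v - peak) for v in scaled]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def transition(src, dst, temperature):
    """Transition matrix from nodes in `src` to nodes in `dst`.

    Assumption: similarity is a plain dot product between embeddings.
    """
    sims = [[sum(a * b for a, b in zip(u, v)) for v in dst] for u in src]
    return softmax_rows(sims, temperature)


def matmul(a, b):
    """Plain matrix product of two lists of lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def crw_loss(frames, temperature=0.1):
    """Closed-loop walk loss.

    frames: list of T node sets, each a list of N embedding vectors.
    The walk visits frames 0..T-1 and then returns to frame 0; the loss is
    the mean negative log-probability that a walker ends where it started.
    """
    path = frames + frames[-2::-1]  # forward pass, then back to the start
    n = len(frames[0])
    walk = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for src, dst in zip(path[:-1], path[1:]):
        walk = matmul(walk, transition(src, dst, temperature))
    return -sum(math.log(walk[i][i] + 1e-12) for i in range(n)) / n


def shaped_reward(env_reward, frames, beta=0.1, temperature=0.1):
    """Environment reward plus the walk loss as an intrinsic bonus.

    `beta` is a hypothetical weighting coefficient, not taken from the paper.
    """
    return env_reward + beta * crw_loss(frames, temperature)
```

In this sketch, states whose embeddings are easy to tell apart yield a loss close to zero and therefore almost no bonus, while states the representation cannot yet distinguish yield a larger loss and a larger bonus. A lower temperature sharpens the transition probabilities, which gives one intuition for why the temperature setting matters.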
