Direct Optimization through $\arg \max$ for Discrete Variational Auto-Encoder

Oct 11, 2018

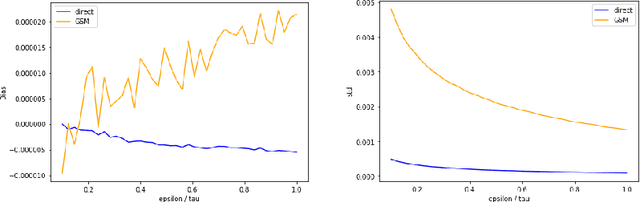

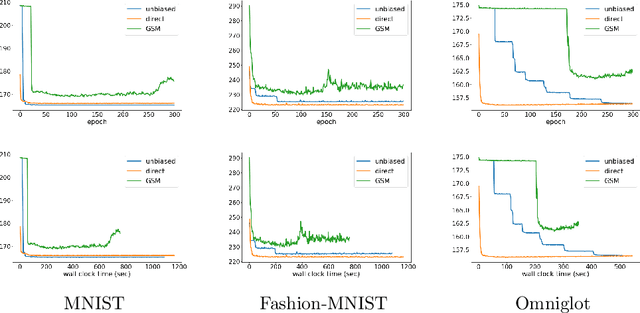

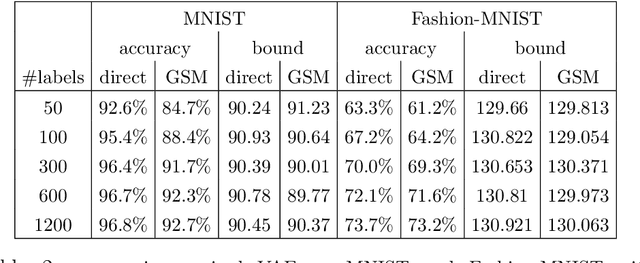

Reparameterization of variational auto-encoders with continuous latent spaces is an effective method for reducing the variance of their gradient estimates. However, applying the same approach to discrete latent variables is problematic, because the resulting objective is non-differentiable. In this work, we present a direct optimization method that propagates gradients through a non-differentiable $\arg \max$ prediction operation. We apply this method to discrete variational auto-encoders by modeling a discrete random variable as the $\arg \max$ of the Gumbel-Max perturbation model.
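To make the Gumbel-Max perturbation model concrete, the following is a minimal NumPy sketch of the sampling step only, not the paper's gradient estimator. It assumes unnormalized log-probabilities (`logits`) and illustrates that taking the $\arg \max$ of logits perturbed by independent standard Gumbel noise yields samples from the corresponding softmax distribution. The function names `gumbel_max_sample` and `softmax`, the example logits, and the sample count are illustrative assumptions and do not come from the paper.

```python
import numpy as np


def gumbel_max_sample(logits, rng, n_samples=1):
    """Draw categorical samples as argmax(logits + Gumbel noise).

    Hypothetical helper for illustration; not taken from the paper's code.
    """
    logits = np.asarray(logits, dtype=float)
    # One independent standard Gumbel(0, 1) perturbation per category and sample.
    gumbel = rng.gumbel(loc=0.0, scale=1.0, size=(n_samples, logits.shape[0]))
    # The argmax is the non-differentiable prediction operation.
    return np.argmax(logits + gumbel, axis=1)


def softmax(logits):
    """Numerically stable softmax, used only to check the samples."""
    logits = np.asarray(logits, dtype=float)
    z = np.exp(logits - logits.max())
    return z / z.sum()


rng = np.random.default_rng(0)
logits = np.array([1.0, 0.0, -1.0])  # assumed example values

samples = gumbel_max_sample(logits, rng, n_samples=100_000)
empirical = np.bincount(samples, minlength=logits.size) / samples.size
target = softmax(logits)

print("empirical frequencies:", empirical)
print("softmax probabilities:", target)
```

The empirical frequencies should approach the softmax probabilities as the number of samples grows. The difficulty the abstract refers to is that `np.argmax` has zero gradient almost everywhere with respect to `logits`, which is why a dedicated method is needed to propagate gradients through it.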
