DIME-Net: Neural Network-Based Dynamic Intrinsic Parameter Rectification for Cameras with Optical Image Stabilization System


Mar 20, 2023

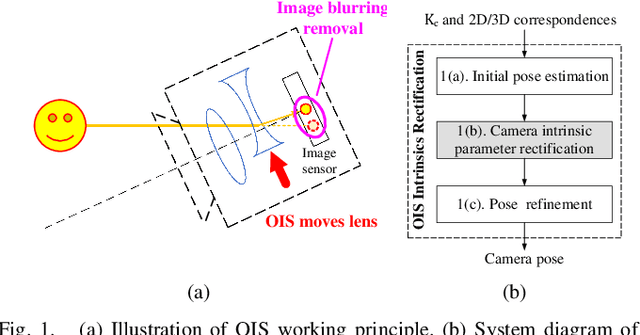

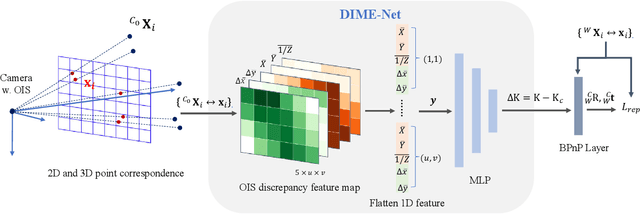

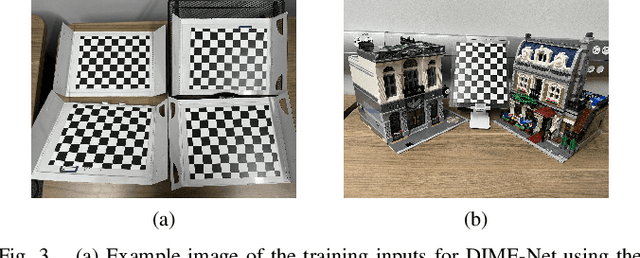

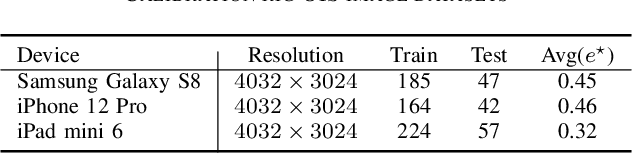

An Optical Image Stabilization (OIS) system in a mobile device reduces image blur by steering the lens to compensate for hand jitter. However, OIS dynamically changes the intrinsic camera parameters (i.e., the $\mathrm{K}$ matrix), which hinders accurate camera pose estimation and 3D reconstruction. Here we propose a novel neural network-based approach that estimates the $\mathrm{K}$ matrix in real time, so that pose estimation or scene reconstruction can be run at the camera's native resolution for the highest accuracy on mobile devices. Our network design takes the gratified projection model discrepancy feature and 3D point positions as inputs and employs a Multi-Layer Perceptron (MLP) to approximate the $f_{\mathrm{K}}$ manifold. We also design a unique training scheme for this network by introducing a Back propagated PnP (BPnP) layer so that reprojection error can be adopted as the loss function. The training process uses precise calibration patterns to capture an accurate $f_{\mathrm{K}}$ manifold, but the trained network can be used anywhere. We name the proposed Dynamic Intrinsic Manifold Estimation network DIME-Net and have implemented and tested it on three different mobile devices. In all cases, DIME-Net reduces reprojection error by at least $64\%$, indicating that our design is successful.
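To make the role of the $\mathrm{K}$ matrix in reprojection error concrete, the following is a minimal sketch in plain Python. It is not DIME-Net itself: it assumes a standard pinhole model, treats the 3D points as already expressed in the camera frame (identity pose), and models the OIS effect as a simple principal-point shift. The function names and all numeric values are hypothetical and chosen only for illustration.

```python
import math

def project(points_cam, fx, fy, cx, cy):
    """Pinhole projection of 3D points (camera frame) to pixel coordinates."""
    return [(fx * x / z + cx, fy * y / z + cy) for x, y, z in points_cam]

def rms_reprojection_error(observed, projected):
    """Root-mean-square pixel distance between observed and projected points."""
    sq = [(u - pu) ** 2 + (v - pv) ** 2
          for (u, v), (pu, pv) in zip(observed, projected)]
    return math.sqrt(sum(sq) / len(sq))

# Hypothetical 3D points in the camera frame (metres), all with z > 0.
points = [(0.1, -0.2, 2.0), (-0.3, 0.1, 1.5), (0.25, 0.3, 3.0), (0.0, 0.0, 1.0)]

# Hypothetical nominal intrinsics from a static calibration.
nominal = dict(fx=1500.0, fy=1500.0, cx=960.0, cy=540.0)

# Assumption: OIS lens motion shifts the principal point by (+3, -4) pixels.
shifted = dict(nominal, cx=963.0, cy=536.0)

# Pixels actually observed while OIS is active.
observed = project(points, **shifted)

# Error when the stale (nominal) K is used versus the rectified K.
err_stale = rms_reprojection_error(observed, project(points, **nominal))
err_rectified = rms_reprojection_error(observed, project(points, **shifted))

print(f"stale K: {err_stale:.3f} px, rectified K: {err_rectified:.3f} px")
```

Under these assumptions, using the stale intrinsics yields an error of about 5 pixels (the magnitude of the assumed shift), while the rectified intrinsics bring it to zero. A per-frame estimate of $\mathrm{K}$ is meant to close exactly this kind of gap.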
