Diffusion Variational Autoencoders


May 29, 2019

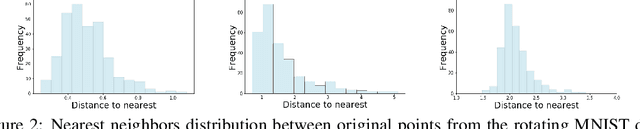

Variational autoencoders (VAEs) have become one of the most popular deep learning approaches to unsupervised learning and data generation. However, traditional VAEs suffer from the constraint that the latent space must distributionally match a simple prior (e.g. normal, uniform), independent of the initial data distribution. This leads to a number of issues around modeling manifold data, as there is no function with bounded Jacobian that maps a normal distribution to certain manifolds (e.g. the sphere). Similarly, there are few theoretical guarantees on the encoder and decoder created by the VAE. In this work, we propose a variational autoencoder that maps manifold-valued data to its diffusion map coordinates in the latent space, resamples in a neighborhood around a given point in the latent space, and learns a decoder that maps the newly resampled points back to the manifold. The framework is built on SpectralNet, and is capable of learning this data-dependent latent space without computing the eigenfunctions of the Laplacian explicitly. We prove that the diffusion variational autoencoder framework is capable of learning a locally bi-Lipschitz map between the manifold and the latent space, and that our resampling method around a point in the latent space $\psi(x)$ maps points back to the manifold around the point $x$, specifically into a neighborhood on the tangent space at the point $x$ on the manifold. We also provide empirical evidence of the benefits of using a diffusion map latent space on manifold data.
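For readers unfamiliar with diffusion map coordinates, the following is a minimal NumPy sketch of a classical diffusion map embedding on points sampled from a circle. It is not the method proposed in the paper: the abstract states that the framework avoids computing the eigenfunctions explicitly by building on SpectralNet, whereas this sketch performs the eigendecomposition directly for illustration. The function name `diffusion_map`, the parameters `epsilon` and `t`, and the median bandwidth heuristic are all assumptions made for this example.

```python
import numpy as np


def diffusion_map(X, n_components=2, epsilon=None, t=1):
    """Classical diffusion map via explicit eigendecomposition (illustrative only).

    X: (n, d) array of samples. Returns (coords, evals), where coords has
    shape (n, n_components) and evals are sorted in decreasing order.
    """
    # Pairwise squared Euclidean distances, clipped at zero for numerical safety.
    sq = np.sum(X ** 2, axis=1)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)

    # Assumed heuristic: use the median squared distance as kernel bandwidth.
    if epsilon is None:
        epsilon = np.median(D2)

    # Gaussian affinity matrix and its row sums (degrees).
    K = np.exp(-D2 / epsilon)
    d = K.sum(axis=1)

    # Symmetric normalization S = D^{-1/2} K D^{-1/2}; it has the same
    # eigenvalues as the random-walk matrix P = D^{-1} K.
    S = K / np.sqrt(np.outer(d, d))
    evals, evecs = np.linalg.eigh(S)

    # eigh returns ascending eigenvalues; reorder to descending.
    order = np.argsort(evals)[::-1]
    evals, evecs = evals[order], evecs[:, order]

    # Convert to right eigenvectors of P, then drop the trivial constant one.
    psi = evecs / np.sqrt(d)[:, None]
    coords = psi[:, 1:n_components + 1] * evals[1:n_components + 1] ** t
    return coords, evals


# Usage: evenly spaced points on the unit circle in R^2.
theta = np.linspace(0.0, 2.0 * np.pi, 200, endpoint=False)
X = np.stack([np.cos(theta), np.sin(theta)], axis=1)
coords, evals = diffusion_map(X, n_components=2)
```

On this evenly sampled circle, the two leading nontrivial coordinates again trace out a circle, which illustrates how the embedding reflects the geometry of the data rather than a fixed prior.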
