Differentially Private Optimization with Sparse Gradients

Paper and Code

Apr 16, 2024

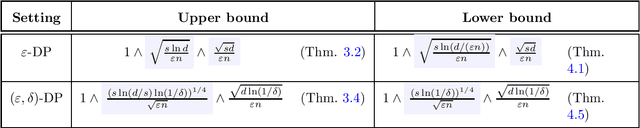

Motivated by applications of large embedding models, we study differentially private (DP) optimization problems under sparsity of individual gradients. We start with new near-optimal bounds for the classic mean estimation problem but with sparse data, improving upon existing algorithms particularly for the high-dimensional regime. Building on this, we obtain pure- and approximate-DP algorithms with almost optimal rates for stochastic convex optimization with sparse gradients; the former represents the first nearly dimension-independent rates for this problem. Finally, we study the approximation of stationary points for the empirical loss in approximate-DP optimization and obtain rates that depend on sparsity instead of dimension, modulo polylogarithmic factors.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge