Differentiable Bilevel Programming for Stackelberg Congestion Games

Sep 15, 2022

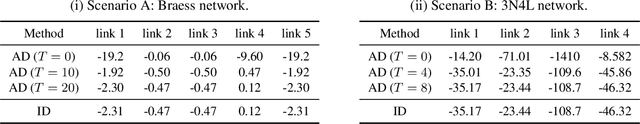

A Stackelberg congestion game (SCG) is a bilevel program in which a leader aims to maximize their own gain by anticipating and manipulating the equilibrium state at which followers settle by playing a congestion game. Large-scale SCGs are well known for their intractability and complexity. This study approaches SCGs through differentiable programming, which marries the latest developments in machine learning with conventional methodologies. The core idea centers on representing the lower-level equilibrium problem using an evolution path formed by the imitative logit dynamics. This representation enables the use of automatic differentiation over the evolution path towards equilibrium, leading to a double-loop gradient descent algorithm. We further show that the fixation on the lower-level equilibrium may be a self-imposed computational obstacle. Instead, the leader may look ahead along the followers' evolution path for only a few steps, while updating their decisions in sync with the followers through a co-evolution process. This revelation gives rise to a single-loop algorithm that is more efficient in terms of both memory consumption and computation time. Through numerical experiments that cover a wide range of benchmark problems, we find that the single-loop algorithm consistently strikes a good balance between solution quality and efficiency, outperforming not only the standard double-loop implementation but also other methods from the literature. Importantly, our results highlight both the wastefulness of "full anticipation" and the peril of "zero anticipation". If a quick-and-dirty heuristic is needed for solving a very large SCG, the proposed single-loop algorithm with a one-step look-ahead makes an ideal candidate.
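To make the single-loop idea concrete, the following is a minimal sketch and not the authors' implementation. It assumes a hypothetical two-route Pigou-style network with unit demand: route 1 costs its own flow share plus a toll set by the leader, route 2 costs a constant 1, and the leader minimizes total travel time. The `Dual` class is a small hand-written forward-mode automatic differentiation helper, and all names (`follower_step`, `lookahead_grad`, `single_loop`), the step size, and the logit rate `r` are illustrative assumptions.

```python
import math

class Dual:
    """Value plus its derivative w.r.t. the toll (forward-mode autodiff)."""
    def __init__(self, val, dot=0.0):
        self.val, self.dot = val, dot
    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(x)
    def __add__(self, o):
        o = Dual.lift(o)
        return Dual(self.val + o.val, self.dot + o.dot)
    __radd__ = __add__
    def __neg__(self):
        return Dual(-self.val, -self.dot)
    def __sub__(self, o):
        return self + (-Dual.lift(o))
    def __rsub__(self, o):
        return Dual.lift(o) + (-self)
    def __mul__(self, o):
        o = Dual.lift(o)
        return Dual(self.val * o.val, self.dot * o.val + self.val * o.dot)
    __rmul__ = __mul__
    def __truediv__(self, o):
        o = Dual.lift(o)
        return Dual(self.val / o.val,
                    (self.dot * o.val - self.val * o.dot) / o.val ** 2)

def dexp(x):
    e = math.exp(x.val)
    return Dual(e, e * x.dot)

def follower_step(p1, toll, r=1.0):
    """One imitative-logit update of the share p1 of followers on route 1."""
    p1, toll = Dual.lift(p1), Dual.lift(toll)
    c1 = p1 + toll               # assumed cost: congestion plus toll
    c2 = Dual(1.0)               # assumed cost: constant
    w1 = p1 * dexp(-r * c1)      # current share reweighted by exp(-r * cost)
    w2 = (1.0 - p1) * dexp(-r * c2)
    return w1 / (w1 + w2)

def total_time(p1):
    """Leader objective: total travel time, excluding toll payments."""
    return p1 * p1 + (1.0 - p1) * 1.0

def lookahead_grad(p1, toll, steps):
    """Derivative of total time after `steps` follower updates w.r.t. the toll,
    treating the followers' current state p1 as fixed."""
    t = Dual(toll, 1.0)          # seed: d(toll)/d(toll) = 1
    p = Dual(p1, 0.0)
    for _ in range(steps):
        p = follower_step(p, t)
    return total_time(p).dot

def single_loop(iters=2000, steps=1, lr=0.1):
    """Co-evolution: leader and followers each move once per iteration."""
    p1, toll = 0.5, 0.0
    for _ in range(iters):
        g = lookahead_grad(p1, toll, steps)
        toll = min(max(toll - lr * g, 0.0), 1.0)  # projected gradient step
        p1 = follower_step(p1, toll).val          # followers take one step
    return toll, p1

if __name__ == "__main__":
    print(single_loop(steps=1))  # one-step look-ahead
    print(single_loop(steps=0))  # zero anticipation
```

In this toy problem the system optimum can be worked out by hand: with toll `t` the interior equilibrium share is `1 - t`, total time is `(1 - t)^2 + t`, and the minimizer is `t = 0.5`. The one-step look-ahead run should approach that point, while `steps=0` gives a zero gradient and leaves the toll at its initial value, which mirrors the "zero anticipation" failure mode described in the abstract. Restarting the followers from a fixed initial state and using a large `steps` at every leader update would roughly correspond to the double-loop scheme.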
