Differentiable and Transportable Structure Learning

Jun 13, 2022

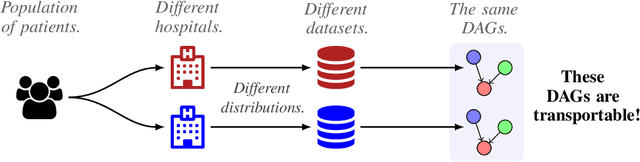

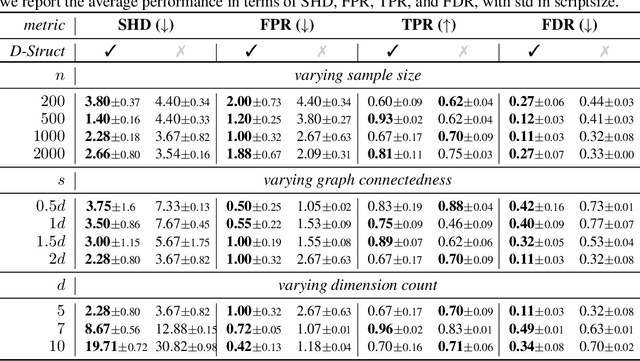

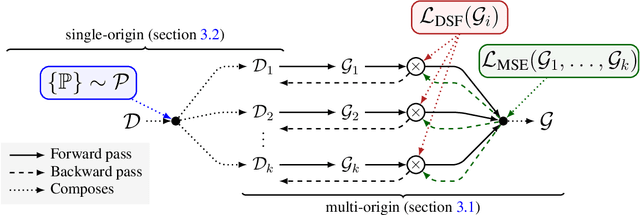

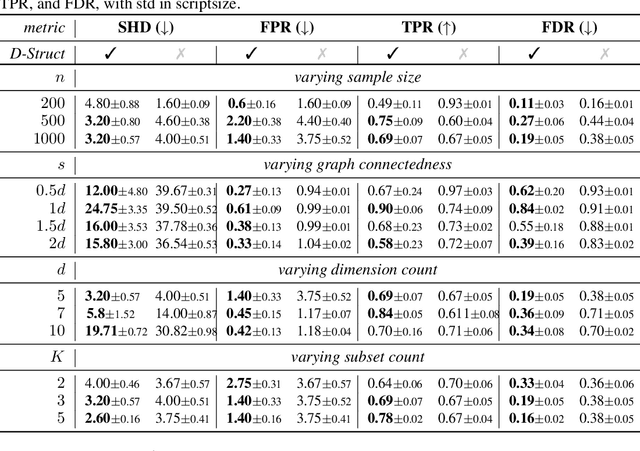

We study unsupervised structure learning, with a particular focus on directed acyclic graphical (DAG) models. The compute required to infer these structures is typically super-exponential in the number of variables, because inference requires a sweep over a combinatorially large space of candidate structures. Recent advances changed this by making it possible to search the space with a differentiable metric, drastically reducing search time. While this technique, named NOTEARS, is widely considered seminal work in DAG discovery, it gives up an important property in favour of differentiability: transportability. In our paper we introduce D-Struct, which recovers transportability in the discovered structures through a novel architecture and loss function while remaining fully differentiable. Because D-Struct remains differentiable, it can be adopted in differentiable architectures just as NOTEARS was before it. In our experiments we empirically validate D-Struct with respect to edge accuracy and structural Hamming distance.
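To make two of the ingredients named above concrete, here is a minimal sketch. It is not the D-Struct architecture or loss. It only illustrates the NOTEARS acyclicity function h(W) = tr(exp(W ∘ W)) − d, which is zero exactly when the weighted adjacency matrix W describes a DAG, and one common convention for the structural Hamming distance (SHD), in which a missing, extra, or reversed edge each counts once. The function names, the pure-Python matrices, and the truncated power series used in place of an exact matrix exponential are all assumptions made for illustration.

```python
def matmul(X, Y):
    """Multiply two square matrices given as lists of lists."""
    d = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(d)) for j in range(d)]
            for i in range(d)]


def acyclicity(W, terms=20):
    """NOTEARS-style penalty h(W) = tr(exp(W∘W)) - d.

    Uses the identity tr(exp(A)) - d = sum_{k>=1} tr(A^k) / k!,
    truncated after `terms` terms (an approximation, assumed here
    to keep the sketch dependency-free).
    """
    d = len(W)
    A = [[w * w for w in row] for row in W]  # elementwise square, W∘W
    P = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]  # A^0
    total, factorial = 0.0, 1.0
    for k in range(1, terms + 1):
        P = matmul(P, A)  # P now holds A^k
        factorial *= k
        total += sum(P[i][i] for i in range(d)) / factorial
    return total


def structural_hamming_distance(B_true, B_est):
    """Count node pairs whose edge status differs between two binary
    adjacency matrices; a reversed edge counts as a single error."""
    d = len(B_true)
    return sum(
        1
        for i in range(d)
        for j in range(i + 1, d)
        if (B_true[i][j], B_true[j][i]) != (B_est[i][j], B_est[j][i])
    )


# Hypothetical example graphs (not from the paper).
W_dag = [[0.0, 1.5, 0.0], [0.0, 0.0, -2.0], [0.0, 0.0, 0.0]]  # chain 0 -> 1 -> 2
W_cyc = [[0.0, 1.0], [1.0, 0.0]]                              # 2-cycle 0 <-> 1
h_dag = acyclicity(W_dag)  # zero for an acyclic graph
h_cyc = acyclicity(W_cyc)  # strictly positive when a cycle exists

B_true = [[0, 1, 0], [0, 0, 1], [0, 0, 0]]
B_rev = [[0, 0, 0], [1, 0, 1], [0, 0, 0]]  # edge 0 -> 1 reversed
shd = structural_hamming_distance(B_true, B_rev)
```

Because h is a smooth function of W, it can serve as a penalty or constraint in gradient-based optimisation, which is what lets methods of this kind avoid an explicit combinatorial search over graphs.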
