DICTDIS: Dictionary Constrained Disambiguation for Improved NMT


Oct 13, 2022

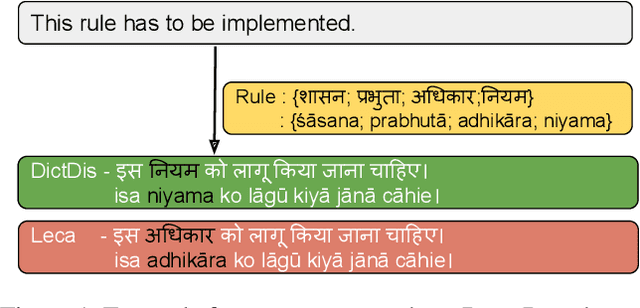

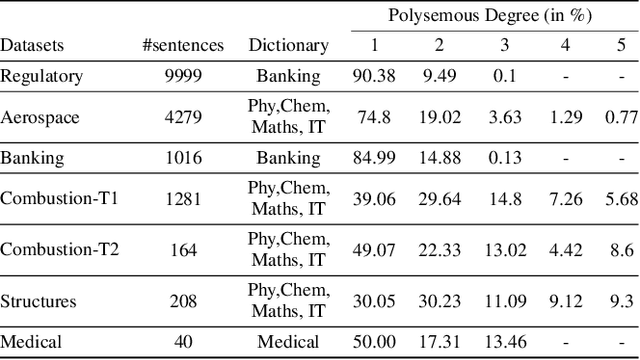

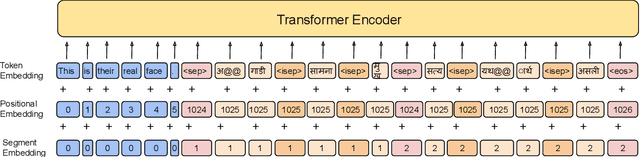

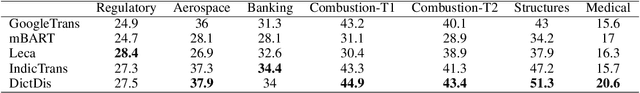

Domain-specific neural machine translation (NMT) systems (e.g., in educational applications) are socially significant, with the potential to help make information accessible to a diverse set of users in multilingual societies. It is desirable that such NMT systems be lexically constrained and draw from domain-specific dictionaries. Because words are polysemous, a dictionary may present multiple candidate translations for a source word or phrase. The onus is then on the NMT model to choose the contextually most appropriate candidate. Prior work has largely ignored this problem and focused on the single-candidate setting, where the target word or phrase is replaced by a single constraint. In this work, we present DICTDIS, a lexically constrained NMT system that disambiguates between multiple candidate translations derived from dictionaries. We achieve this by augmenting training data with multiple dictionary candidates to actively encourage disambiguation during training. We demonstrate the utility of DICTDIS via extensive experiments on English-Hindi sentences in a variety of domains, including news, finance, medicine and engineering. Compared with existing approaches for lexically constrained and unconstrained NMT, we obtain superior disambiguation performance on all domains, and fluency improves by up to 4 BLEU points in some domains.
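
To make the idea of augmenting training data with multiple dictionary candidates more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the DICTDIS implementation: the abstract does not specify the input format, so the marker tokens (`<c>`, `<sep>`, `</c>`), the function name `augment_source`, and the toy dictionary entry are all hypothetical. The sketch matches single lowercase words only and does not handle multi-word phrases.

```python
from typing import Dict, List

# Hypothetical marker tokens; the abstract does not describe the real format.
CAND_START = "<c>"
CAND_SEP = "<sep>"
CAND_END = "</c>"


def augment_source(sentence: str, dictionary: Dict[str, List[str]]) -> str:
    """Append all dictionary candidates after each matching source word.

    The model would then see every candidate translation inline and could
    learn during training to pick the one that fits the context.
    """
    out: List[str] = []
    for token in sentence.split():
        out.append(token)
        # Look up the lowercased token; unmatched words pass through unchanged.
        candidates = dictionary.get(token.lower())
        if candidates:
            joined = f" {CAND_SEP} ".join(candidates)
            out.append(f"{CAND_START} {joined} {CAND_END}")
    return " ".join(out)


# Toy English-Hindi entry (assumed for illustration): "bank" as a financial
# institution versus "bank" as the edge of a river.
toy_dictionary = {"bank": ["बैंक", "किनारा"]}

augmented = augment_source("She sat on the river bank", toy_dictionary)
print(augmented)
```

In this toy setup the augmented source carries both candidates for "bank", while the reference translation would contain only the contextually correct one, which is what would push a model toward disambiguation.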
