Dialog+ in Broadcasting: First Field Tests Using Deep-Learning-Based Dialogue Enhancement


Dec 17, 2021

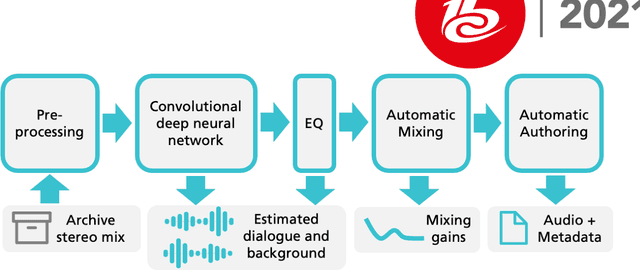

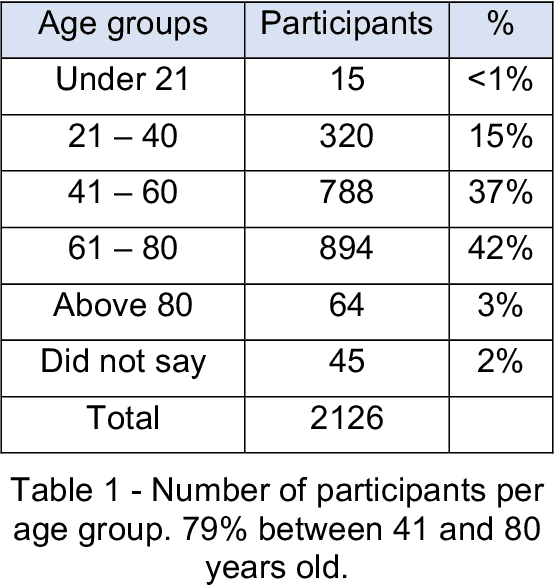

Difficulties in following speech due to loud background sounds are common in broadcasting. Object-based audio, e.g., MPEG-H Audio, solves this problem by providing a user-adjustable speech level. While object-based audio is gaining momentum, transitioning to it requires time and effort. In addition, a large amount of content exists that was produced and archived outside object-based workflows. To address this, Fraunhofer IIS has developed a deep-learning solution called Dialog+, which enables speech level personalization even for content where only the final audio tracks are available. This paper reports on public field tests evaluating Dialog+, conducted together with Westdeutscher Rundfunk (WDR) and Bayerischer Rundfunk (BR), starting in September 2020. To our knowledge, these are the first large-scale tests of this kind. As part of one of these tests, a survey with more than 2,000 participants showed that 90% of people over 60 years old have problems understanding speech on TV "often" or "very often". Overall, 83% of the participants liked the possibility of switching to Dialog+, including those who do not normally struggle with speech intelligibility. Dialog+ offers a clear benefit for the audience, filling the gap between object-based broadcasting and traditionally produced material.
