Diagnosing AI Explanation Methods with Folk Concepts of Behavior


Jan 27, 2022

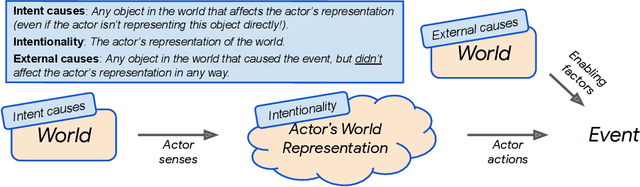

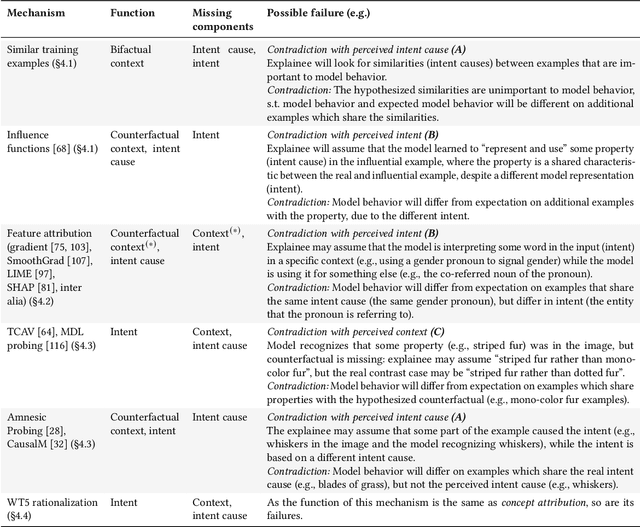

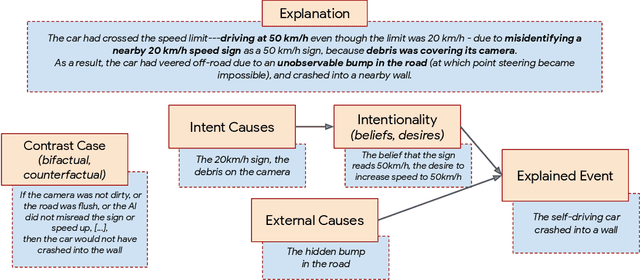

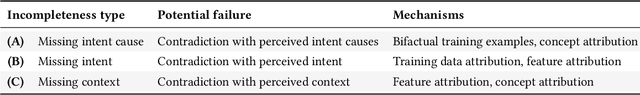

When an AI system's behavior is explained to a human, how does the human explainee comprehend the communicated information, and does that comprehension match what the explanation attempted to communicate? When can we say that an explanation is explaining something? We aim to provide an answer by leveraging the theory-of-mind literature on the folk concepts that humans use to understand behavior. We establish a framework of social attribution by the human explainee, which describes the function of explanations: the concrete information that humans comprehend from them. Specifically, effective explanations should be coherent (communicating information that generalizes to other contrast cases), complete (communicating an explicit contrast case, objective causes, and subjective causes), and interactive (surfacing and resolving contradictions to the generalization property through iterations). We demonstrate that many XAI mechanisms can be mapped to folk concepts of behavior. This mapping allows us to uncover the failure modes that prevent current methods from explaining effectively, and to identify what is necessary to enable coherent explanations.
