DGNN-YOLO: Interpretable Dynamic Graph Neural Networks with YOLO11 for Small Object Detection and Tracking in Traffic Surveillance


Dec 11, 2024

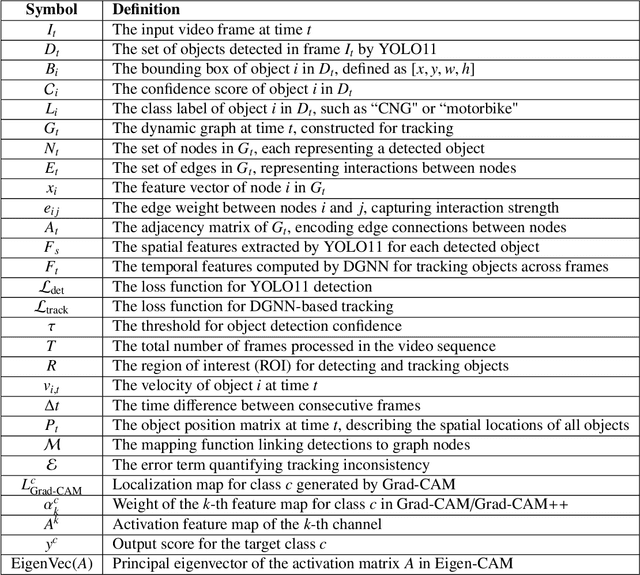

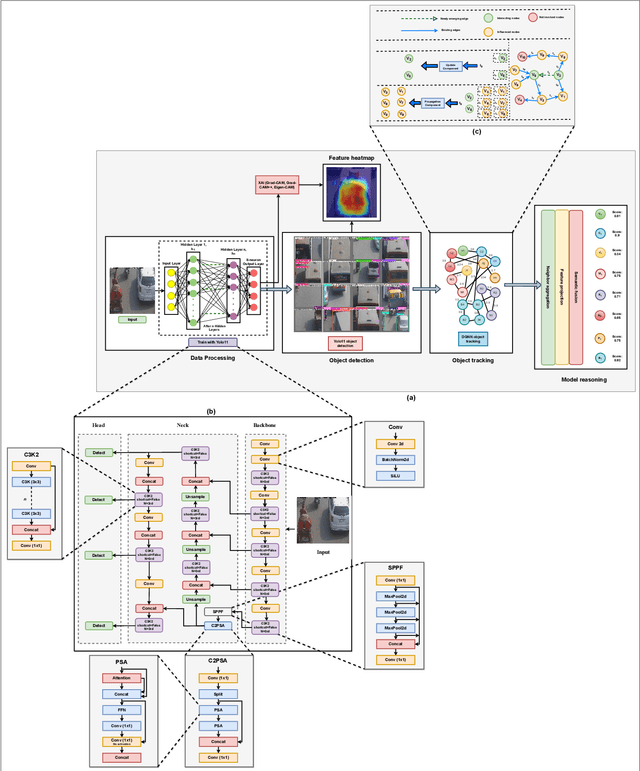

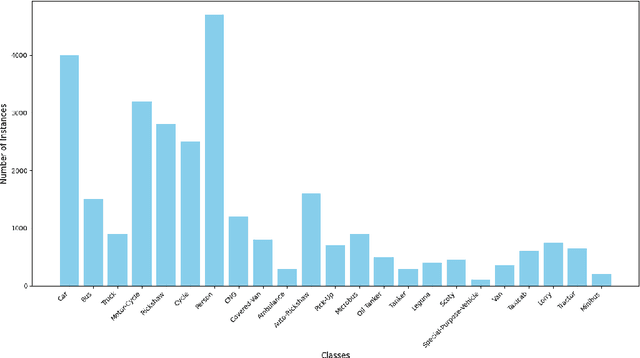

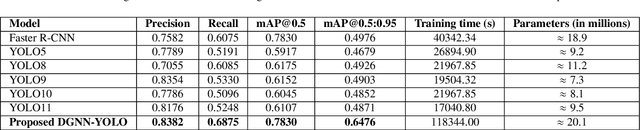

Accurate detection and tracking of small objects, such as pedestrians, cyclists, and motorbikes, is critical for traffic surveillance systems, which in turn support road safety and decision-making in intelligent transportation systems. However, traditional methods struggle with occlusion, low resolution, and dynamic traffic conditions. This paper introduces DGNN-YOLO, a framework that integrates dynamic graph neural networks (DGNN) with YOLO11 to improve small-object detection and tracking in traffic surveillance. The framework uses YOLO11's spatial feature extraction for object detection and a DGNN to dynamically model spatial-temporal relationships for robust real-time tracking. By constructing and updating graph structures, DGNN-YOLO represents objects as nodes and their interactions as edges, which allows tracking to adapt to complex and dynamic environments. Additionally, Grad-CAM, Grad-CAM++, and Eigen-CAM visualization techniques were applied to DGNN-YOLO to provide model-agnostic interpretability and insight into the model's decision-making process, enhancing its transparency and trustworthiness. Extensive experiments showed that DGNN-YOLO consistently outperformed state-of-the-art methods in detecting and tracking small objects under diverse traffic conditions, achieving the highest precision (0.8382), recall (0.6875), and mAP@0.5:0.95 (0.6476), which indicates robustness and scalability, particularly in challenging scenarios involving small and occluded objects. This study provides a scalable, real-time solution for traffic surveillance and analysis, contributing to intelligent transportation systems.
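To make the graph representation concrete, the following is a minimal sketch of rebuilding a per-frame graph in which detected objects are nodes and nearby objects are joined by edges. It is an illustration under stated assumptions, not the paper's implementation: the `Detection` structure, the `build_frame_graph` function, and the centroid-distance rule with its `max_dist` threshold are all hypothetical, and the abstract does not specify how DGNN-YOLO defines its edges or node features.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detected object in a frame (hypothetical structure)."""
    track_id: int
    box: tuple  # (x1, y1, x2, y2) in pixels


def centroid(box):
    """Return the center point of a bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def build_frame_graph(detections, max_dist=100.0):
    """Build a graph for one frame.

    Nodes map track_id -> centroid. An undirected edge (a, b) with a < b
    is added when two centroids lie within max_dist pixels of each other.
    The distance rule is an assumption made for illustration only.
    """
    nodes = {d.track_id: centroid(d.box) for d in detections}
    edges = set()
    ids = sorted(nodes)
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if math.dist(nodes[a], nodes[b]) <= max_dist:
                edges.add((a, b))
    return nodes, edges


# Two consecutive frames: object 2 leaves the scene and object 3 moves
# close to object 1, so the graph structure changes between frames.
frame_1 = [
    Detection(1, (0, 0, 20, 40)),
    Detection(2, (30, 0, 50, 40)),
    Detection(3, (400, 300, 420, 340)),
]
frame_2 = [
    Detection(1, (5, 0, 25, 40)),
    Detection(3, (60, 0, 80, 40)),
]

nodes_1, edges_1 = build_frame_graph(frame_1)
nodes_2, edges_2 = build_frame_graph(frame_2)
```

Rebuilding the graph for every frame is the simplest way to let nodes and edges appear and disappear as objects move. A dynamic graph neural network would then operate on this sequence of graphs, which the sketch does not attempt to model.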
