Detecting and Correcting Adversarial Images Using Image Processing Operations

Dec 30, 2019

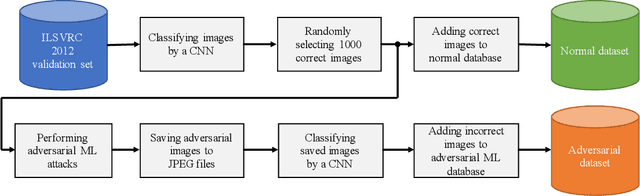

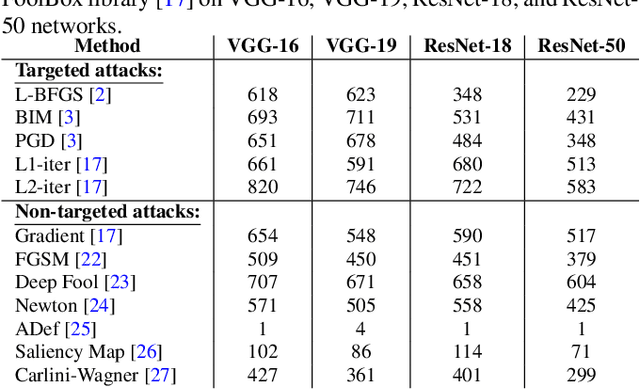

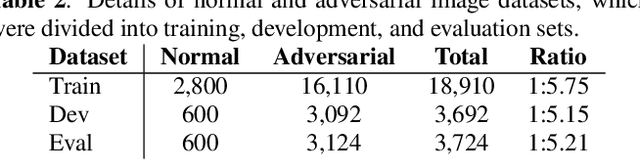

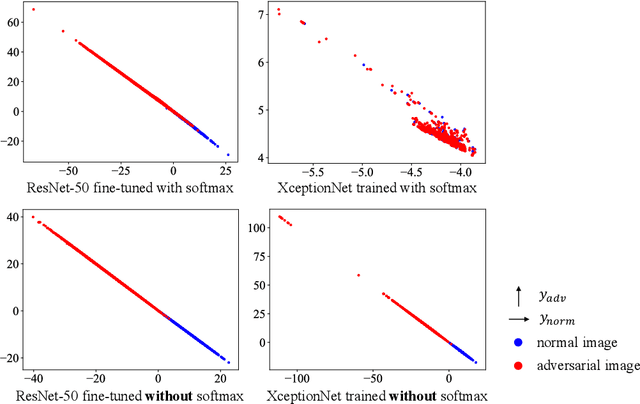

Deep neural networks (DNNs) have achieved excellent performance on several tasks and are widely used in both academia and industry. However, DNNs are vulnerable to adversarial machine learning attacks, in which noise is added to the input to change the network's output. We have devised a method that uses image processing operations to detect adversarial images, based on our observation that applying such operations reduces adversarial noise while leaving normal images almost unaffected. In addition to detection, the method can be used to restore the original labels of adversarial images, which is crucial to restoring the normal functionality of DNN-based systems. We tested the method on an adversarial machine learning database that we built by generating several types of attack from images in the ImageNet Large Scale Visual Recognition Challenge database; the results demonstrated the efficiency of the proposed method for both detection and correction.
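The observation in the abstract can be illustrated with a minimal sketch. The abstract does not say which image processing operations or which decision rule the paper uses, so everything concrete here is an assumption made for illustration: a 3x3 median filter stands in for the operation, an image is flagged when its predicted label changes after filtering, and the label predicted on the filtered image is taken as the corrected one. The names `median_filter3`, `detect_and_correct`, and `toy_classify` are hypothetical.

```python
import numpy as np


def median_filter3(image):
    """Apply a 3x3 median filter to a 2-D grayscale array (edge-padded)."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    # Stack the nine shifted views so each pixel sees its 3x3 neighbourhood.
    windows = np.stack(
        [padded[i:i + h, j:j + w] for i in range(3) for j in range(3)],
        axis=0,
    )
    return np.median(windows, axis=0)


def detect_and_correct(image, classify, transform=median_filter3):
    """Flag an image if its label changes after `transform` (assumed rule).

    Returns (is_adversarial, label_after_transform). `classify` is any
    callable mapping an image array to a label; it stands in for a DNN.
    """
    label_before = classify(image)
    label_after = classify(transform(image))
    return label_before != label_after, label_after


def toy_classify(image):
    """Hypothetical stand-in classifier: label 1 if any pixel exceeds 0.5."""
    return int(image.max() > 0.5)


# A clean image, and a copy with one isolated high-magnitude pixel that
# plays the role of adversarial noise for the toy classifier.
clean = np.zeros((5, 5))
perturbed = clean.copy()
perturbed[2, 2] = 1.0

clean_result = detect_and_correct(clean, toy_classify)
perturbed_result = detect_and_correct(perturbed, toy_classify)
```

In this toy setting the filter removes the isolated pixel, so the perturbed image changes label and is flagged, while the clean image keeps its label. A real pipeline would replace `toy_classify` with the DNN under attack and would likely need a more robust decision rule than a single label comparison.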
