Demystifying Pseudo-LiDAR for Monocular 3D Object Detection

Dec 10, 2020

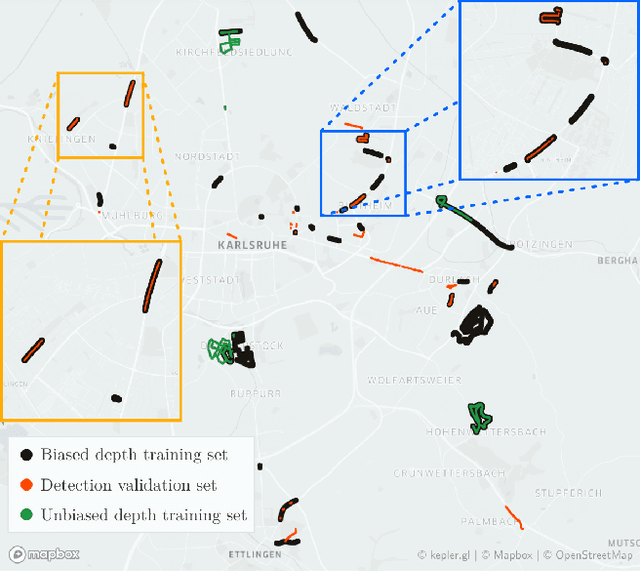

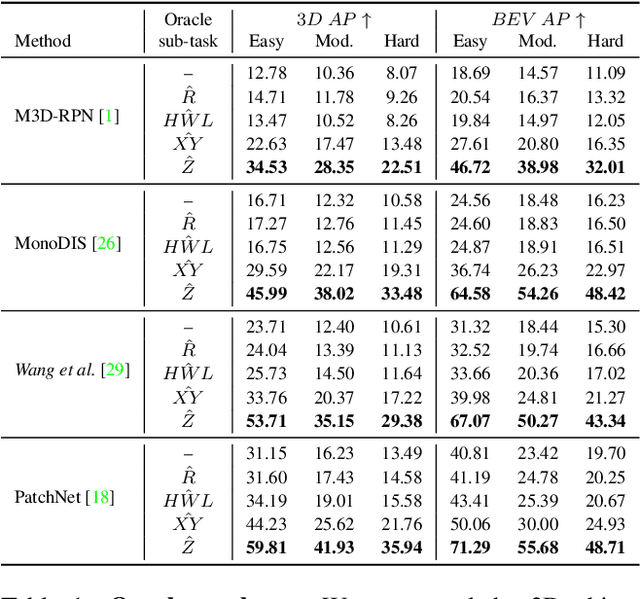

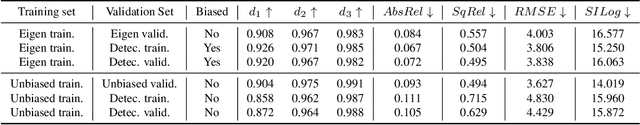

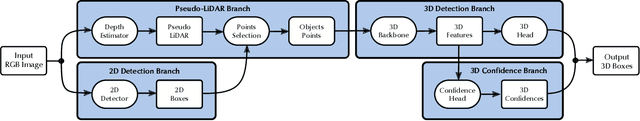

Pseudo-LiDAR-based methods for monocular 3D object detection have attracted considerable attention in the community due to the performance gains shown on the KITTI3D benchmark dataset, in particular on the commonly reported validation split. This has created a distorted impression of the superiority of Pseudo-LiDAR approaches over methods working with RGB images only. Our first contribution consists in rectifying this view by analysing and showing experimentally that the validation results published by Pseudo-LiDAR-based methods are substantially biased. The source of the bias is an overlap between the KITTI3D object detection validation set and the training/validation sets used to train the depth predictors feeding Pseudo-LiDAR-based methods. Surprisingly, the bias persists even after geographically removing the overlap, revealing the presence of a more structured contamination. This leaves the test set as the only reliable means of comparison, where published Pseudo-LiDAR-based methods do not excel. Our second contribution brings Pseudo-LiDAR-based methods back up in the ranking with the introduction of a 3D confidence prediction module. Thanks to the proposed architectural changes, our modified Pseudo-LiDAR-based methods exhibit extraordinary gains on the test scores (up to +8% 3D AP).
