Demystifying CNNs for Images by Matched Filters

Oct 16, 2022

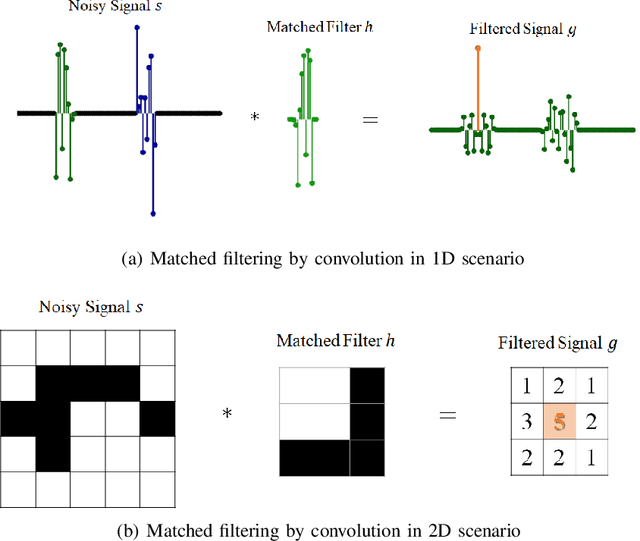

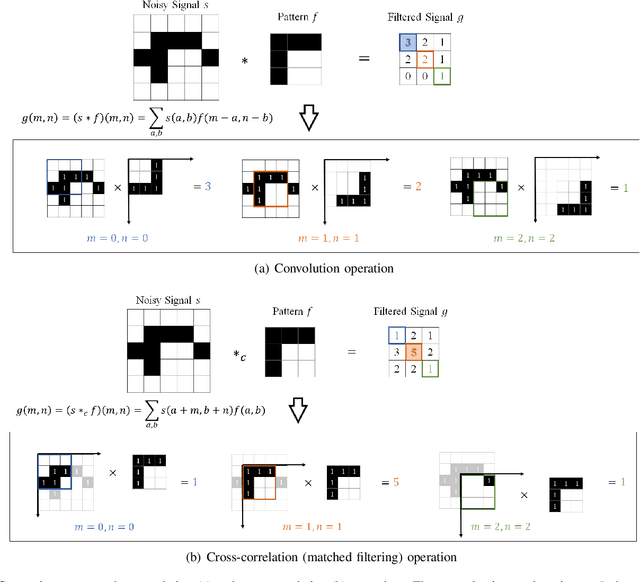

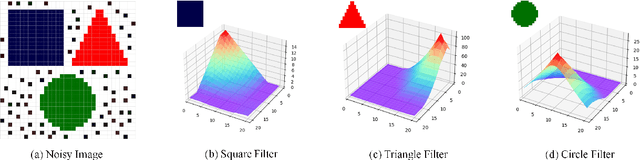

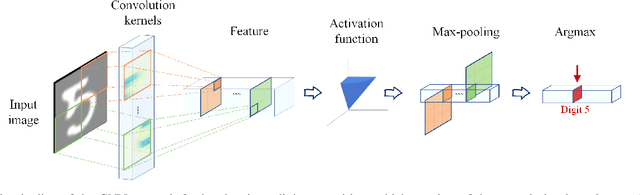

The success of convolutional neural networks (CNNs) has been revolutionising the way we approach and use intelligent machines in the Big Data era. Despite this success, CNNs have been consistently put under scrutiny owing to their *black-box* nature, the *ad hoc* manner of their construction, and the lack of theoretical support for, and physical interpretation of, their operation. This has hindered both the quantitative and qualitative understanding of CNNs, and their application in more sensitive areas such as AI for health. We set out to address these issues, and in this way demystify the operation of CNNs, by employing the perspective of matched filtering. We first show that the convolution operation, the very core of CNNs, represents a matched filter which aims to identify the presence of features in input data. This then serves as a vehicle to interpret the convolution-activation-pooling chain in CNNs under the theoretical umbrella of matched filtering, a common operation in signal processing. We further provide extensive examples and experiments to illustrate this connection, whereby learning in CNNs is shown to also perform matched filtering, which sheds further light on the physical meaning of the learnt parameters and layers. It is our hope that this material will provide new insights into the understanding, construction and analysis of CNNs, and pave the way for developing new CNN methods and architectures.
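
The matched-filter reading of the convolution-activation-pooling chain can be illustrated with a minimal one-dimensional sketch. This is not the authors' code: the function name `matched_filter_chain`, the length-7 Barker template, the noise level and the pooling width are all assumptions chosen for illustration. The "convolution" is computed as a cross-correlation with the template (as CNN libraries do), the activation is a ReLU, and the pooling is a non-overlapping max.

```python
import numpy as np

def matched_filter_chain(signal, template, pool=4):
    """Hypothetical helper: correlation -> ReLU -> max pooling on a 1-D signal."""
    # Cross-correlate the signal with the template. A CNN "convolution"
    # layer computes this same sliding inner product with its kernel.
    response = np.correlate(signal, template, mode="valid")
    # ReLU keeps positive matches and discards anti-correlated positions.
    activated = np.maximum(response, 0.0)
    # Non-overlapping max pooling keeps the strongest match in each window.
    n = len(activated) // pool * pool
    pooled = activated[:n].reshape(-1, pool).max(axis=1)
    return response, activated, pooled

# Illustrative data (assumed, not from the paper): a length-7 Barker
# sequence hidden at a known position in low-level Gaussian noise.
rng = np.random.default_rng(0)
template = np.array([1.0, 1.0, 1.0, -1.0, -1.0, 1.0, -1.0])
signal = 0.1 * rng.standard_normal(64)
true_pos = 23
signal[true_pos:true_pos + len(template)] += template

response, activated, pooled = matched_filter_chain(signal, template)
detected_pos = int(np.argmax(response))   # sample index of the best match
detected_window = int(np.argmax(pooled))  # pooled window holding that match
print(detected_pos, detected_window)
```

The correlation output peaks where the input is best aligned with the template, which is the sense in which a convolution kernel acts as a matched filter for a feature. Pooling then reports the presence of that feature within a window while discarding its exact position.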
