DeepMMSA: A Novel Multimodal Deep Learning Method for Non-small Cell Lung Cancer Survival Analysis

Jun 12, 2021

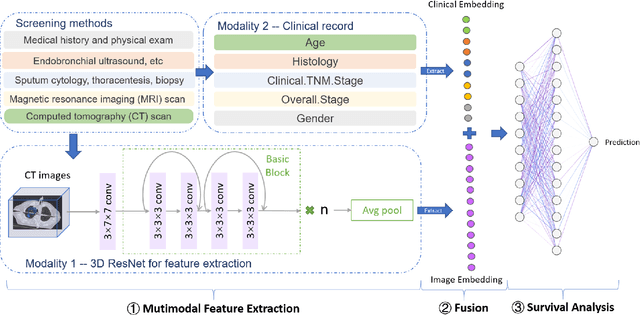

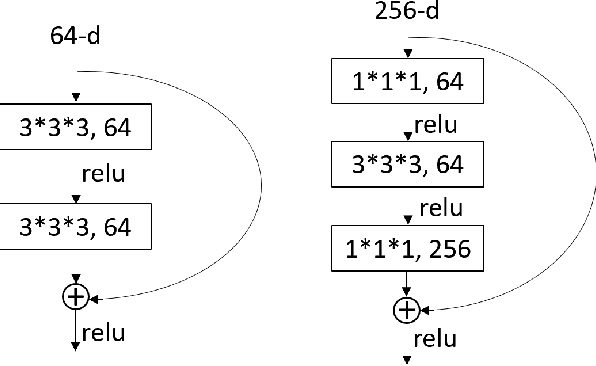

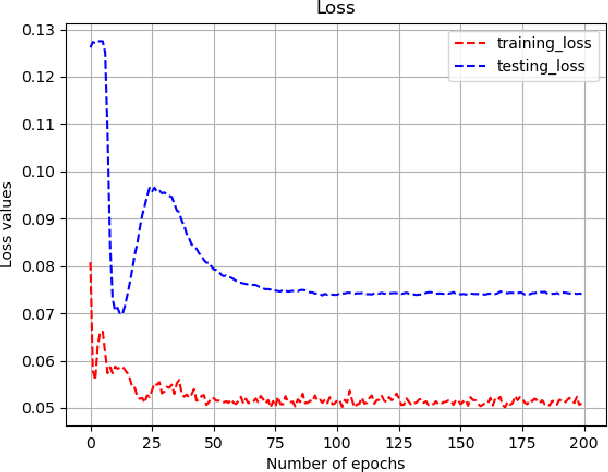

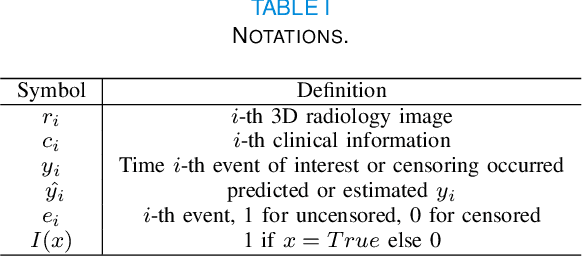

Lung cancer is the leading cause of cancer death worldwide. The critical reasons for these deaths are delayed diagnosis and poor prognosis. With its accelerated development, deep learning has been applied successfully in many real-world settings, including health-sector tasks such as medical image interpretation and disease diagnosis. By combining more of the modalities involved in processing information, multimodal learning can extract better features and improve predictive ability. Conventional methods for lung cancer survival analysis normally use clinical data alone and provide only a statistical probability. To improve survival prediction accuracy and support medical experts in prognostic decision-making in clinical practice, we propose, for the first time, a multimodal deep learning method for non-small cell lung cancer (NSCLC) survival analysis, named DeepMMSA. The method leverages CT images in combination with clinical data, so that the abundant information held within medical images can be associated with lung cancer survival information. We validate our method on data from 422 NSCLC patients from The Cancer Imaging Archive (TCIA). Experimental results support our hypothesis that there is an underlying relationship between prognostic information and radiomic images. In addition, quantitative results show that the established multimodal model can be applied to traditional methods, has the potential to break the bottleneck of existing methods, and increases the percentage of concordant pairs (correctly predicted pairs) in the overall population by 4%.
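The "percentage of concordant pairs" reported above is commonly measured with a concordance index. The following is a minimal sketch of Harrell's C-index in plain Python, not the authors' evaluation code: the function name `concordance_index`, the convention that a higher risk score means shorter predicted survival, and the toy data are all assumptions made for illustration. Pairs with tied observed times are skipped, which is a simplification.

```python
from itertools import combinations


def concordance_index(times, events, risk_scores):
    """Fraction of comparable patient pairs whose predicted order is correct.

    times       -- observed follow-up time per patient
    events      -- 1 if death was observed, 0 if the patient was censored
    risk_scores -- model output; higher is assumed to mean shorter survival
    """
    concordant = 0.0
    comparable = 0
    for i, j in combinations(range(len(times)), 2):
        # Let `a` be the patient with the shorter observed time.
        a, b = (i, j) if times[i] < times[j] else (j, i)
        # A pair is comparable only if the earlier time is an observed death;
        # tied times are skipped in this simplified version.
        if times[a] == times[b] or not events[a]:
            continue
        comparable += 1
        if risk_scores[a] > risk_scores[b]:
            concordant += 1.0   # correctly ordered pair
        elif risk_scores[a] == risk_scores[b]:
            concordant += 0.5   # tied prediction counts as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Toy data (hypothetical): four patients, the second one is censored.
times = [5, 10, 15, 20]
events = [1, 0, 1, 1]
risk = [0.9, 0.4, 0.1, 0.6]
print(concordance_index(times, events, risk))  # 0.75
```

In the toy data, four pairs are comparable and three of them are ordered correctly, giving 0.75. A value of 0.5 corresponds to random ordering and 1.0 to a perfect ranking.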