DeepBlindness: Fast Blindness Map Estimation and Blindness Type Classification for Outdoor Scene from Single Color Image

Nov 02, 2019

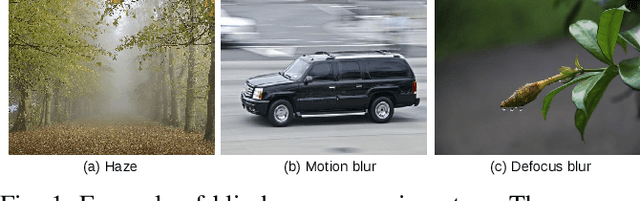

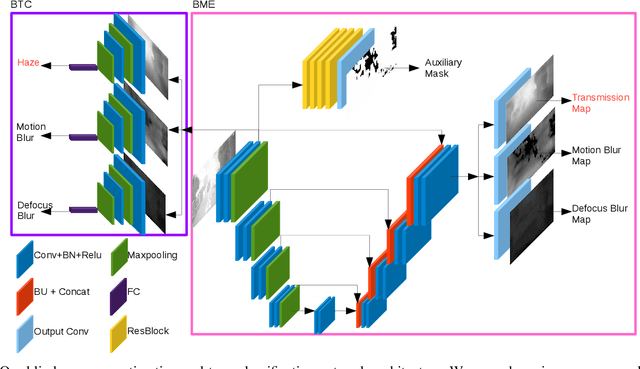

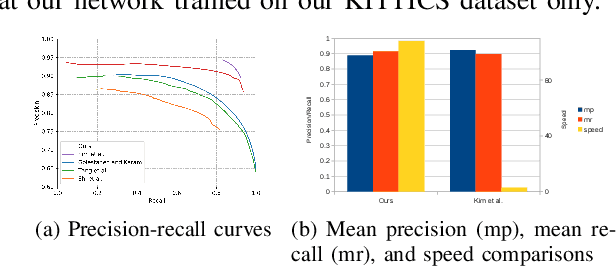

Outdoor robotic vision systems and autonomous cars suffer from many image-quality issues, particularly haze, defocus blur, and motion blur, which we refer to collectively as "blindness issues". These issues can seriously degrade the performance of robotic systems and lead to unsafe decisions. However, existing solutions either address only one type of blindness or cannot estimate the degree of blindness accurately. They are also computationally heavy, which prevents them from running in real time on practical systems. In this paper, we present a method that simultaneously detects the type of blindness and produces a blindness map indicating, pixel by pixel, the degree to which vision is limited. Both the blindness type and the per-pixel blindness estimate are essential for tasks such as deblurring, dehazing, and the fail-safe operation of robotic systems. We demonstrate the effectiveness of our approach on the KITTI and CUHK datasets, where experiments show that our method outperforms other state-of-the-art approaches while running at about 130 frames per second (fps).
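To make the fail-safe use case concrete, the following is a minimal sketch of how a downstream system might consume the two outputs named in the abstract, a blindness type and a per-pixel blindness map. It is not the paper's method. The label names, the assumption that map values lie in [0, 1], the function names `blind_fraction` and `fail_safe_decision`, and both thresholds are hypothetical choices made for illustration only.

```python
from typing import List, Tuple

# Hypothetical label set, based on the blindness types named in the abstract.
BLINDNESS_TYPES = ("none", "haze", "defocus_blur", "motion_blur")


def blind_fraction(blindness_map: List[List[float]],
                   pixel_threshold: float = 0.5) -> float:
    """Return the fraction of pixels whose blindness degree exceeds pixel_threshold.

    Assumes (hypothetically) that each map value is a degree in [0, 1],
    where 0 means fully visible and 1 means fully blind.
    """
    total = sum(len(row) for row in blindness_map)
    if total == 0:
        raise ValueError("blindness_map is empty")
    blind = sum(1 for row in blindness_map for value in row
                if value > pixel_threshold)
    return blind / total


def fail_safe_decision(blindness_type: str,
                       blindness_map: List[List[float]],
                       pixel_threshold: float = 0.5,
                       area_threshold: float = 0.3) -> Tuple[bool, float]:
    """Decide whether to trigger a fail-safe, and report the blind fraction.

    The rule used here (a non-"none" type and more than area_threshold of
    the image blind) is an illustrative assumption, not taken from the paper.
    """
    if blindness_type not in BLINDNESS_TYPES:
        raise ValueError(f"unknown blindness type: {blindness_type!r}")
    fraction = blind_fraction(blindness_map, pixel_threshold)
    trigger = blindness_type != "none" and fraction > area_threshold
    return trigger, fraction


if __name__ == "__main__":
    # Toy 2x4 map standing in for a real per-pixel prediction.
    demo_map = [[0.1, 0.2, 0.9, 0.8],
                [0.0, 0.7, 0.9, 0.6]]
    print(fail_safe_decision("haze", demo_map))
```

A real system would likely weight regions of the image differently (for example, the road ahead versus the sky) and tune the thresholds per blindness type; the flat area fraction here is only the simplest possible rule.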
