D-PAGE: Diverse Paraphrase Generation


Aug 13, 2018

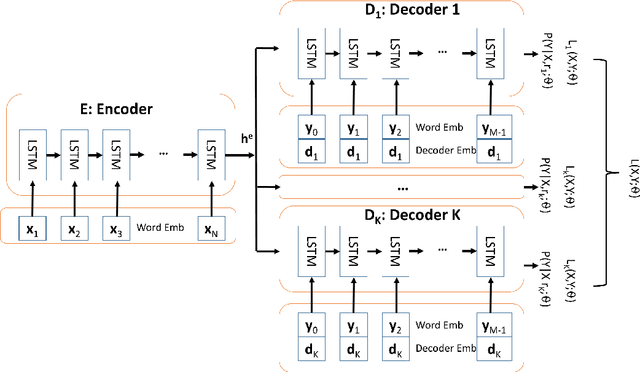

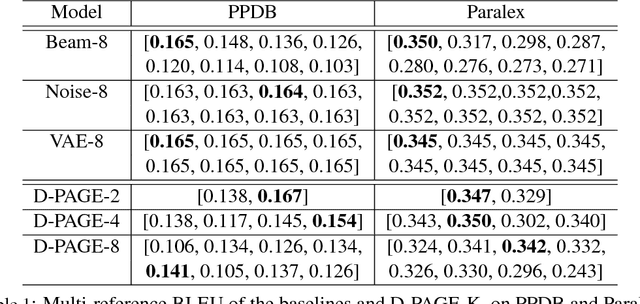

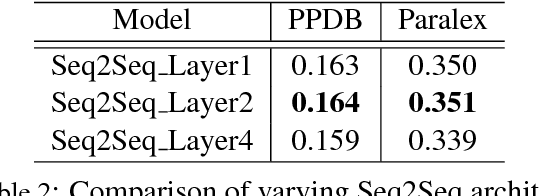

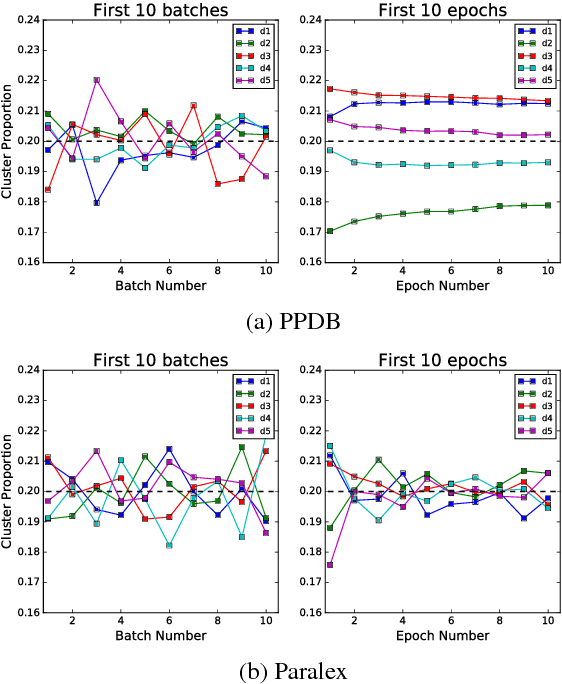

In this paper, we investigate the diversity aspect of paraphrase generation. Prior deep learning models either rely on decoding methods or add random input noise to vary their outputs. We propose a simple method, Diverse Paraphrase Generation (D-PAGE), which extends neural machine translation (NMT) models to support the generation of diverse paraphrases with implicit rewriting patterns. Our experimental results on two real-world benchmark datasets demonstrate that our model generates outputs that are at least one order of magnitude more diverse than those of the baselines, as measured by a new evaluation metric, Jeffrey's Divergence. We have also conducted extensive experiments to understand various properties of our model, with a focus on diversity.
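The abstract names Jeffrey's Divergence as the diversity metric but does not say how it is computed over model outputs. As a rough illustration only, the sketch below uses the standard definition of the divergence as the symmetrized Kullback-Leibler divergence, J(P, Q) = KL(P || Q) + KL(Q || P). The choice of smoothed unigram distributions over whitespace tokens, the helper names, and the example sentences are all assumptions made for this sketch, not the paper's evaluation protocol.

```python
import math
from collections import Counter


def unigram_distribution(sentences, vocab, smoothing=1.0):
    """Additively smoothed unigram distribution over a fixed vocabulary.

    Hypothetical helper: whitespace tokenization and add-one smoothing
    are assumptions of this sketch, not taken from the paper.
    """
    counts = Counter(tok for s in sentences for tok in s.lower().split())
    total = sum(counts[w] for w in vocab) + smoothing * len(vocab)
    return {w: (counts[w] + smoothing) / total for w in vocab}


def kl_divergence(p, q):
    """KL(P || Q) for two distributions defined on the same support."""
    return sum(p[w] * math.log(p[w] / q[w]) for w in p)


def jeffreys_divergence(p, q):
    """Symmetrized KL divergence: KL(P || Q) + KL(Q || P)."""
    return kl_divergence(p, q) + kl_divergence(q, p)


# Two made-up sets of paraphrases, standing in for two groups of outputs.
outputs_a = ["how do i learn python", "what is the best way to learn python"]
outputs_b = ["how can one study python", "which method is best for studying python"]

vocab = sorted({tok for s in outputs_a + outputs_b for tok in s.lower().split()})
p = unigram_distribution(outputs_a, vocab)
q = unigram_distribution(outputs_b, vocab)

print(jeffreys_divergence(p, q))
```

Smoothing keeps every probability strictly positive, so both KL terms stay finite. The divergence is zero when the two distributions coincide and grows as the two sets of outputs use more distinct wording, which is the intuition behind treating a larger value as a sign of greater diversity.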
