Counterfactual VQA: A Cause-Effect Look at Language Bias

Jun 15, 2020

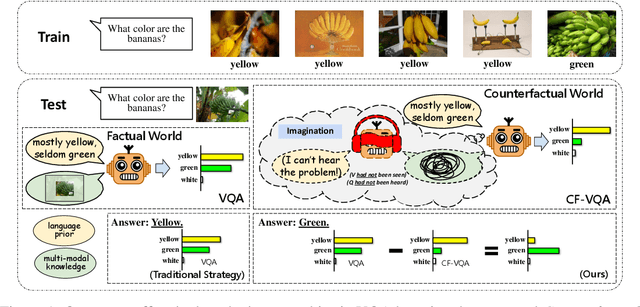

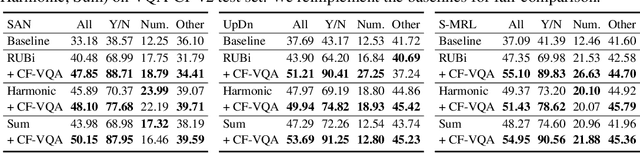

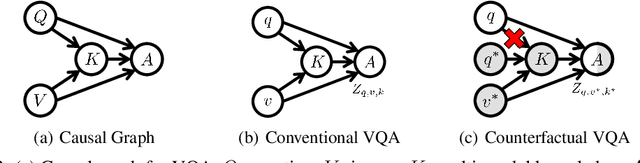

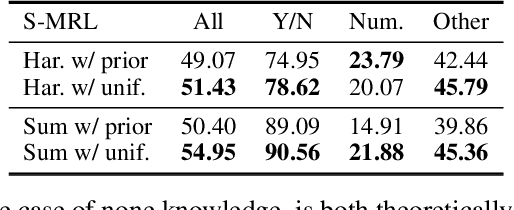

Visual Question Answering (VQA) models tend to rely on language bias and thus fail to learn reasoning from visual knowledge, which is the original goal of VQA. In this paper, we propose a novel cause-effect look at language bias, in which the bias is formulated as the direct effect of the question on the answer from the viewpoint of causal inference. This effect can be captured by counterfactual VQA, an imagined scenario in which the image had not existed. Our proposed cause-effect look 1) is general to any baseline VQA architecture, 2) achieves significant improvement on the language-bias-sensitive VQA-CP dataset, and 3) fills the theoretical gap in recent language-prior-based works.
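
To make the idea concrete, the following is a minimal sketch and not the paper's implementation. The abstract does not specify how the direct effect of the question is computed or removed, so the sketch assumes a simple subtraction: answer scores from a question-only branch (the counterfactual scenario in which the image had not existed) are subtracted from the scores of the full question-plus-image model. The function names, the subtraction rule, and all score values are hypothetical and chosen only for illustration.

```python
# Illustrative sketch only: the subtraction rule and every number below are
# assumptions, not taken from the paper.

def debiased_scores(factual_scores, question_only_scores):
    """Remove the assumed language-only effect from the factual answer scores.

    factual_scores:        scores from a model that sees question and image.
    question_only_scores:  scores from a hypothetical question-only branch,
                           standing in for the counterfactual "no image" case.
    """
    if len(factual_scores) != len(question_only_scores):
        raise ValueError("score lists must have the same length")
    return [f - q for f, q in zip(factual_scores, question_only_scores)]


def predict(answers, factual_scores, question_only_scores):
    """Return the answer with the highest debiased score."""
    scores = debiased_scores(factual_scores, question_only_scores)
    best_index = max(range(len(answers)), key=lambda i: scores[i])
    return answers[best_index]


# Toy example: "What color is the banana?" shown with a green banana.
answers = ["yellow", "green", "white"]
factual = [2.0, 1.8, 0.3]        # made-up scores; the language prior favors "yellow"
question_only = [1.5, 0.2, 0.1]  # made-up scores from the question alone

biased_answer = answers[max(range(len(answers)), key=lambda i: factual[i])]
debiased_answer = predict(answers, factual, question_only)
print(biased_answer, debiased_answer)
```

In this toy case the factual scores alone pick "yellow", driven by the language prior, while the debiased scores pick "green". Because the adjustment only needs two sets of answer scores, a scheme of this kind could in principle wrap any baseline VQA architecture, which is consistent with the generality claimed in the abstract.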
