Convolutional Neural Networks with Dynamic Regularization

Paper and Code

Sep 26, 2019

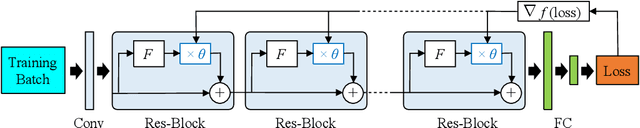

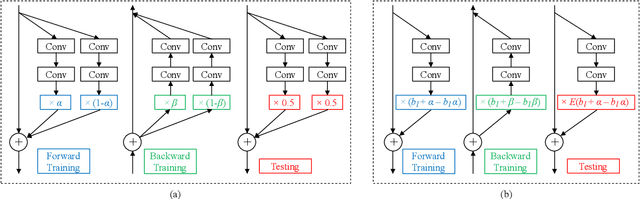

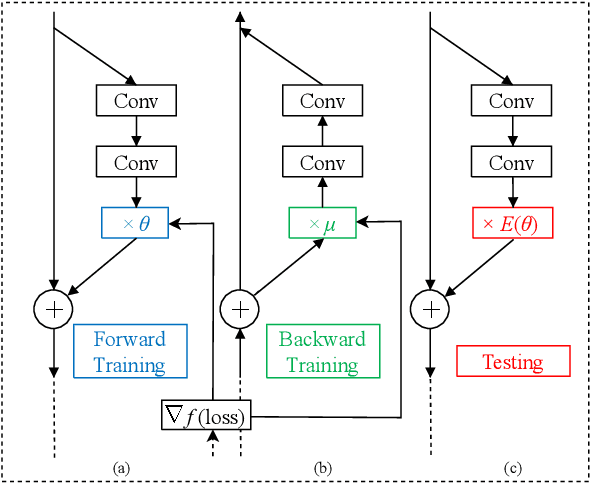

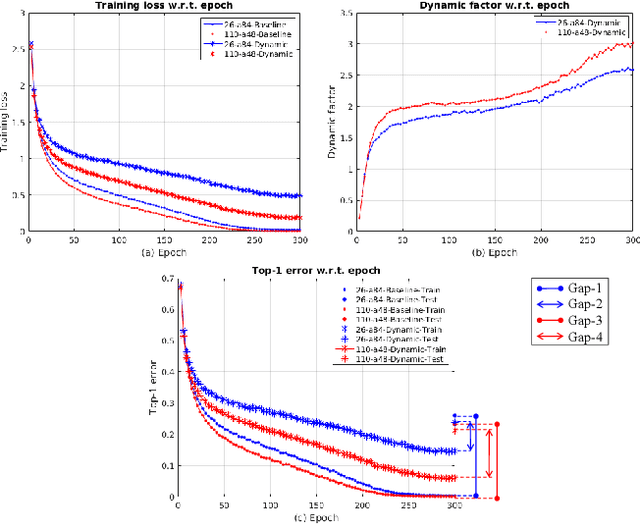

Regularization is commonly used in machine learning to alleviate overfitting. In convolutional neural networks, regularization methods such as Dropout and Shake-Shake have been proposed to improve generalization performance. However, these methods lack self-adaptation throughout training: the regularization strength is fixed to a predefined schedule, and manual adjustment is needed to adapt it to different network architectures. In this paper, we propose a dynamic regularization method that adjusts the regularization strength during the training procedure. Specifically, we model the regularization strength as a backward difference of the training loss, which can be extracted directly in each training iteration. With dynamic regularization, a large model is regularized by a strong perturbation, and a small model by a weak one. Experimental results show that the proposed method improves the generalization capability of off-the-shelf network architectures and outperforms state-of-the-art regularization methods.
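To make the idea of driving the regularization strength from a backward difference of the training loss concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the update rule from the paper: the function names, the `gain` and `max_strength` parameters, the sign convention (a falling loss raises the strength), and the clipping range are all hypothetical.

```python
def backward_difference(losses):
    """Return the backward differences loss[t] - loss[t-1] for t >= 1."""
    return [curr - prev for prev, curr in zip(losses, losses[1:])]


def update_strength(strength, prev_loss, curr_loss, gain=1.0, max_strength=1.0):
    """Adjust a scalar regularization strength from one loss step.

    Assumed rule (hypothetical, for illustration only): when the training
    loss falls, the model is fitting the data quickly, so the strength is
    raised; when the loss rises, the strength is lowered. The result is
    clipped to the range [0, max_strength].
    """
    delta = curr_loss - prev_loss          # backward difference of the loss
    new_strength = strength - gain * delta
    return min(max(new_strength, 0.0), max_strength)


def strength_schedule(losses, gain=1.0, max_strength=1.0):
    """Replay a recorded loss curve and return the strength after each step."""
    strength = 0.0
    schedule = []
    for prev_loss, curr_loss in zip(losses, losses[1:]):
        strength = update_strength(strength, prev_loss, curr_loss,
                                   gain=gain, max_strength=max_strength)
        schedule.append(strength)
    return schedule
```

In a training loop, the resulting strength could scale whatever perturbation the chosen regularizer applies (for example, a noise amplitude). A model whose loss falls quickly would accumulate a larger strength under this assumed rule, which matches the abstract's claim that larger models receive stronger perturbation.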
