Convolutional neural networks on irregular domains based on approximate vertex-domain translations


Nov 05, 2018

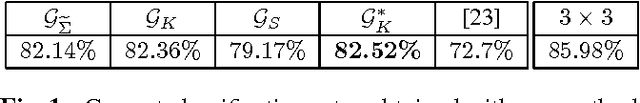

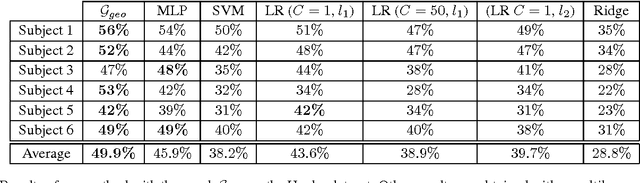

We propose a generalization of convolutional neural networks (CNNs) to irregular domains through the use of a translation operator on a graph structure. In regular settings such as images, convolutional layers are designed by translating a convolutional kernel over all pixels, which enforces translation equivariance. On general graphs, however, translation is not a well-defined operation, so shifting a convolutional kernel is not straightforward. In this article, we introduce a methodology for designing convolutional layers adapted to signals evolving on irregular topologies, even in the absence of a natural translation. Using the designed layers, we build a CNN that we train on the initial set of signals. Contrary to other approaches that aim to extend CNNs to irregular domains, our approach includes the classical setting of CNNs for 2D signals as a particular case. Designing convolutional layers directly in the vertex domain implies weight sharing, which other approaches generally estimate a posteriori using heuristics.
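To make the regular-grid case concrete, the following minimal sketch writes a convolutional layer as a weighted sum of translated copies of a 2D signal and checks translation equivariance numerically. It is an illustration only, not the method proposed in the article: the names `translate` and `conv_layer`, the circular boundary handling via `np.roll`, and the example weights are all assumptions introduced here, and the sketch does not cover irregular graphs.

```python
import numpy as np

def translate(signal, offset):
    """Circularly shift a 2D signal by (dy, dx).

    Assumption: periodic boundaries stand in for translation on a regular grid.
    """
    return np.roll(signal, shift=offset, axis=(0, 1))

def conv_layer(signal, weights):
    """Weighted sum of translated copies of the signal.

    `weights` maps an offset (dy, dx) to one scalar, so every pixel
    applies the same kernel (weight sharing).
    """
    out = np.zeros_like(signal, dtype=float)
    for offset, w in weights.items():
        out += w * translate(signal, offset)
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((6, 6))

# Hypothetical kernel: four offsets, each with one shared weight.
weights = {(0, 0): 0.5, (0, 1): -1.0, (1, 0): 2.0, (-1, -1): 0.25}

# Equivariance check: translating then convolving should match
# convolving then translating.
shift = (2, 3)
lhs = conv_layer(translate(x, shift), weights)
rhs = translate(conv_layer(x, weights), shift)
equivariant = np.allclose(lhs, rhs)
```

On a grid with periodic boundaries the two sides agree because circular shifts commute with each other. On a general graph no such family of commuting shifts is given in advance, which is the difficulty the abstract describes.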
