Controlled Dropout for Uncertainty Estimation


May 06, 2022

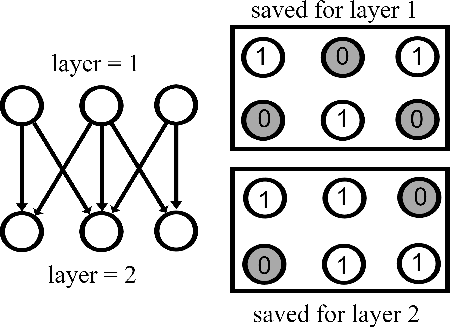

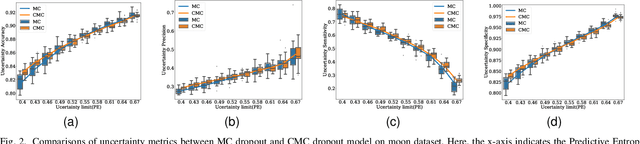

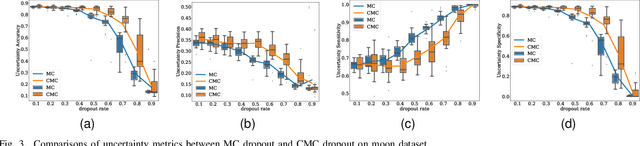

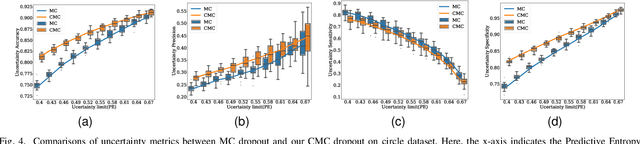

Uncertainty quantification in neural networks is a widely discussed topic for safety-critical applications. Although neural networks (NNs) achieve state-of-the-art performance in many applications, they produce point predictions that carry no information about their uncertainty. Among the methods that enable NNs to estimate uncertainty, Monte Carlo (MC) dropout has quickly gained popularity because of its simplicity. In this study, we present a new version of the traditional dropout layer in which the number of dropout configurations can be fixed. Each layer can then apply the new dropout layer within the MC method to quantify the uncertainty associated with NN predictions. We conduct experiments on both toy and realistic datasets and compare the results with the MC method using the traditional dropout layer. Performance analysis using uncertainty evaluation metrics corroborates that our dropout layer offers better performance in most cases.
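To make the idea concrete, the following is a minimal sketch of MC dropout over a fixed set of dropout configurations. It is not the authors' implementation: the abstract does not say how the configurations are chosen, so the pre-sampled pool of masks is an assumption, and the names `make_masks`, `forward`, and `mc_predict`, the tiny one-hidden-layer network, and the random weights are all hypothetical.

```python
import random
import statistics


def make_masks(n_configs, width, keep_prob, seed=0):
    """Pre-sample a fixed pool of binary dropout masks (assumed design)."""
    rng = random.Random(seed)
    return [
        [1.0 if rng.random() < keep_prob else 0.0 for _ in range(width)]
        for _ in range(n_configs)
    ]


def forward(x, w1, b1, w2, b2, mask, keep_prob):
    """Scalar-in, scalar-out network with one ReLU hidden layer."""
    out = b2
    for j in range(len(w1)):
        hidden = max(w1[j] * x + b1[j], 0.0)
        # Inverted dropout: zero the masked units, rescale the kept ones.
        out += w2[j] * hidden * mask[j] / keep_prob
    return out


def mc_predict(x, weights, masks, keep_prob):
    """One forward pass per fixed mask; return predictive mean and std."""
    preds = [forward(x, *weights, mask, keep_prob) for mask in masks]
    return statistics.fmean(preds), statistics.pstdev(preds)


# Hypothetical untrained weights, used only to exercise the code path.
rng = random.Random(1)
width = 16
weights = (
    [rng.gauss(0.0, 1.0) for _ in range(width)],  # w1
    [0.0] * width,                                # b1
    [rng.gauss(0.0, 1.0) for _ in range(width)],  # w2
    0.0,                                          # b2
)
masks = make_masks(n_configs=8, width=width, keep_prob=0.8)
mean, std = mc_predict(0.5, weights, masks, keep_prob=0.8)
```

In standard MC dropout a fresh mask is sampled on every forward pass, so repeated calls give different estimates. With a fixed pool, as sketched here, the number of passes equals the number of configurations and the estimate is reproducible for a given input.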
