Contrastive learning for unsupervised medical image clustering and reconstruction


Sep 24, 2022

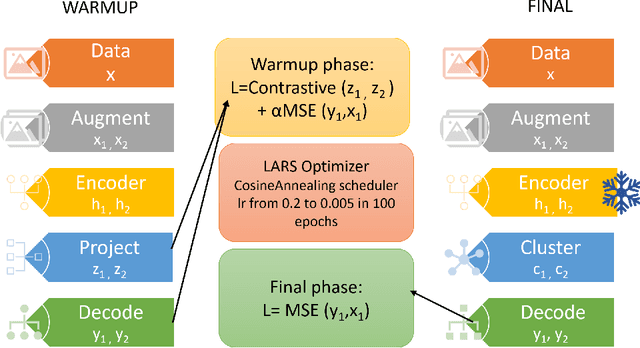

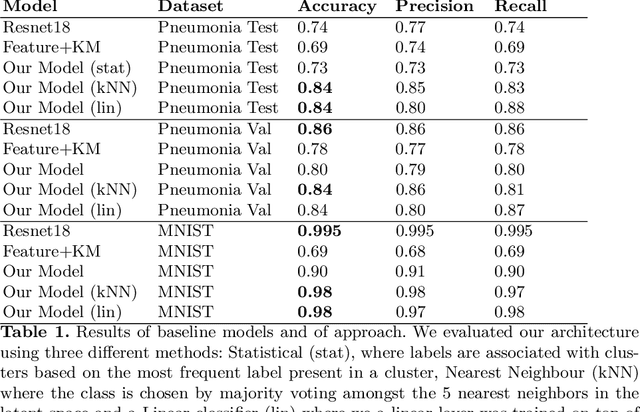

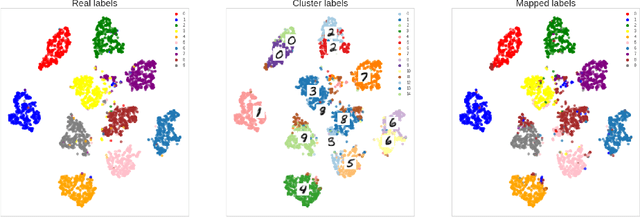

The lack of large labeled medical imaging datasets, together with substantial inter-individual variability relative to clinically established disease classes, makes it difficult to exploit medical imaging information in a precision medicine paradigm. In principle, such a paradigm uses dense patient-specific data to formulate individual predictions and/or to stratify patients into finer-grained groups that may follow more homogeneous trajectories and therefore empower clinical trials. To efficiently explore the effective degrees of freedom underlying variability in medical images, we propose an unsupervised autoencoder framework augmented with a contrastive loss that encourages high separability in the latent space. The model is validated on (medical) benchmark datasets. Because each example receives a label from its cluster assignment, we can compare performance with a supervised transfer learning baseline. Our method achieves performance similar to that of the supervised architecture, indicating that separation in the latent space reproduces the labels assigned by expert medical observers. The proposed method could be beneficial for patient stratification, for exploring new subdivisions of larger classes or pathological continua, or, owing to its sampling abilities in a variational setting, for data augmentation in medical image processing.
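The abstract does not state the exact form of the objective, so the following is only a minimal sketch of how a reconstruction term and a contrastive term might be combined. It assumes a mean-squared-error reconstruction loss and an NT-Xent-style contrastive loss over two augmented views of each image; the function names, the `weight` and `temperature` parameters, and the choice of NT-Xent are all illustrative assumptions, not the authors' implementation.

```python
import math


def _normalize(v):
    # Scale a vector to unit length (zero vectors are left unchanged).
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def contrastive_loss(z_a, z_b, temperature=0.5):
    """NT-Xent-style loss (an assumed choice) on two views of the same batch.

    z_a[i] and z_b[i] are latent codes of two augmentations of image i.
    """
    z = [_normalize(v) for v in list(z_a) + list(z_b)]
    n = len(z_a)
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)  # index of the other view of the same image
        logits = [_dot(z[i], z[j]) / temperature for j in range(2 * n) if j != i]
        pos_logit = _dot(z[i], z[pos]) / temperature
        # Numerically stable log-sum-exp over all non-self similarities.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denom - pos_logit
    return total / (2 * n)


def reconstruction_loss(x, x_hat):
    """Mean squared error between flattened images and their reconstructions."""
    count = sum(len(row) for row in x)
    squared = sum(
        (a - b) ** 2 for row, row_hat in zip(x, x_hat) for a, b in zip(row, row_hat)
    )
    return squared / count


def total_loss(x, x_hat, z_a, z_b, weight=1.0, temperature=0.5):
    # Hypothetical weighting of the two terms; the abstract does not specify one.
    return reconstruction_loss(x, x_hat) + weight * contrastive_loss(
        z_a, z_b, temperature
    )
```

In this sketch the contrastive term is small when the two views of the same image lie close together in the latent space and far from other images, which is one way to encourage the separability the abstract describes.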
