ConTraKG: Contrastive-based Transfer Learning for Visual Object Recognition using Knowledge Graphs


Feb 17, 2021

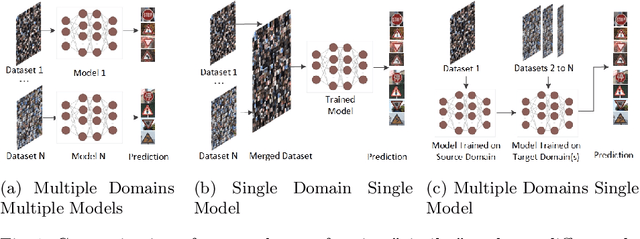

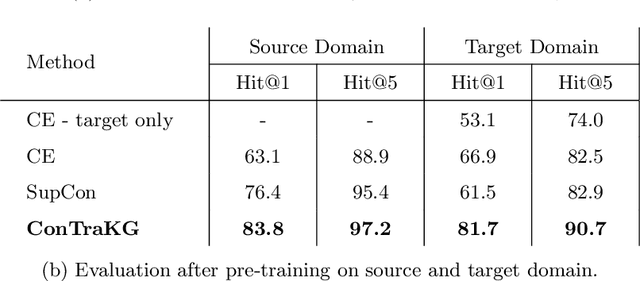

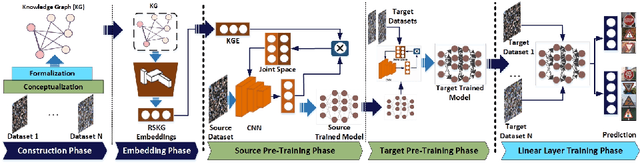

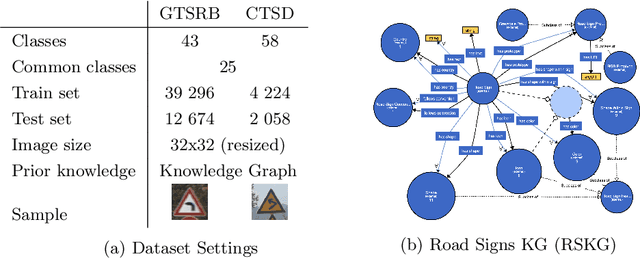

Deep learning techniques achieve high accuracy in computer vision tasks. However, their accuracy drops considerably under a domain change, i.e., as soon as they are used in a domain that differs from their training domain. For example, a model trained to recognize road signs in Germany performs poorly in countries with different road sign standards, such as China. We propose ConTraKG, a neuro-symbolic approach that enables cross-domain transfer learning based on prior knowledge about the domain or context. A knowledge graph serves as a medium for encoding such prior knowledge, which is then transformed into a dense vector representation via embedding methods. Using a five-phase training pipeline, we train the deep neural network to adjust its visual embedding space according to the domain-invariant embedding space of the knowledge graph based on a contrastive loss function. This allows the neural network to incorporate training data from different target domains that are already represented in the knowledge graph. We conduct a series of empirical evaluations to determine the accuracy of our approach. The results show that ConTraKG is significantly more accurate than the conventional approach for dealing with domain changes. In a transfer learning setup, where the network is trained on both domains, ConTraKG achieves 21% higher accuracy when tested on the source domain and 15% higher accuracy when tested on the target domain compared to the standard approach. Moreover, with only 10% of the target data for training, it achieves the same accuracy as the cross-entropy-based model trained on the full target data.
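The idea of pulling visual embeddings toward knowledge graph embeddings with a contrastive loss can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the abstract does not specify the exact loss or the five training phases, so the softmax-over-cosine-similarity form below, the `temperature` parameter, and the function names `kg_contrastive_loss` and `predict` are all assumptions made for illustration. Each image embedding is treated as a query, the knowledge graph embedding of its class as the positive, and the embeddings of all other classes as negatives.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def kg_contrastive_loss(visual, kg_class_emb, labels, temperature=0.1):
    """Hypothetical contrastive loss aligning visual and KG embeddings.

    visual:        (batch, dim) embeddings produced by the neural network
    kg_class_emb:  (num_classes, dim) fixed knowledge graph embeddings
    labels:        (batch,) integer class index of each image
    temperature:   assumed scaling factor, not taken from the paper
    """
    v = l2_normalize(visual)
    k = l2_normalize(kg_class_emb)
    logits = v @ k.T / temperature                    # (batch, num_classes)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Negative log-probability of the matching class embedding, averaged.
    return -log_probs[np.arange(len(labels)), labels].mean()


def predict(visual, kg_class_emb):
    """Classify each image by its most similar KG class embedding."""
    sims = l2_normalize(visual) @ l2_normalize(kg_class_emb).T
    return sims.argmax(axis=1)
```

Because the class embeddings come from the knowledge graph rather than from a domain-specific classifier head, images from a second domain can in principle be trained against the same targets, provided their classes are represented in the graph. In this sketch the loss is small when each visual embedding points in the direction of its class embedding and grows when it points toward a different class.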
