Connecting the Dots: A Knowledgeable Path Generator for Commonsense Question Answering

Paper and Code

May 02, 2020

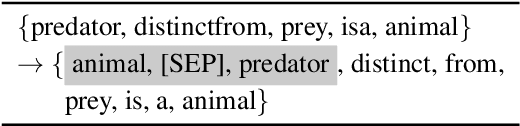

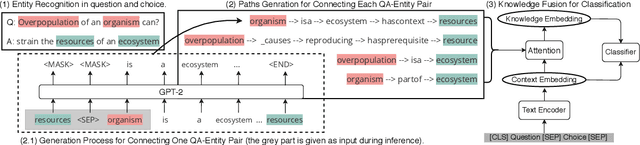

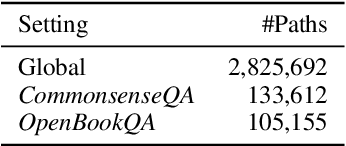

Commonsense question answering (QA) requires modeling general background knowledge about how the world operates and how entities interact with each other. Prior work has leveraged manually curated commonsense knowledge graphs to support commonsense reasoning and has demonstrated their effectiveness. However, these knowledge graphs are incomplete and thus may not contain the knowledge needed to answer a given question. In this paper, we propose to learn a multi-hop knowledge path generator that produces structured evidence dynamically, conditioned on the question. Our generator uses a pre-trained language model as its backbone, drawing on the large amount of unstructured knowledge stored in the language model to compensate for the incompleteness of the knowledge base. Experiments on two commonsense QA datasets demonstrate the effectiveness of our method, which improves significantly over strong baselines and also provides human-interpretable explanations for its predictions.
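To make the incompleteness problem concrete, the following is a minimal sketch, not the paper's implementation. It assumes a toy knowledge graph stored as (head, relation, tail) triples and uses a plain breadth-first search as a stand-in for static path retrieval. The triples, the function names `build_index`, `find_path`, and `linearize`, and the flattened text format are all illustrative assumptions. When the graph lacks a connecting edge, retrieval returns nothing, which is the gap a learned path generator is meant to fill.

```python
from collections import deque

# Toy knowledge graph as (head, relation, tail) triples.
# These entries are illustrative, not taken from any real resource.
TRIPLES = [
    ("bird", "CapableOf", "fly"),
    ("fly", "HasPrerequisite", "wings"),
    ("fish", "AtLocation", "water"),
]


def build_index(triples):
    """Map each head entity to its outgoing (relation, tail) edges."""
    index = {}
    for head, rel, tail in triples:
        index.setdefault(head, []).append((rel, tail))
    return index


def find_path(index, source, target, max_hops=3):
    """Breadth-first search for a path of at most max_hops triples.

    Returns a list of triples, or None when the graph holds no such path.
    """
    queue = deque([(source, [])])
    visited = {source}
    while queue:
        node, path = queue.popleft()
        if node == target:
            return path
        if len(path) >= max_hops:
            continue
        for rel, nxt in index.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None


def linearize(path):
    """Flatten a path into one string (an assumed, hypothetical format)."""
    if not path:
        return ""
    tokens = [path[0][0]]
    for _, rel, tail in path:
        tokens += [rel, tail]
    return " ".join(tokens)


index = build_index(TRIPLES)
found = find_path(index, "bird", "wings")    # two-hop path exists
missing = find_path(index, "fish", "fly")    # no path: the graph is incomplete
```

Here `found` holds a two-hop path connecting a question entity to an answer entity, while `missing` is `None` because the toy graph has no edge linking the two concepts. A generator backed by a language model would instead be asked to produce a plausible path in a form similar to the output of `linearize`.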
