Confidence Intervals for Policy Evaluation in Adaptive Experiments


Nov 07, 2019

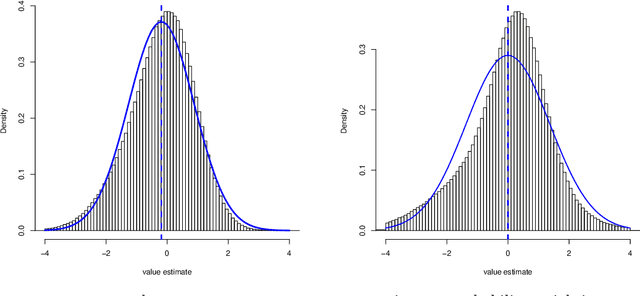

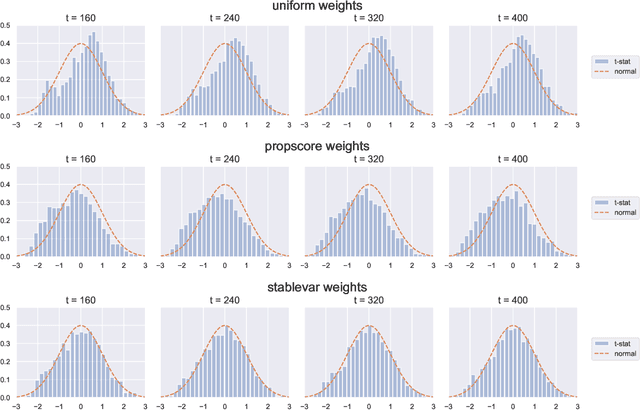

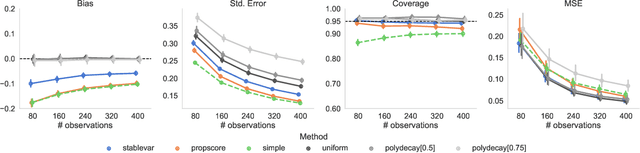

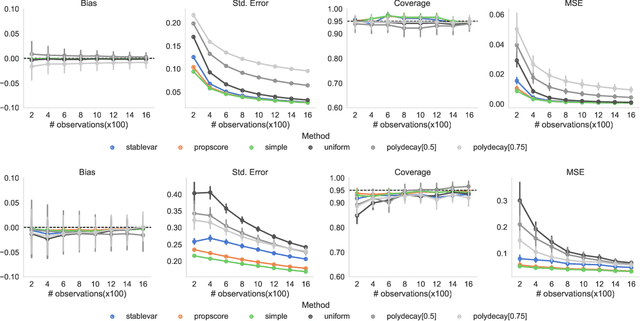

Adaptive experiments can result in considerable cost savings in multi-armed trials by enabling analysts to quickly focus on the most promising alternatives. Most existing work on adaptive experiments (which include multi-armed bandits) has focused on maximizing the speed at which the analyst can identify the optimal arm and/or minimizing the number of draws from sub-optimal arms. In many scientific settings, however, it is of interest not only to identify the optimal arm but also to perform a statistical analysis of the data collected from the experiment. Naive approaches to statistical inference with adaptively collected data fail because many commonly used statistics (such as sample means or inverse propensity weighting) do not have an asymptotically Gaussian limiting distribution centered on the estimand, and so confidence intervals constructed from these statistics do not have correct coverage. But, as shown in this paper, carefully designed data-adaptive weighting schemes can be used to overcome this issue and restore a relevant central limit theorem, enabling hypothesis testing. We validate the accuracy of the resulting confidence intervals in numerical experiments.
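The failure of naive inference described in the abstract can be illustrated with a small simulation. The sketch below is not the paper's method or code: it assumes a hypothetical two-armed epsilon-greedy experiment in which both arms have true mean 0 and unit-variance Gaussian rewards, and it only examines the plain sample mean of one arm, not the adaptive weighting scheme the paper proposes. The function name `simulate_arm`, the exploration rate, the horizon, and the number of replications are all illustrative choices.

```python
import numpy as np

def simulate_arm(n_reps=10000, horizon=100, eps=0.1, seed=0):
    """Run epsilon-greedy on two arms whose true means are both 0.

    Returns, for arm 0 in each replication, the final sample mean
    and the number of times the arm was pulled.
    All settings here are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    sums = np.zeros((n_reps, 2))    # running reward totals per arm
    counts = np.zeros((n_reps, 2))  # running pull counts per arm
    rows = np.arange(n_reps)
    for t in range(horizon):
        if t < 2:
            # Pull each arm once so every sample mean is defined.
            arm = np.full(n_reps, t)
        else:
            greedy = np.argmax(sums / counts, axis=1)
            explore = rng.random(n_reps) < eps
            random_arm = rng.integers(0, 2, size=n_reps)
            arm = np.where(explore, random_arm, greedy)
        reward = rng.standard_normal(n_reps)  # true mean 0 for both arms
        sums[rows, arm] += reward
        counts[rows, arm] += 1
    return sums[:, 0] / counts[:, 0], counts[:, 0]

estimates, pulls = simulate_arm()

# Average error of the sample mean across replications (true value is 0).
bias = estimates.mean()

# Naive 95% interval that treats the pulls as an i.i.d. sample with known sd 1.
half_width = 1.96 / np.sqrt(pulls)
coverage = np.mean(np.abs(estimates) <= half_width)

print(f"empirical bias of the sample mean: {bias:.4f}")
print(f"empirical coverage of the naive 95% interval: {coverage:.3f}")
```

The printed bias can be compared against 0 and the printed coverage against the nominal 95% level. Because the number of pulls of each arm depends on earlier rewards, the sample mean is no longer an average of a fixed-size i.i.d. sample, which is the situation the data-adaptive weighting schemes are meant to address.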
