Confidence-Guided Learning Process for Continuous Classification of Time Series

Aug 14, 2022

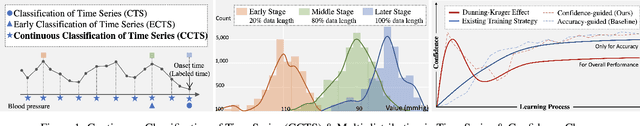

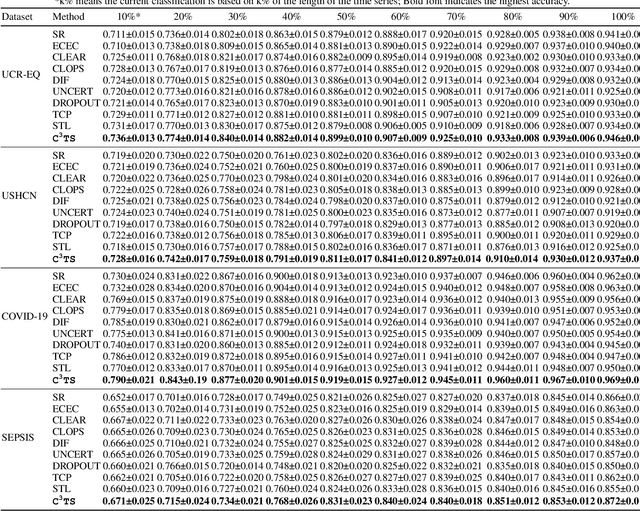

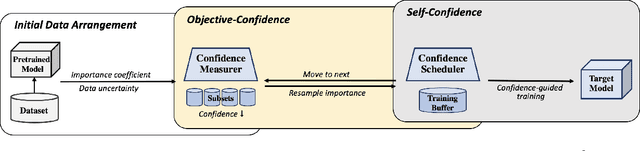

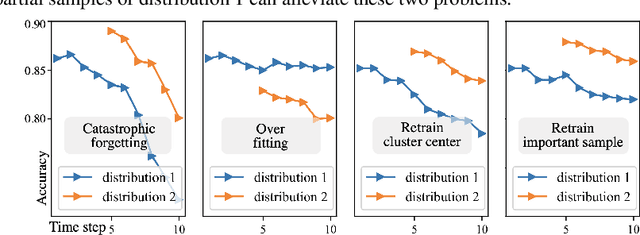

In the real world, the class of a time series is usually labeled only at the final time point, but many applications require classification at every time point. For example, the outcome of a critically ill patient is determined only at the end, yet the patient should be diagnosed continuously so that treatment is timely. We therefore propose a new concept: Continuous Classification of Time Series (CCTS). It requires the model to learn data from different time stages. However, a time series evolves dynamically, so the data distribution differs across stages. When a model learns multiple distributions, it tends to forget or overfit. We suggest that a meaningful learning schedule is possible because of an interesting observation: measured by confidence, the process of a model learning multiple distributions is similar to the process of a human learning multiple kinds of knowledge. We thus propose a novel Confidence-guided method for CCTS (C3TS). It imitates the alternating human confidence described by the Dunning-Kruger effect. We define objective-confidence to arrange the data and self-confidence to control the learning duration. Experiments on four real-world datasets show that C3TS is more accurate than all baselines for CCTS.
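To make the CCTS setting concrete, the following is a minimal sketch of how a single series labeled only at its final time could be expanded into one training sample per time point. It illustrates the problem setup only, not the C3TS method itself; the function name `prefix_samples` and the parameter `min_len` are hypothetical and do not come from the paper.

```python
from typing import List, Sequence, Tuple


def prefix_samples(
    series: Sequence[float], final_label: int, min_len: int = 1
) -> List[Tuple[List[float], int]]:
    """Pair every prefix of `series` with the label known at the final time.

    Assumption: the final label is reused as the target for each earlier
    time point, so a model can be trained to classify at every step.
    """
    return [
        (list(series[:t]), final_label)
        for t in range(min_len, len(series) + 1)
    ]


# One series of three observations yields three samples, one per time point.
samples = prefix_samples([0.1, 0.4, 0.9], final_label=1)
```

Prefixes of different lengths come from different time stages and so may follow different distributions, which is the multi-distribution learning problem the abstract describes.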
