Computation Offloading in Beyond 5G Networks: A Distributed Learning Framework and Applications

Paper and Code

Jul 15, 2020

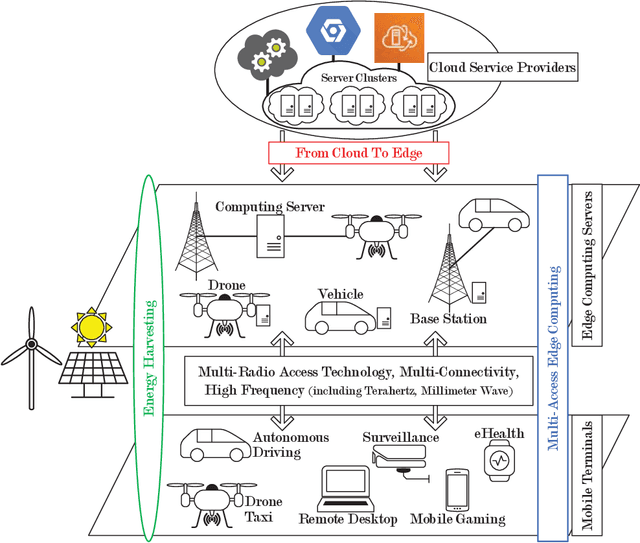

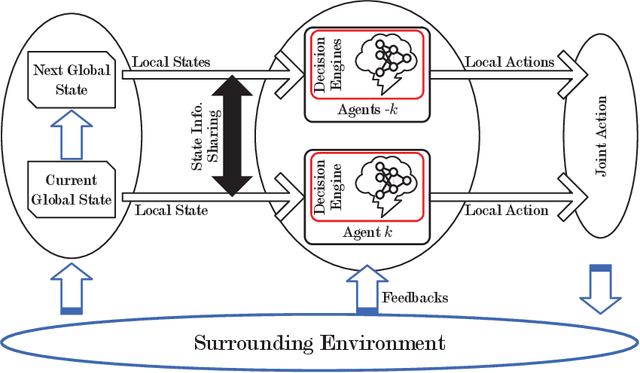

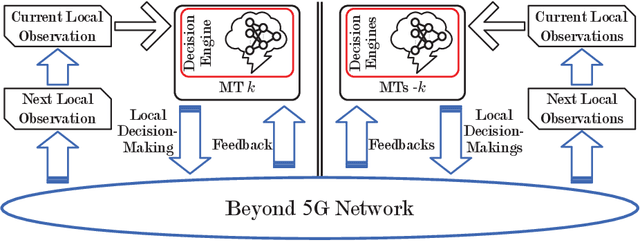

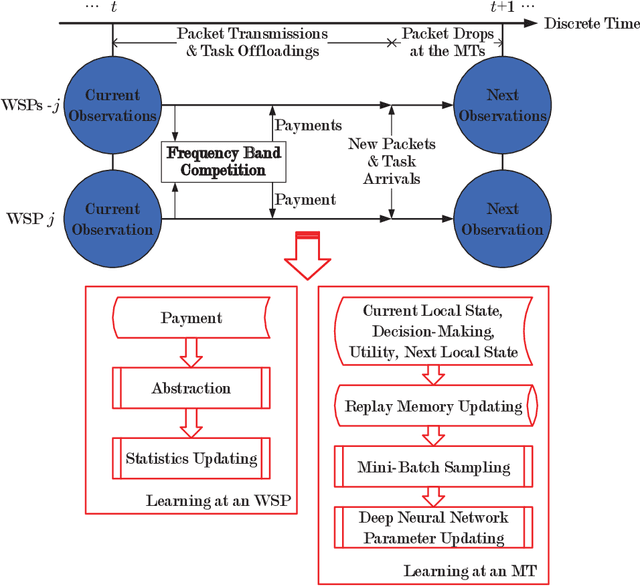

Facing the trend of merging wireless communications and multi-access edge computing (MEC), this article studies computation offloading in beyond fifth-generation networks. To address the technical challenges originating from the uncertainties and the sharing of limited resources in an MEC system, we formulate the computation offloading problem as a multi-agent Markov decision process, for which a distributed learning framework is proposed. We present a case study on resource orchestration in computation offloading to showcase the potential of an online distributed reinforcement learning algorithm developed under the proposed framework. Experimental results demonstrate that our learning algorithm outperforms the benchmark resource orchestration algorithms. Furthermore, we outline research directions that merit in-depth investigation to minimize the time cost, which is one of the main practical issues preventing the implementation of the proposed distributed learning framework.
