Compressive Sensing with Low Precision Data Representation: Theory and Applications


Jun 06, 2018

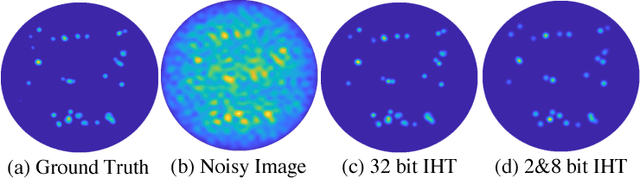

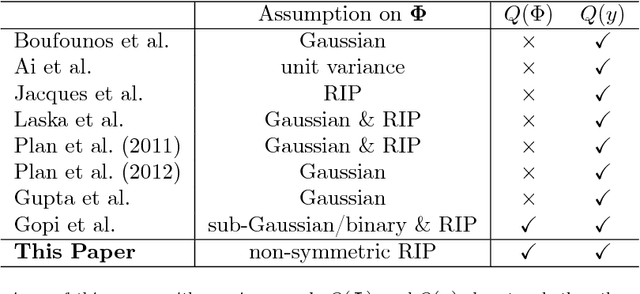

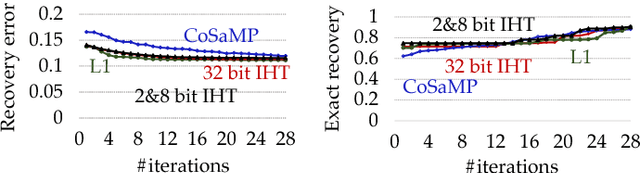

Modern scientific instruments produce vast amounts of data, which can overwhelm the processing ability of computer systems. Lossy compression of data is an intriguing solution, but it comes with its own dangers, such as potential signal loss and the need for careful parameter optimization. In this work, we focus on a setting where this problem is especially acute, compressive sensing frameworks for radio astronomy, and ask: can the precision of the data representation be lowered for all inputs, with both recovery guarantees and practical performance? Our first contribution is a theoretical analysis of the Iterative Hard Thresholding (IHT) algorithm when all input data, that is, the measurement matrix and the observation, are quantized aggressively to as little as 2 bits per value. Under reasonable constraints, we show that there exists a variant of low-precision IHT that can still provide recovery guarantees. The second contribution is an analysis of our general quantized framework tailored to radio astronomy, showing that its conditions are satisfied in this case. We evaluate our approach using CPU and FPGA implementations, and show that it can achieve up to a 9.19x speedup with negligible loss of recovery quality on real telescope data.
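To make the setting concrete, the following is a minimal sketch of textbook IHT run on inputs that have first been rounded to a few bits per value. It is not the low-precision variant analyzed in the paper. The function names (`quantize`, `hard_threshold`, `iht`), the deterministic nearest-level rounding, the fixed `scale`, and the constant step size are all assumptions chosen for illustration.

```python
def quantize(values, bits, scale):
    """Round each value to the nearest of 2**bits evenly spaced levels
    in [-scale, scale]. Deterministic rounding is an assumption here."""
    intervals = 2 ** bits - 1            # gaps between adjacent levels
    step = 2.0 * scale / intervals
    out = []
    for v in values:
        clipped = max(-scale, min(scale, v))
        index = round((clipped + scale) / step)
        out.append(-scale + index * step)
    return out


def hard_threshold(x, s):
    """Keep the s largest-magnitude entries of x and zero out the rest."""
    order = sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)
    keep = set(order[:s])
    return [x[i] if i in keep else 0.0 for i in range(len(x))]


def iht(A, y, s, step, iters):
    """Textbook IHT: x <- H_s(x + step * A^T (y - A x)), starting from zero.
    A is a list of rows, y a list of observations."""
    m, n = len(A), len(A[0])
    x = [0.0] * n
    for _ in range(iters):
        # Residual of the current estimate: y - A x
        residual = [y[i] - sum(A[i][j] * x[j] for j in range(n)) for i in range(m)]
        # Gradient direction: A^T residual
        grad = [sum(A[i][j] * residual[i] for i in range(m)) for j in range(n)]
        # Gradient step followed by projection onto s-sparse vectors
        x = hard_threshold([x[j] + step * grad[j] for j in range(n)], s)
    return x


def quantized_iht(A, y, s, step, iters, bits, scale):
    """Quantize both the measurement matrix and the observation, then run IHT."""
    A_q = [quantize(row, bits, scale) for row in A]
    y_q = quantize(y, bits, scale)
    return iht(A_q, y_q, s, step, iters)
```

With `bits=2`, every entry of the matrix and the observation takes one of only four values, which is the regime the abstract describes. Whether recovery still succeeds depends on the conditions the paper establishes, which this sketch does not check.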
